2017 Volume 57 Issue 11 Pages 2022-2027

Due to the complicated characteristics of modeling data in industrial blast furnaces (e.g., nonlinearity, non-Gaussianity, and uneven distribution), developing accurate data-driven models for silicon content prediction is not easy. Instead of using a fixed model, an ensemble non-Gaussian local regression (ENLR) method is developed in a simple just-in-time-learning manner. Independent component analysis is utilized to handle the non-Gaussian information in the selected similar data. Then, a local probabilistic prediction model is built using Gaussian process regression. Moreover, without cumbersome efforts for model selection, the probabilistic information is adopted as an efficient criterion for the final prediction. Consequently, ENLR achieves more accurate predictions. The advantages of the proposed method are validated on online silicon content prediction in comparison with other just-in-time-learning models.

The main purpose of industrial blast furnaces is to chemically reduce and physically convert iron oxides into liquid iron, called hot metal. With the increasing need for iron and steel, modeling and controlling the silicon content to increase productivity and reduce potential cost have been widely researched. As an important index of the thermal state, the silicon content in hot metal must be controlled at an appropriate level. However, the silicon content is difficult to measure online. Additionally, because of complex chemical reactions and transfer phenomena, an accurate first-principles model is still not available for industrial applications.1,2,3,4,5,6)

Alternatively, using easy-to-measure variables, data-driven empirical models (also called soft sensors or inferential sensors) have been applied to predict the silicon content online.7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29) Without deep process knowledge, data-driven soft sensors for silicon content prediction can be established quickly. Among them, neural networks (NN) and support vector regression (SVR) are commonly utilized in chemical processes due to their good nonlinear fitting performance.30) However, the structure/parameter selection of NN and SVR is not easy. Additionally, both of them are deterministic methods. Recently, Gaussian process regression (GPR) has attracted more attention in chemical processes.31,32,33) Compared with NN and SVR, GPR can be trained much more easily. Additionally, probabilistic information can be obtained along with its predictions.31,32,33) This property can be utilized as an evaluation criterion for a prediction when the actual value is not available. To the best of our knowledge, no probabilistic soft sensor has yet been applied to blast furnaces. To this end, this work aims to develop a GPR-based probabilistic soft sensor for silicon content prediction.

Many soft sensor models assume that the secondary variables are known from process knowledge. However, without process or expert knowledge, it is difficult to choose suitable secondary variables for industrial processes. Additionally, secondary variables should be preprocessed suitably, mainly because they often show co-linearity arising from partial redundancy in the sensor arrangement and because they are contaminated with process noise.30) In practice, industrial processes are usually driven by a few essential variables which may not be measured, and the measured process variables may be combinations of these independent latent variables. The principal component analysis (PCA) approach has been adopted to select the most suitable secondary variables as the inputs of a soft sensor.34) However, PCA can only extract the Gaussian information. Instead of producing merely uncorrelated components, independent component analysis (ICA) attempts to achieve statistically independent components in the transformed vectors.35,36) ICA, originally developed for blind source separation, can handle the non-Gaussian information in process data.35,36) Recently, ICA has been applied to non-Gaussian chemical process monitoring and has shown better performance than PCA.37)

The efficient selection and analysis of secondary variables has received less attention for industrial ironmaking processes. Additionally, in practice, it is more suitable to use multiple local models than a single fixed one. To address these two issues, an ensemble non-Gaussian local regression (ENLR) method is developed in a just-in-time-learning (JITL) manner. For online prediction of each query sample, the ICA-based feature extraction method is adopted to suitably select secondary variables in the similar set. Several candidate GPR-based local prediction models are then built. Moreover, without a cumbersome parameter selection process, the probabilistic information is adopted as an evaluation criterion for the final prediction. These properties make the ENLR method suitable for relatively long-term utilization.

The remainder of this work is organized as follows. The GPR soft sensing method is described in Section 2. In Section 3, with ICA-based feature extraction, the detailed implementation of the ENLR online modeling and prediction method is proposed. In Section 4, ENLR is applied to online silicon content prediction and compared with other JITL methods. Finally, a conclusion is drawn in Section 5.

The GPR-based soft sensor modeling method is to approximate a data set {X,y}, where

| (1) |

| (2) |

By training the GPR model with a Bayesian method, the parameters θ can be estimated. Detailed algorithmic implementations can be found in the literature.31) Finally, for a test sample xt, the predicted output of yt is also Gaussian, with its mean and variance given by Eqs. (3) and (4), respectively.

| (3) |

| (4) |
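As a rough illustration of the probabilistic prediction described above, the following sketch implements standard zero-mean GPR prediction with NumPy. The squared-exponential covariance, the fixed hyperparameter values (`length_scale`, `signal_var`, `noise_var`), and all function names are assumptions made for this example; the article estimates θ with a Bayesian method, and its covariance function may differ.

```python
import numpy as np


def sq_exp_kernel(A, B, length_scale=1.0, signal_var=1.0):
    """Squared-exponential covariance between the rows of A and the rows of B."""
    sq_dist = (
        np.sum(A**2, axis=1)[:, None]
        + np.sum(B**2, axis=1)[None, :]
        - 2.0 * (A @ B.T)
    )
    return signal_var * np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


def gpr_predict(X, y, X_test, length_scale=1.0, signal_var=1.0, noise_var=1e-2):
    """Return the predictive mean and variance of a zero-mean GPR model."""
    # Covariance of the training outputs, including observation noise.
    C = sq_exp_kernel(X, X, length_scale, signal_var) + noise_var * np.eye(len(X))
    # Covariance between training inputs and test inputs, shape (N, M).
    k_star = sq_exp_kernel(X, X_test, length_scale, signal_var)
    L = np.linalg.cholesky(C)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))  # equals C^{-1} y
    v = np.linalg.solve(L, k_star)
    mean = k_star.T @ alpha
    var = signal_var + noise_var - np.sum(v**2, axis=0)
    return mean, var


# Toy one-dimensional example (synthetic data, not blast furnace data).
X_train = np.linspace(0.0, 5.0, 20)[:, None]
y_train = np.sin(X_train[:, 0])
X_new = np.array([[2.5], [9.0]])  # one point inside the data range, one far outside
mean, var = gpr_predict(X_train, y_train, X_new)
```

The variance returned for the point far from the training data is much larger than for the point inside the data range, which is the property exploited later as a prediction-quality indicator.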

Using suitable local models has two main advantages over using only a global/fixed model. First, it is not easy to construct a global/fixed model with a suitable structure for complicated processes. Second, for some processes with complex characteristics and changing dynamics, a fixed model may not be reliable for long-term utilization. Alternatively, JITL-based local models for nonlinear process modeling are more flexible.33,38,39,40,41,42)

Take the JITL-based GPR (simply denoted as JGPR) modeling method as an example. For a query sample xq, there are three steps to construct a JGPR model.33) First, choose a similar set Sq from the historical database S using some defined similarity criteria. Second, build a JGPR model fJGPR (xq) using the similar dataset Sq. Third, predict the output for xq online.

However, the input data used to construct a JGPR model may not follow a Gaussian distribution. The ICA method is adopted to handle this problem. ICA, originally developed for solving the blind source separation problem, is a statistical and computational method for revealing hidden factors that underlie sets of random variables, measurements, or signals.35,36) Assume that the kth sample with D measured variables xk = [xk1,···,xkD]T can be expressed as a linear combination of d(≤D) unknown independent components [s1,···,sd]T. Then, the whitened data matrix Xsim = [x1,...,xn]∈RD×n selected from the historical database in the JITL manner can be represented as35,36)

| (5) |

| (6) |

An efficient method, known as FastICA,35,36) is adopted to obtain all the independent components. Hyvarinen and Oja validated that the FastICA algorithm is computationally efficient compared to other competitive ICA algorithms.36) Consequently, by applying ICA to the whitened data Xsim, the reconstructed data matrix can be obtained.
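To make the feature extraction step more concrete, the sketch below whitens a data matrix and then runs a basic symmetric FastICA iteration with a tanh nonlinearity. It is a simplified illustration, not the authors' implementation: the function names, the iteration settings, the synthetic sources, and the mixing matrix `A` are all hypothetical.

```python
import numpy as np


def whiten(X):
    """Center and whiten X (variables in rows, samples in columns)."""
    Xc = X - X.mean(axis=1, keepdims=True)
    eigval, eigvec = np.linalg.eigh(np.cov(Xc))
    Q = np.diag(eigval**-0.5) @ eigvec.T  # whitening matrix
    return Q @ Xc


def sym_decorrelate(W):
    """Make the rows of W orthonormal: W <- (W W^T)^{-1/2} W."""
    eigval, eigvec = np.linalg.eigh(W @ W.T)
    return eigvec @ np.diag(eigval**-0.5) @ eigvec.T @ W


def fast_ica(Z, d, n_iter=200, tol=1e-8, seed=0):
    """Symmetric FastICA on whitened data Z (D x n), extracting d components.

    Returns the d x D demixing matrix W and the d x n estimated components.
    """
    rng = np.random.default_rng(seed)
    n = Z.shape[1]
    W = sym_decorrelate(rng.standard_normal((d, Z.shape[0])))
    for _ in range(n_iter):
        G = np.tanh(W @ Z)
        # Fixed-point update: E[z g(w^T z)] - E[g'(w^T z)] w for each row w.
        W_new = (G @ Z.T) / n - (1.0 - G**2).mean(axis=1)[:, None] * W
        W_new = sym_decorrelate(W_new)
        converged = np.max(np.abs(np.abs(np.sum(W_new * W, axis=1)) - 1.0)) < tol
        W = W_new
        if converged:
            break
    return W, W @ Z


# Synthetic example: three non-Gaussian sources observed through a linear mixture.
rng = np.random.default_rng(1)
n = 2000
S_true = np.vstack([
    rng.uniform(-1.0, 1.0, n),
    rng.laplace(0.0, 1.0, n),
    np.sign(rng.standard_normal(n)) * rng.uniform(0.5, 1.0, n),
])
A = np.array([[1.0, 0.5, 0.2], [0.3, 1.0, 0.4], [0.2, 0.6, 1.0]])
X_sim = A @ S_true
W, S_hat = fast_ica(whiten(X_sim), d=2)  # keep d = 2 of D = 3 directions
```

Because the demixing rows are kept orthonormal and the input is whitened, the extracted components are uncorrelated with unit variance by construction; the tanh iteration additionally pushes them toward statistical independence.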

Unlike traditional JITL-based modeling methods for searching similar samples, a simple clustering approach is integrated into the proposed online modeling method. The fuzzy c-means (FCM) clustering algorithm divides N samples into p clusters such that samples within a given cluster have a higher degree of similarity, whereas samples belonging to different clusters are dissimilar.43) It has been widely applied to many clustering problems and has shown superiority to the classic k-means method. For a query sample xq, it is likely that the samples in the same cluster are its similar samples. Consequently, the relevant data can be simply obtained using the FCM clustering method.
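The similar-sample selection can be sketched as follows. This is a minimal FCM implementation under common default choices (fuzzifier `m = 2`, Euclidean distance, random initial memberships), which are assumptions rather than settings reported in the article; the two-cluster toy data are synthetic.

```python
import numpy as np


def fuzzy_c_means(X, p, m=2.0, n_iter=100, tol=1e-6, seed=0):
    """Cluster the rows of X into p fuzzy clusters.

    Returns the p x D cluster centers and the N x p membership matrix.
    """
    rng = np.random.default_rng(seed)
    U = rng.random((X.shape[0], p))
    U /= U.sum(axis=1, keepdims=True)  # memberships of each sample sum to one
    for _ in range(n_iter):
        Um = U**m
        centers = (Um.T @ X) / Um.sum(axis=0)[:, None]
        dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        dist = np.maximum(dist, 1e-12)  # avoid division by zero
        inv = dist ** (-2.0 / (m - 1.0))
        U_new = inv / inv.sum(axis=1, keepdims=True)
        shift = np.max(np.abs(U_new - U))
        U = U_new
        if shift < tol:
            break
    return centers, U


# Synthetic historical inputs drawn from two operating regions, plus one query.
rng = np.random.default_rng(0)
X_hist = np.vstack([rng.normal(0.0, 0.3, (30, 2)), rng.normal(3.0, 0.3, (30, 2))])
x_q = np.array([2.9, 3.1])

# Cluster the query together with the historical inputs, then keep the
# historical samples whose dominant cluster matches that of the query.
data = np.vstack([X_hist, x_q])
_, U = fuzzy_c_means(data, p=2)
labels = U.argmax(axis=1)
similar_idx = np.flatnonzero(labels[:-1] == labels[-1])
```

Here the membership matrix is hardened with `argmax` to obtain a single cluster per sample; other ways of using the fuzzy memberships are possible.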

There are two user-defined parameters (p,d) in determining an ENLR model. The first one is the number of clusters p (2 ≤ p ≤ 6 for this application case). The second one is the number of ICA-based extracted variables d (2 ≤ d ≤ D, where D is the number of input variables and here D = 7). Consequently, there are altogether 5 × 6 = 30 pairs of candidate parameters (pl,dl),l = 1,···,30. The traditional cross-validation method for choosing among them is time-consuming. Additionally, using only a single model may lead to overfitting in some regions. Alternatively, ensemble learning methods are attractive in the machine learning area. For several regression applications, ensemble models have shown better performance than a single regression model.44,45,46,47) Here, using the probabilistic information, a simple ensemble strategy is formulated for the final prediction of ENLR.
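The candidate set can be enumerated directly from the two ranges given above; the variable names in this short sketch are illustrative.

```python
from itertools import product

D = 7                       # number of input variables in this case study
p_values = range(2, 7)      # number of clusters, 2 <= p <= 6
d_values = range(2, D + 1)  # number of extracted variables, 2 <= d <= D

# Every (p, d) pair defines one candidate local model.
candidates = list(product(p_values, d_values))
```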

For online prediction of a new sample xq, with different modeling samples and parameters (pl,dl),l = 1,···,L, each local model can show its individual prediction performance. Suppose there are altogether L candidate models, each with a prediction

| (7) |

It follows that 0<βq,l<1,l = 1,···,L. Finally, the final prediction of the ENLR model can be obtained as

| (8) |
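The weighting idea behind Eqs. (7) and (8) can be illustrated with a small sketch. The normalized inverse-variance form used here is an assumption, chosen because it yields weights between 0 and 1 that favor candidate models with a small prediction variance; the exact definition in Eq. (7) may differ. The numerical values are hypothetical.

```python
def ensemble_predict(means, variances):
    """Combine L local predictions using normalized inverse-variance weights."""
    precisions = [1.0 / v for v in variances]
    total = sum(precisions)
    weights = [prec / total for prec in precisions]
    prediction = sum(w * mu for w, mu in zip(weights, means))
    return prediction, weights


# Three hypothetical local predictions of the silicon content and their variances.
y_hat, beta = ensemble_predict([0.50, 0.56, 0.70], [0.01, 0.02, 0.10])
```

With these inputs the most confident model (variance 0.01) receives the largest weight, so the combined prediction stays close to 0.50 rather than being pulled toward the uncertain value 0.70.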

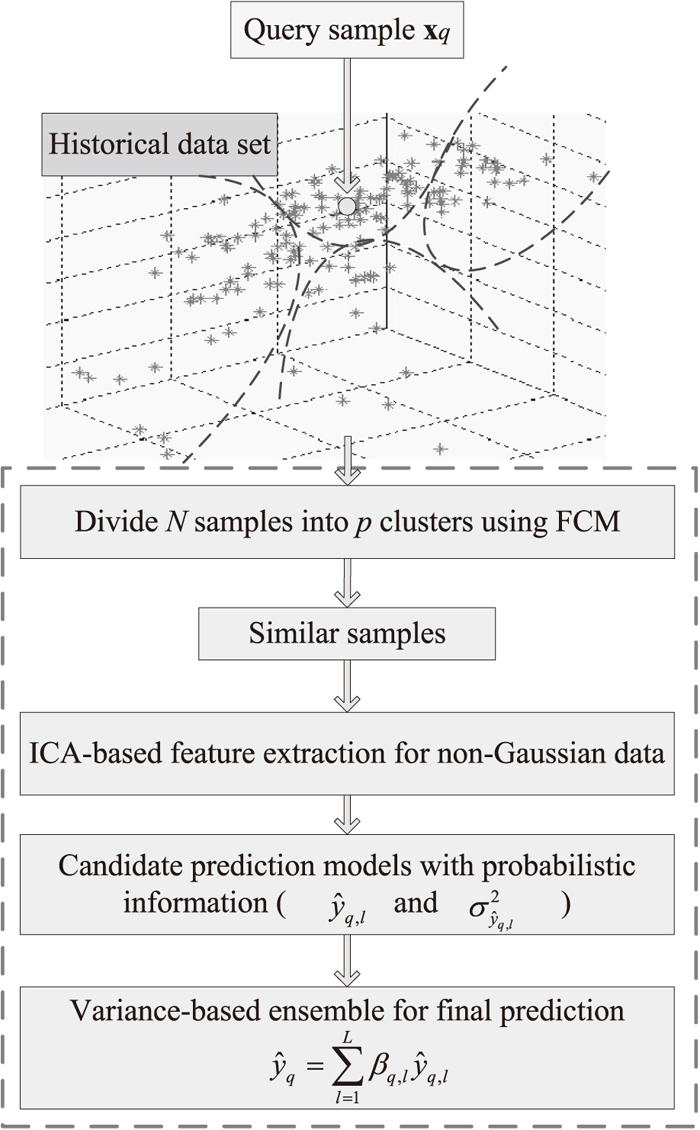

Based on the aforementioned modeling strategies, for a query sample xq, the step-by-step procedures of the proposed ENLR-based online modeling method are summarized as follows.

ENLR Step 1: Collect the process input and output data samples as the historical database, i.e. S = {X,Y}.

ENLR Step 2: For an input measurement in the test set, i.e., xq, first add it to X. Then, adopt the FCM clustering method to partition {X,xq} into p clusters. Identify the samples in the same cluster as xq; they form the relevant dataset Ssim = {Xsim,Ysim}. Apply ICA to the whitened data Xsim to obtain the reconstructed data matrix.

ENLR Step 3: For a set of candidate parameters (pl,dl),l = 1,···,L, construct several candidate JGPR-based local models. The related weights βq,l for these candidate models can be obtained from Eq. (7). Finally, using an ensemble learning method, make an online prediction for xq.

ENLR Step 4: Return to Step 2 and Step 3 and repeat the same procedures for online prediction of the next new input.
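The control flow of Steps 2 and 3 can be sketched as a loop over the candidate parameter pairs. To keep the example short and dependency-free, the three building blocks are passed in as functions, and the stand-ins used below (nearest-neighbor selection instead of FCM, column truncation instead of ICA, a sample-mean model instead of GPR, and inverse-variance weighting) are deliberately crude placeholders. All names and data are hypothetical; only the overall structure is meant to mirror the procedure.

```python
def enlr_predict(x_q, X_hist, y_hist, candidates, select_similar, extract, fit_predict):
    """Run Steps 2 and 3 for a single query sample x_q.

    select_similar(X_hist, x_q, p) -> indices of the relevant samples
    extract(X_sim, x_q, d)         -> (features of X_sim, features of x_q)
    fit_predict(F_sim, y_sim, f_q) -> (predictive mean, predictive variance)
    """
    means, variances = [], []
    for p, d in candidates:
        idx = select_similar(X_hist, x_q, p)
        X_sim = [X_hist[i] for i in idx]
        y_sim = [y_hist[i] for i in idx]
        F_sim, f_q = extract(X_sim, x_q, d)
        mean, var = fit_predict(F_sim, y_sim, f_q)
        means.append(mean)
        variances.append(var)
    precisions = [1.0 / v for v in variances]  # assumed inverse-variance weighting
    return sum(pr * mu for pr, mu in zip(precisions, means)) / sum(precisions)


def nearest(X_hist, x_q, p):
    # Stand-in for FCM: keep the len(X_hist) // p samples closest to x_q.
    def sq_dist(i):
        return sum((a - b) ** 2 for a, b in zip(X_hist[i], x_q))
    order = sorted(range(len(X_hist)), key=sq_dist)
    return order[: max(2, len(X_hist) // p)]


def first_d(X_sim, x_q, d):
    # Stand-in for ICA: keep the first d variables.
    return [row[:d] for row in X_sim], x_q[:d]


def mean_model(F_sim, y_sim, f_q):
    # Stand-in for GPR: sample mean and sample variance of the local outputs.
    mu = sum(y_sim) / len(y_sim)
    var = sum((v - mu) ** 2 for v in y_sim) / (len(y_sim) - 1) + 1e-9
    return mu, var


X_hist = [[float(i), float(i % 3)] for i in range(12)]
y_hist = [0.4 + 0.02 * i for i in range(12)]
y_q = enlr_predict([5.0, 1.0], X_hist, y_hist, [(2, 1), (3, 2)],
                   nearest, first_d, mean_model)
```

Step 4 then amounts to calling `enlr_predict` again for each new query sample.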

The main modeling and prediction implementations of ENLR are summarized in Fig. 1. Compared with traditional JITL-based soft sensors, ENLR has two main advantages. One is that variable extraction is integrated into the online prediction framework. The newly extracted variables are independent of each other because the correlation among the process variables is removed. The other is that the ensemble strategy makes the ENLR method more reliable and efficient when it is used over a relatively long term for silicon content prediction.

Fig. 1. The flowchart of the ENLR-based online soft sensor modeling method.

The proposed ENLR probabilistic modeling method is applied to online silicon content prediction in an industrial blast furnace in China. There are seven input variables (i.e., D = 7) related to the silicon content, including the blast temperature, the blast volume, the gas permeability, the top pressure, the top temperature, the ore/coke ratio, and the pulverized coal injection.19,20,22) The silicon content is analyzed offline and infrequently. Consequently, the soft sensor is constructed using the online measured variables. After simply removing obvious outliers using the 3-sigma criterion, a set of 340 data samples is investigated. About two thirds of the data (about 230 samples) are treated as the historical set, and the remaining data (about 110 samples) are used for testing. It should be noted that the noisy data still contain some inconspicuous outliers. As illustrated in Fig. 2, several input variables are nonlinearly correlated, and the data in different operating areas are distributed irregularly.
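A 3-sigma screening of a single variable can be sketched as below. The function name and the synthetic values are illustrative; the article does not state whether the criterion is applied per variable or how the mean and standard deviation are estimated, so the per-variable sample statistics used here are an assumption.

```python
import statistics


def three_sigma_mask(values):
    """Flag samples lying within three standard deviations of the mean."""
    mu = statistics.mean(values)
    sigma = statistics.stdev(values)
    return [abs(v - mu) <= 3.0 * sigma for v in values]


# Thirty synthetic readings around 0.5 followed by one obvious outlier.
readings = [0.45 + 0.01 * (i % 10) for i in range(30)] + [3.0]
mask = three_sigma_mask(readings)
cleaned = [v for v, keep in zip(readings, mask) if keep]
```

Because the outlier itself inflates the estimated standard deviation, this rule only catches gross errors, which is consistent with the remark that some inconspicuous outliers remain in the data.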

Fig. 2. The spatial relationship of several process input variables in the historical data set.

To evaluate the prediction performance, three indices, namely the root-mean-square error (RMSE), the relative RMSE (denoted as RE), and the hit rate (HR),19,20,21,22,23,24,25,26) are utilized and defined below.

| (9) |

| (10) |

| (11) |
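The three indices can be computed as in the following sketch. The definitions assumed here are the ones commonly used in silicon content prediction studies: RE is the root-mean-square of the relative errors expressed in percent, and HR is the percentage of predictions whose absolute error does not exceed a tolerance. The tolerance value `tol=0.1` and the sample values are assumptions for illustration and may differ from Eqs. (9) to (11).

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error."""
    return math.sqrt(sum((y - yh) ** 2 for y, yh in zip(y_true, y_pred)) / len(y_true))


def relative_rmse(y_true, y_pred):
    """Relative RMSE in percent."""
    total = sum(((y - yh) / y) ** 2 for y, yh in zip(y_true, y_pred))
    return 100.0 * math.sqrt(total / len(y_true))


def hit_rate(y_true, y_pred, tol=0.1):
    """Percentage of predictions with absolute error no larger than tol."""
    hits = sum(1 for y, yh in zip(y_true, y_pred) if abs(y - yh) <= tol)
    return 100.0 * hits / len(y_true)


# Hypothetical measured and predicted silicon contents.
y_true = [0.50, 0.60, 0.40, 0.80]
y_pred = [0.55, 0.52, 0.45, 0.60]
scores = (rmse(y_true, y_pred), relative_rmse(y_true, y_pred), hit_rate(y_true, y_pred))
```

In this toy case three of the four predictions fall within the tolerance, so the hit rate is 75%.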

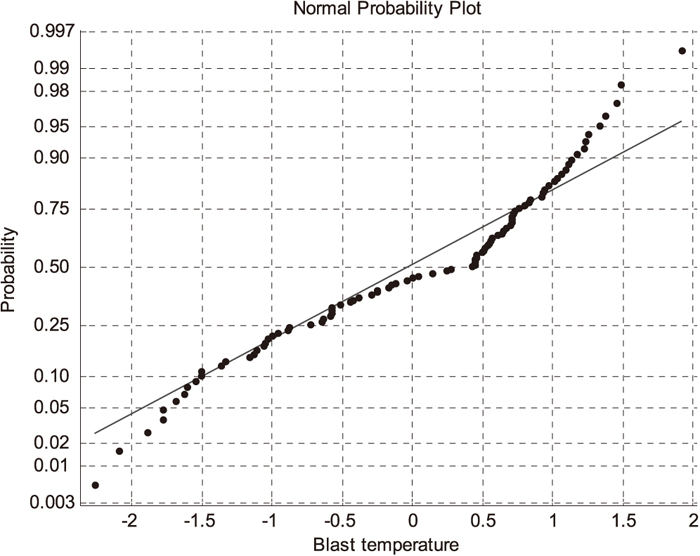

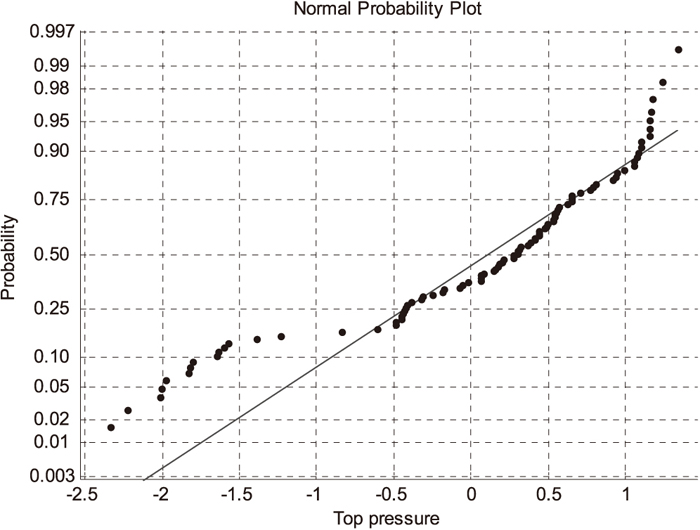

First, for online prediction of a query sample xq, the selected similar samples are analyzed. The normal probability plots of two input variables, the blast temperature and the top pressure, are shown in Figs. 3(a) and 3(b), respectively. The results indicate that the process variables deviate from the Gaussian distribution denoted by the blue dashed line in Figs. 3(a) and 3(b). For most query samples, the similar data sets are non-Gaussian distributed. Consequently, the ICA-based feature extraction algorithm should be applied to preprocess the similar data before a local model is constructed.
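A normal probability plot pairs the sorted observations with theoretical normal quantiles; points that fall close to a straight line suggest Gaussian data. The sketch below computes these points with the Python standard library and summarizes straightness with a correlation coefficient. The plotting positions `(i - 0.5) / n`, the function names, and the synthetic samples are assumptions for illustration, not the procedure used to produce Fig. 3.

```python
import random
from statistics import NormalDist, mean


def normal_probability_points(values):
    """Pair theoretical standard normal quantiles with the sorted values."""
    n = len(values)
    quantiles = [NormalDist().inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]
    return quantiles, sorted(values)


def straightness(quantiles, ordered):
    """Pearson correlation of the plot points; values near 1 suggest normality."""
    qm, vm = mean(quantiles), mean(ordered)
    cov = sum((q - qm) * (v - vm) for q, v in zip(quantiles, ordered))
    sq = sum((q - qm) ** 2 for q in quantiles)
    sv = sum((v - vm) ** 2 for v in ordered)
    return cov / (sq * sv) ** 0.5


# Compare a synthetic Gaussian sample with a strongly skewed one.
rng = random.Random(0)
gaussian_sample = [rng.gauss(0.0, 1.0) for _ in range(200)]
skewed_sample = [rng.expovariate(1.0) for _ in range(200)]
r_gauss = straightness(*normal_probability_points(gaussian_sample))
r_skewed = straightness(*normal_probability_points(skewed_sample))
```

The skewed sample gives a visibly lower correlation than the Gaussian one, which is the numerical counterpart of points bending away from the reference line in a normal probability plot.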

Fig. 3(a). The normal probability plot of the blast temperature variable in a similar set selected from the historical data set.

Fig. 3(b). The normal probability plot of the top pressure variable in a similar set selected from the historical data set.

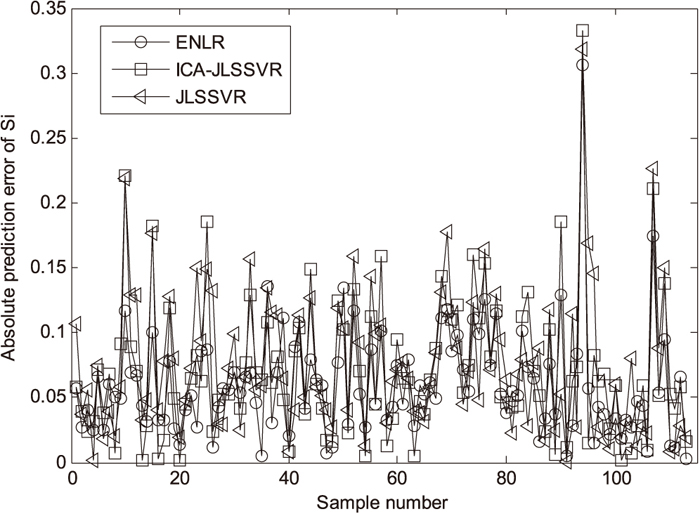

To show the advantage of ENLR, it is compared with three local soft sensors: JLSSVR (just-in-time least squares SVR),41) ICA-JLSSVR, and JGPR.33) The JLSSVR-based soft sensor applied to silicon content prediction has shown better prediction performance than a single LSSVR model.41) ICA and JLSSVR are combined into an ICA-JLSSVR prediction method, with a cross-validation strategy for selecting the model parameters. JGPR, as a probabilistic local modeling method, was previously applied to polymerization processes.33) For the test data, the online prediction values and the corresponding absolute prediction errors are shown in Figs. 4 and 5, respectively.

Fig. 4. Comparison results of online silicon content prediction using ENLR, ICA-JLSSVR, and JLSSVR models (test set).

Fig. 5. Comparison results of online silicon content prediction error using ENLR, ICA-JLSSVR, and JLSSVR models (test set).

To make the results more reliable, the above test procedures are repeated 30 times, each time with a different arrangement of the training data and the test data. The average prediction results of the ENLR, ICA-JLSSVR, JLSSVR, and JGPR methods are listed in Table 1. They show that the ENLR method achieves the best prediction performance. Additionally, local models with suitable feature extraction are generally more accurate than those without feature extraction. For this case study, the prediction accuracy of JGPR is almost the same as that of JLSSVR, and thus its results are not plotted in Figs. 4 and 5.

| Soft sensor model | Feature extraction | Model selection | RMSE | RE (%) | HR (%) | Average prediction variance |
|---|---|---|---|---|---|---|
| ENLR | Yes | Probabilistic information-based ensemble learning | 0.077 | 12.63 | 82.3 | 0.08 |
| ICA-JLSSVR | Yes | Cross validation | 0.093 | 14.48 | 73.5 | No |
| JLSSVR41) | No | Cross validation | 0.098 | 15.31 | 69.0 | No |
| JGPR33) | No | Cumulative similarity factor33) | 0.097 | 15.22 | 69.9 | 0.13 |

The main characteristics of the four modeling methods are also described briefly in Table 1. It should be noted that ENLR and JGPR are probabilistic modeling methods, while ICA-JLSSVR and JLSSVR are deterministic modeling methods. That is to say, ENLR and JGPR can provide prediction variance information. This is important for soft sensors because operators and engineers can judge whether a prediction is good or bad before the lab analysis results are available. Moreover, the average prediction variance of ENLR over all test data (0.08) is much smaller than that of JGPR (0.13). Consequently, ENLR is more reliable and useful than JGPR. In our opinion, it is unnecessary to further analyze those samples with a very small prediction variance. In such a situation, human effort on analyzing data in industrial ironmaking processes can be saved. Therefore, the prediction results show that ENLR offers more accurate prediction and more efficient implementation.

This work has proposed an ENLR soft sensor modeling method for silicon content prediction when the nonlinear modeling data are non-Gaussian and unevenly distributed. Its main distinguishing characteristics are summarized as follows. First, variable extraction and the soft sensor are integrated into the online prediction framework. Second, the probabilistic information-based ensemble learning can be efficiently implemented for the final prediction. The superiority of ENLR over other JITL-based methods is demonstrated on online silicon content prediction in an industrial blast furnace. How to automatically detect and reconcile both input and output measurement biases and misalignments with novel strategies is one of our future directions. It is also of interest to develop a probabilistic model-based controller to increase productivity and reduce potential cost.

The authors would like to gratefully acknowledge the National Natural Science Foundation of China (Grant No. 61640312) and Foundation of Key Laboratory of Advanced Process Control for Light Industry (Jiangnan University), Ministry of Education, China (Grant No. APCLI1603) for the financial support.

ENLR = ensemble non-Gaussian local regression

FCM = fuzzy c-means

GPR = Gaussian process regression

HR = hit rate

ICA = independent component analysis

ICA-JLSSVR = independent component analysis-just-in-time least squares support vector regression

JITL = just-in-time-learning

JGPR = just-in-time Gaussian process regression

JLSSVR = just-in-time least squares support vector regression

LSSVR = least squares support vector regression

NN = neural networks

PCA = principal component analysis

RE = relative root-mean-square error

RMSE = root-mean-square error

SVR = support vector regression