2023 Volume 4 Issue 2 Pages 50-59

Waste pollution detection has emerged as a crucial environmental concern in recent years, and its accuracy has improved significantly with advances in deep learning (DL) algorithms. To detect and quantify waste efficiently over large areas, unmanned aerial vehicles (UAVs) have become essential. However, UAV flights and real-world image collection demand expertise, significant time, and financial investment. These challenges are particularly prominent in specialized applications such as waste detection, which rely on large amounts of data. Notably, the availability of adequate and accurately labeled data is vital to the performance of object detection models. Therefore, identifying and acquiring suitable training data is a critical objective of this study. Provided that data quality is ensured, AI-Generated Content (AIGC), specifically content derived from Stable Diffusion, is emerging as a promising data source for DL-based object detection models. This research used Stable Diffusion to generate images from prompts that were themselves extracted from specified images. Subsequently, an existing model trained on a public dataset automatically labeled the AIGC, and the labeled images were divided in a uniform ratio for training, validation, and testing. To assess the performance differences between the generated dataset and the dataset collected in real-world scenarios, several benchmark datasets were used for accuracy evaluation. The results revealed that, in terms of F1 score, the AIGC exhibited superior accuracy in identifying high Ground Sample Distance (GSD) targets in simple backgrounds compared to the dataset collected in the real world. The results demonstrate the potential of AIGC in providing data for object detection models.

Recently, waste pollution in water ecosystems has emerged as a global environmental problem. One of the primary contributing factors is indiscriminate waste dumping. Monitoring waste pollution along riverbanks in a more cost-effective way is therefore an urgent need for riparian management. In response, drones and artificial intelligence (AI) technologies, including You Only Look Once version 5 (YOLOv5), have been employed to monitor waste pollution at riverbanks 1), 2).

These technologies have provided valuable insights into the extent of waste pollution. Nonetheless, certain challenges remain, such as the scarcity of data required for training YOLOv5, owing to the difficulty of collecting high-quality drone images featuring specific waste targets.

In particular, as depicted in Fig.1 (left), collecting the Real World Dataset requires a significant amount of equipment, such as UAVs, and the placement of specific targets on site, such as Bikes, Cardboards, PET Bottles, and Plastic Bags. When only the Real World Dataset is used for model training, the YOLOv5 in Fig.1 (right) can learn only the features in this limited dataset, which may lead to misclassification of other targets that were not included in the training (i.e., non-universal training).

As shown in Fig.1 (top), the Stable Diffusion model 3) is an open-source generative AI model for images that uses deep learning (DL) text-to-image technology. It is designed to generate detailed images based on text descriptions (i.e., prompts) and can also be used for tasks such as image-to-image (i.e., img2img) translation guided by prompts. In this study, the Stable Diffusion Dataset was generated based on the features of the targets in the Real World Dataset.

It is important to note that the quality of the Stable Diffusion Dataset depends primarily on well-crafted and accurate prompts. Images cannot be generated stably from the Real World Dataset by applying the img2img function directly, and such unstable outputs may lead to an unreliable trained model.

Although these issues exist in practical applications, no comparison has yet been conducted between YOLOv5 models trained on the Real World Dataset and on the Stable Diffusion Dataset using a benchmark-based evaluation approach. This research focuses on the possibility of replacing or enhancing the Real World Dataset with the Stable Diffusion Dataset when training YOLOv5 to detect real targets in practical waste pollution detection.

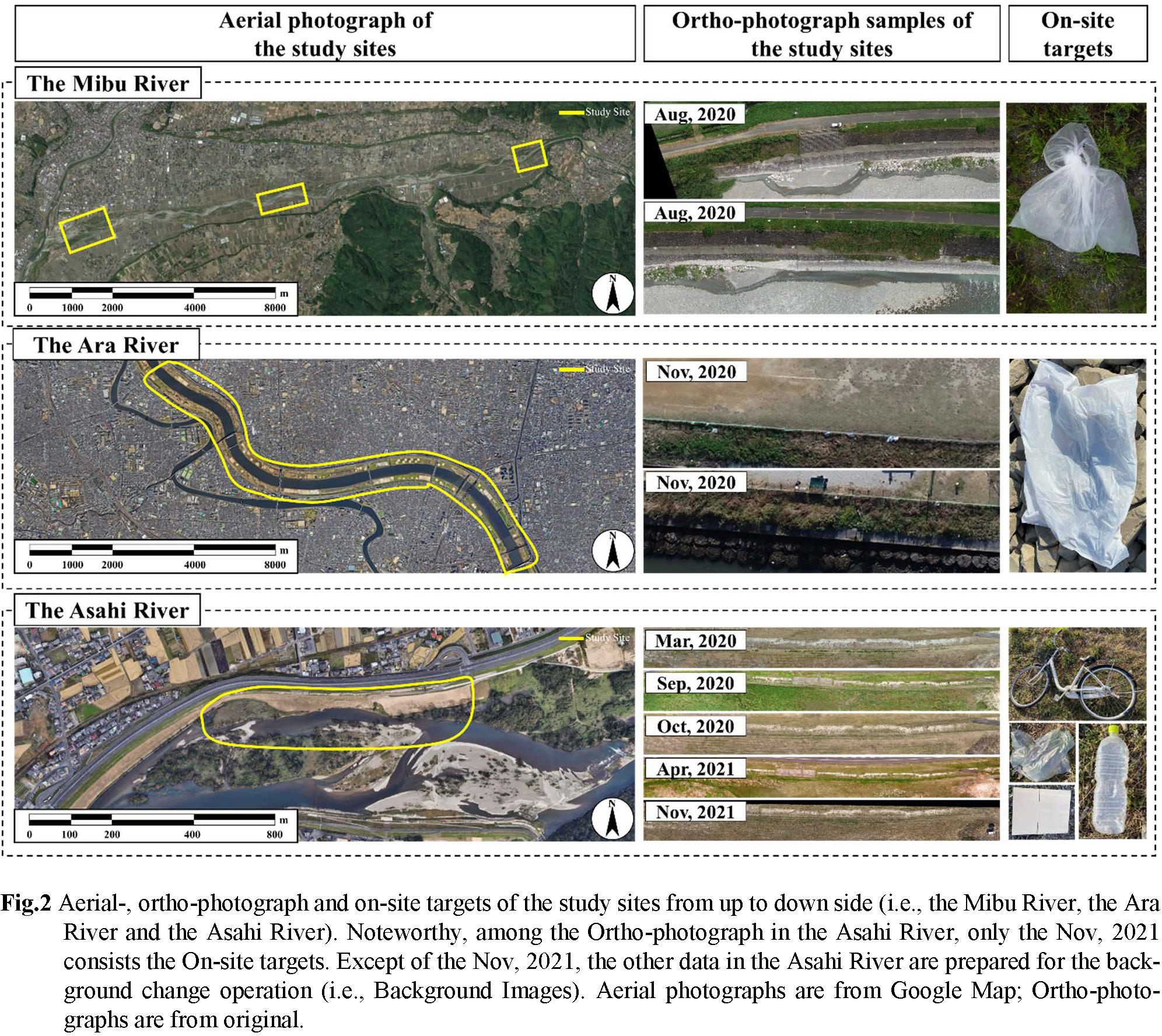

(1) Study Sites

Fig.2 (left) displays aerial photographs of the study sites, which are located, from top to bottom, on the Mibu River, the Ara River, and the Asahi River. These three rivers are state-controlled first-class rivers in Japan that flow through Nagano Prefecture, Tokyo, and Okayama Prefecture, respectively. To show the sites in more detail, ortho-photograph samples are presented in Fig.2 (middle). Fig.2 (right) shows the on-site targets at each location, which are mainly Bikes, Cardboards, PET Bottles, and Plastic Bags.

(2) Flow Chart of Research Process

As shown in Fig.3, this research is divided into four main sections. In Fig.3 (left), the AIGC-based Model is derived mainly from the Stable Diffusion Dataset, which is generated from text prompts (i.e., txt2img). The first step in generating images with the required features is to capture images that match the requirements. A website with an img2prompt function (the online version of CLIP Interrogator in this research) is then used to extract prompt information from these images. CLIP (Contrastive Language-Image Pre-training) is a neural network trained on a variety of (image, text) pairs 4). It can be instructed in natural language to predict the most relevant text snippet for a given image without being directly optimized for the task, similar to the zero-shot capabilities of GPT-2 and GPT-3. It is worth mentioning that the prompts derived from CLIP Interrogator provide only approximate information. Prompt Engineering is therefore necessary to produce AIGC with more detailed information. In this research, after several AIGC samples produced through Prompt Engineering were compared, the keywords that led to reasonable results were identified (e.g., UAV, 8k, super detailed, and high resolution).

Annotations for the AIGC produced by Stable Diffusion (i.e., the txt2img function) are equally important for model training. All annotations for the AIGC were generated according to a public dataset-based garbage reorganization standard 5) with similar features. After collecting the AIGC and the corresponding annotations, the authors used Roboflow (an online platform for dataset pre-processing) to pre-process the AIGC-based Dataset.
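The automatic labeling step can be illustrated with a minimal sketch that converts detections from a pre-trained model into YOLO-format label lines. The class order, the tuple layout of a detection, and the `min_conf` filter are assumptions made for illustration; the original workflow does not specify them.

```python
from typing import List, Tuple

# Hypothetical class order; the four class names follow the targets named in this study.
CLASS_NAMES = ["Bike", "Cardboard", "PET Bottle", "Plastic Bag"]

# (class name, confidence, x_min, y_min, x_max, y_max), box in pixels
Detection = Tuple[str, float, float, float, float, float]


def to_yolo_lines(detections: List[Detection], img_w: int, img_h: int,
                  min_conf: float = 0.5) -> List[str]:
    """Convert pixel-space detections into YOLO label lines.

    Each line is "class_id cx cy w h", with the box center and size
    normalized by the image width and height.
    min_conf is an assumed filter for discarding weak pseudo-labels.
    """
    lines = []
    for name, conf, x1, y1, x2, y2 in detections:
        if conf < min_conf:
            continue  # skip low-confidence detections
        cx = (x1 + x2) / 2 / img_w
        cy = (y1 + y2) / 2 / img_h
        w = (x2 - x1) / img_w
        h = (y2 - y1) / img_h
        lines.append(f"{CLASS_NAMES.index(name)} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}")
    return lines
```

One label file per generated image, holding these lines, is the layout YOLOv5 expects alongside the image folder.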

In Fig.3 (right), the Real-World UAV-derived Images are separated into three parts (i.e., train, valid, and test). The 4cls RMD-based Trained Model is derived from the train and valid parts of this dataset. Notably, the annotations were based mainly on the actual condition of the on-site targets.
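A train/valid/test separation of this kind can be sketched as follows. The 70/20/10 ratio and the fixed seed are placeholders chosen for illustration, not the values used in this study.

```python
import random
from typing import Dict, List, Sequence


def split_dataset(items: Sequence[str],
                  ratios: Sequence[float] = (0.7, 0.2, 0.1),
                  seed: int = 0) -> Dict[str, List[str]]:
    """Shuffle items reproducibly and split them into train/valid/test parts.

    ratios are assumed values; the test part receives whatever remains
    after the train and valid parts are taken.
    """
    if len(ratios) != 3 or abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must be three values that sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG keeps the split repeatable
    n_train = round(len(shuffled) * ratios[0])
    n_valid = round(len(shuffled) * ratios[1])
    return {
        "train": shuffled[:n_train],
        "valid": shuffled[n_train:n_train + n_valid],
        "test": shuffled[n_train + n_valid:],
    }
```

Applying the same ratios to each class separately keeps the class balance uniform across the three parts.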

As shown in Fig.3 (bottom), several targets were extracted from the Real-World UAV-derived Images and combined with images containing no targets to generate images with Background Change. Because the Bikes targets were insufficient, a supplement to the Real World Dataset (i.e., Bikes) was necessary. After the annotations are generated, the AIGC + 4cls RMD-BC-based Model can be trained on the combination of the AIGC and the 4cls RMD-BC-based Dataset. As shown in Fig.3 (middle), each trained model is evaluated individually on the following three datasets: UAV-BD 6), UAV-PWD 7), and 4cls RMD (test part).

To clarify the structure of the Real World Dataset, Fig.4 explains the relationships among its sections. The Real-World UAV-derived Images have two sections (i.e., 4cls RMD with train/valid/test parts, and 4cls RMD-BC with background images and targets). In addition to these images, supplementary Targets (i.e., Bikes) are also included.

(3) Models

Stable Diffusion consists of three main components: the variational autoencoder (VAE), the U-Net, and an optional text encoder. The Stable-Diffusion-v1-5 checkpoint used in this research was initialized with the weights of the Stable-Diffusion-v1-2 checkpoint 8) and subsequently fine-tuned for 595k steps at a resolution of 512 px × 512 px on "laion-aesthetics v2 5+" (i.e., 600M image-text pairs with predicted aesthetics scores of 5 or higher in the LAION 5B dataset), with 10% dropping of the text-conditioning to improve classifier-free guidance sampling.

The You Only Look Once (YOLO) version 5 model (i.e., YOLOv5) is open-source software based on convolutional neural networks (CNNs) that offers strong detection accuracy at reasonable computational complexity. For these reasons, YOLOv5 was chosen as the object detection model in this work.

(4) Datasets for training/validation

The quality and quantity of the AIGC were controlled mainly through the model-related parameter settings in the Stable Diffusion web UI. These settings were adjusted mainly according to the total computation time and the available VRAM (i.e., GPU memory). Samples generated from the specified prompts are shown in Fig.5. As shown in Table 1, the prompts used in this research include four main components: subject, resolution, view angle, and area.
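To illustrate how a prompt might be assembled from the components listed above (subject, resolution, view angle, and area), the following sketch joins them into one comma-separated string. The function name, the component order, and the example subject wording are hypothetical; only the keywords UAV, 8k, super detailed, high resolution, and riparian area come from this study.

```python
from typing import Iterable


def build_prompt(subject: str, resolution: str, view_angle: str, area: str,
                 extra_keywords: Iterable[str] = ()) -> str:
    """Join prompt components into a single comma-separated txt2img prompt.

    Empty or whitespace-only components are skipped. The ordering
    (subject, view angle, area, resolution, extras) is an assumption.
    """
    parts = [subject, view_angle, area, resolution, *extra_keywords]
    return ", ".join(p.strip() for p in parts if p and p.strip())


# Example call; the subject phrase is illustrative only.
example_prompt = build_prompt(
    subject="plastic bags on the ground",
    resolution="8k, high resolution",
    view_angle="UAV view",
    area="riparian area",
    extra_keywords=["super detailed"],
)
```

Keeping the components separate makes it easy to vary one of them (for example the subject) while holding the others fixed across a batch of generated images.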

The images of the 4cls RMD were taken by multiple drones (i.e., Inspire2, Phantom4 Pro, Zenmuse X4s) with different sensors (i.e., Zenmuse X4s and Z3) over three riparian areas using multiple camera angles (i.e., 45°, 60°, 75°) and GSDs (i.e., 2, 3, and 4 cm). As shown in Fig.6, all four waste classes mentioned above are included in the sample images.
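For readers unfamiliar with GSD, the sketch below shows the usual relation between flight altitude and GSD for a nadir-looking camera. It is an approximation: with oblique camera angles such as those used here, the GSD varies across the image. The camera values in the example (13.2 mm sensor width, 8.8 mm focal length, 5472 px image width) are commonly quoted figures for a 1-inch-sensor drone camera and are assumed for illustration; they are not taken from this study.

```python
def ground_sample_distance_cm(sensor_width_mm: float, focal_length_mm: float,
                              altitude_m: float, image_width_px: int) -> float:
    """Nadir GSD in cm/pixel: (sensor width x altitude) / (focal length x image width)."""
    if min(sensor_width_mm, focal_length_mm, altitude_m, image_width_px) <= 0:
        raise ValueError("all inputs must be positive")
    return (sensor_width_mm * altitude_m * 100.0) / (focal_length_mm * image_width_px)


def altitude_for_gsd_m(gsd_cm: float, sensor_width_mm: float,
                       focal_length_mm: float, image_width_px: int) -> float:
    """Inverse relation: flight altitude in meters needed to reach a target nadir GSD."""
    if min(gsd_cm, sensor_width_mm, focal_length_mm, image_width_px) <= 0:
        raise ValueError("all inputs must be positive")
    return gsd_cm * focal_length_mm * image_width_px / (sensor_width_mm * 100.0)


# Assumed camera parameters (illustrative, not from the study).
gsd_at_100_m = ground_sample_distance_cm(13.2, 8.8, 100.0, 5472)
altitude_for_2_cm = altitude_for_gsd_m(2.0, 13.2, 8.8, 5472)
```

Because the relation is linear in altitude, halving the flight altitude halves the GSD for the same camera.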

As a supplement to the AIGC, the 4cls RMD-BC was built following the steps in Fig.7. First, all the Plastic Bags were extracted and their background was replaced with another UAV-derived image containing no Plastic Bags. Finally, the background-changed images were cropped into pieces, and the same operation was repeated for the other targets. As shown in Fig.8, thirteen kinds of backgrounds were collected for the supplement. It is worth mentioning that both natural and artificial environments were included in the dataset.
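The bookkeeping behind the background-change steps can be sketched as follows: a target pasted onto a new background gets a pixel box, and when the composite is cropped into pieces the box is re-expressed relative to each piece. Only the label geometry is shown, not the image compositing itself; the function names, the square tile shape, and the example sizes are assumptions.

```python
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def pasted_box(bg_w: int, bg_h: int, tgt_w: int, tgt_h: int,
               left: int, top: int) -> Box:
    """Pixel box of a target pasted at (left, top), clipped to the background."""
    x1, y1 = max(left, 0), max(top, 0)
    x2, y2 = min(left + tgt_w, bg_w), min(top + tgt_h, bg_h)
    if x2 <= x1 or y2 <= y1:
        raise ValueError("target lies entirely outside the background")
    return x1, y1, x2, y2


def box_in_tile(box: Box, tile_left: int, tile_top: int,
                tile_size: int) -> Optional[Tuple[float, float, float, float]]:
    """YOLO-style (cx, cy, w, h), normalized to a square tile.

    Returns None when the box does not overlap the tile.
    """
    x1 = max(box[0], tile_left) - tile_left
    y1 = max(box[1], tile_top) - tile_top
    x2 = min(box[2], tile_left + tile_size) - tile_left
    y2 = min(box[3], tile_top + tile_size) - tile_top
    if x2 <= x1 or y2 <= y1:
        return None
    return ((x1 + x2) / 2 / tile_size, (y1 + y2) / 2 / tile_size,
            (x2 - x1) / tile_size, (y2 - y1) / tile_size)
```

A box that straddles a tile border is clipped here; in practice one might instead drop boxes whose visible fraction is too small, which is a design choice this sketch leaves open.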

As displayed in Table 2 (1) & (2), three cases with specified numbers of images were considered in this research to confirm the effect of the AIGC on detection in the Real World Dataset. Case 1 and Case 2 include the Stable Diffusion Dataset, whereas Case 3 is derived entirely from the Real World Dataset.

(5) Model-related parameter setting

The parameter settings for Stable Diffusion and YOLOv5 are detailed in Table 3. Stable Diffusion uses the pre-trained model (i.e., v1-5-pruned-emaonly.safetensors) downloaded from Hugging Face, an American company that develops tools for building applications using machine learning.

(6) Evaluation method

As shown in Table 4, the binary confusion matrix has four entries: the numbers of true positive (TP) and true negative (TN) samples, which are those correctly detected as positive and negative, respectively, and the two error categories of false positive (FP) and false negative (FN) samples, which are the numbers of negatives incorrectly detected as positives and of positives incorrectly detected as negatives, respectively.

When using YOLOv5 to detect garbage, it is important to choose appropriate evaluation measures for the object detection task. As shown in Equations (1) & (2), both Precision and Recall should be considered as measures of whether the model can detect the garbage accurately; both values depend on the entries of Table 4. Equation (3) gives the harmonic mean of Precision and Recall (i.e., the F1 score), which is the main evaluation criterion in this research.
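As a minimal sketch, the three measures can be computed from the confusion-matrix counts as follows, using their standard definitions. Returning 0.0 when a denominator is zero is a convention assumed here.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, Recall, and F1 from true-positive, false-positive, and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of Precision and Recall
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1
```

TN does not appear in any of the three measures, which suits object detection, where the number of correctly ignored background regions is not well defined.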

The mean Average Precision (mAP) in Equation (4), which is derived from Precision and Recall, provides an overall assessment of how accurately and consistently YOLOv5 detects the garbage. mAP50 and mAP50-95 are two variants of the mAP metric, where the numbers indicate the Intersection over Union (IoU) threshold used for evaluation. mAP50 uses an IoU threshold of 0.5, while mAP50-95 averages over a range of IoU thresholds from 0.5 to 0.95.
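For reference, the standard forms of these measures are written out below, on the assumption that Equations (1) to (4) follow the conventional definitions. The symbols $N$ (number of classes) and $AP_i$ (Average Precision of class $i$, i.e., the area under its Precision-Recall curve) are notation introduced here.

```latex
\begin{align}
\mathrm{Precision} &= \frac{TP}{TP + FP} \tag{1} \\
\mathrm{Recall} &= \frac{TP}{TP + FN} \tag{2} \\
F1 &= \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{3} \\
\mathrm{mAP} &= \frac{1}{N} \sum_{i=1}^{N} AP_i \tag{4}
\end{align}
```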

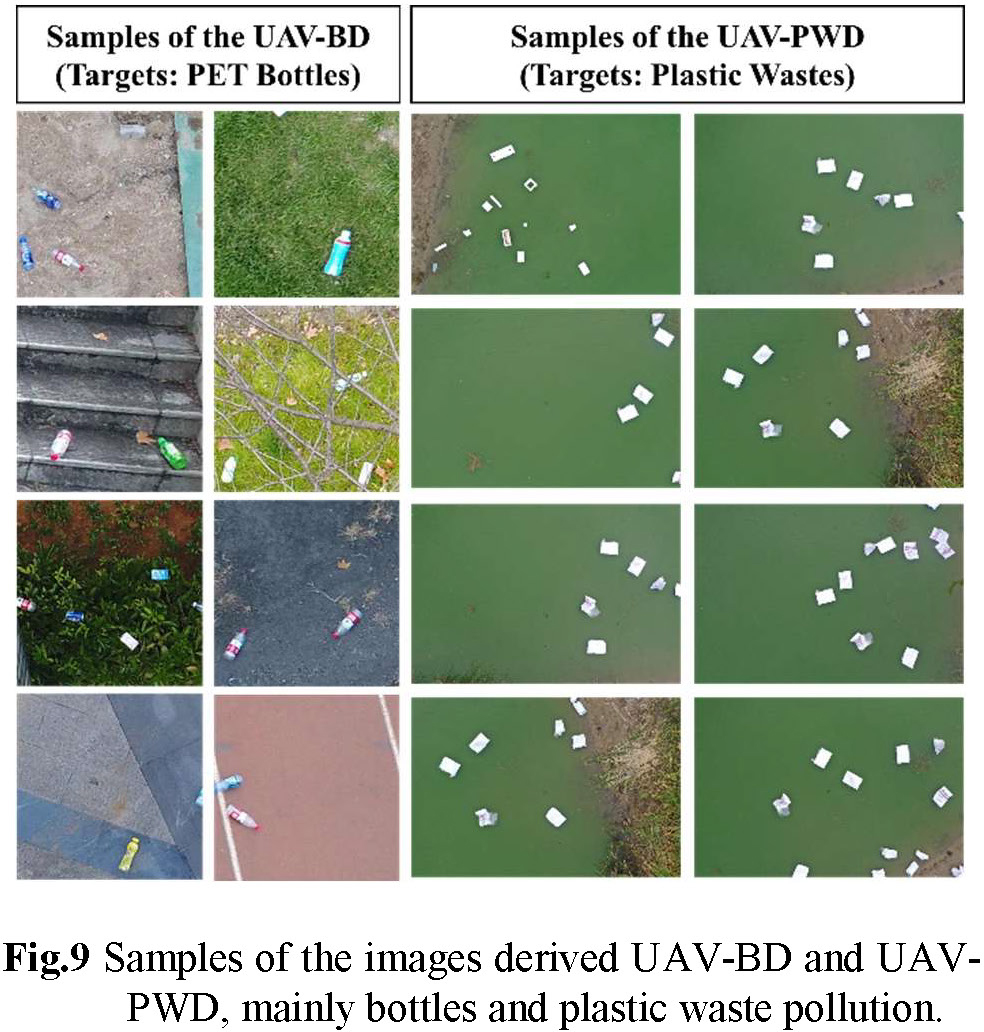

(7) Datasets for testing

In addition to the 4cls RMD (test part), two public datasets were prepared for testing. Fig.9 shows sample images from UAV-BD and UAV-PWD. The images in UAV-BD were collected over eight types of backgrounds (i.e., Ground, Step, Bush, Land, Lawn, Mixture, Sand, and Playground), whereas UAV-PWD has just one type of background (i.e., water area) without complex features. In other words, compared with the complicated colors and textures of the backgrounds in UAV-BD, UAV-PWD has a much simpler background. Based on the results from these two test datasets, this work can measure the ability of the AIGC-based models to detect targets in both simple and complex backgrounds.

This study mainly discusses waste pollution detection using UAVs aided by deep learning algorithms. The authors also explored the challenges of collecting and labeling training data for waste pollution detection models and introduced AIGC as a potential data source. Stable Diffusion, a text-to-image model, was used to generate images based on specified prompts.

The prompts were derived from existing images, and the AIGC was automatically labeled using a pre-trained object detection model. The generated dataset was then used to train object detection models for waste pollution detection. In summary, this study compares the performance of the AIGC-based Dataset with that of the Real World Dataset using benchmark datasets for evaluation.

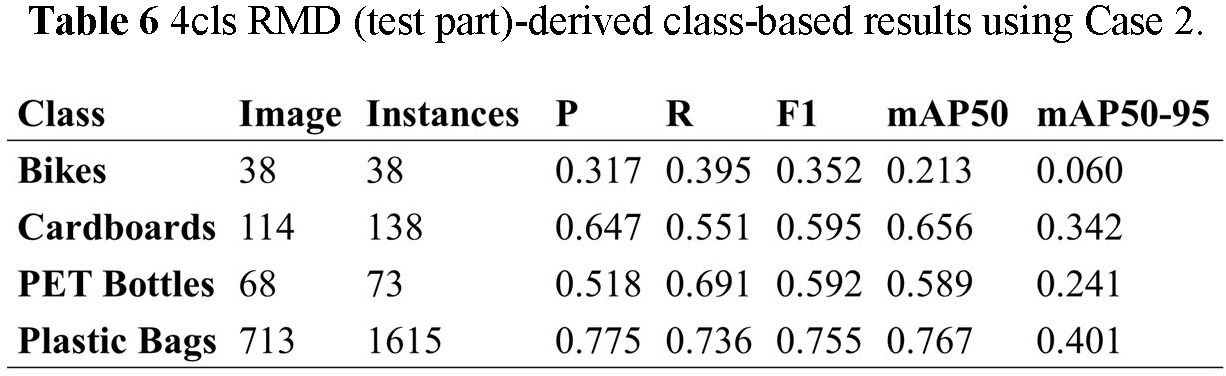

Table 5 presents the results of testing on the 4cls RMD (test part): Case 3 showed markedly higher accuracy (i.e., F1 value) than Cases 1 and 2, which are derived from AIGC. Case 2 improved on Case 1 because it uses the Real World Dataset with background change. As shown in Table 6, because the additional targets covered only a limited range of colors and shapes (i.e., Bikes), Bikes were detected with a comparatively low F1 value in Case 2.

On the other hand, the results in Table 5 for UAV-PWD and UAV-BD indicate that the AIGC-based Dataset (i.e., Cases 1 and 2) showed superior accuracy to Case 3 in detecting waste pollution on simple backgrounds (i.e., water area). For UAV-BD, Case 1 outperformed Case 2 in both Precision and Recall even though Case 2 uses more data. The additional background-change images in Case 2 contain almost the same targets (i.e., cropped images including Bikes, Cardboards, Plastic Bags, and PET Bottles), which reduced the F1 score of the trained model when detecting targets with complex features (i.e., different colors and complicated shapes). Generally speaking, if the background of the test dataset is simple, adding more training targets, even similar ones, can improve the F1 score. Conversely, the more complex the features of the targets in the test dataset, the more data with complex features need to be added to the training dataset.

In this study, using the AIGC was shown to support (i.e., replace or enhance), to some extent, UAV-based real-world waste pollution detection tasks. In particular, with the assistance of Prompt Engineering, images with specified targets can be generated purposefully. However, some limitations remain unsolved. The pre-trained model used to generate annotations for the AIGC was trained on just one dataset with specific features, so the generated annotations are derived entirely from the features of that dataset. Consequently, if the pre-trained model is changed, the generated annotations may become an unstable factor in training a model on the AIGC.

In conclusion, without UAV flights or manual annotation for the train/valid dataset, the AIGC in this work can be applied to riparian monitoring tasks that detect waste pollution in simple backgrounds, with a comparably high F1 value. The AIGC is more efficient than UAV- or vehicle-based data collection and can also reduce the burden on professional civil engineering staff.

In the near future, more detailed and accurate prompts that can increase the accuracy of detecting targets in complex backgrounds are expected to be applied in practical riparian monitoring tasks.

Fig.10 shows rocks, concrete blocks, and electric wire protectors misclassified as waste. This indicates a limitation of the AIGC-based Dataset: if non-waste targets with waste-like outlines in the test datasets were not included in training, it is difficult for the trained model to distinguish waste from non-waste targets. For this reason, future work needs to supplement the dataset with images of objects that have waste-like outlines.

To examine whether the F1 value obtained from the AIGC can be improved by using a lower GSD value, the 1.5 cm GSD 4cls RMD, which contains more detailed information, was used for confirmation. As shown in Table 7, waste pollution samples in the 1.5 cm GSD 4cls RMD with a 90° camera angle were inferred by Case 1. Except for the Bikes class, all targets were detected with an F1 value of almost 1.0 using an IoU threshold of 0.45 and a Confidence threshold of 0.1. The misdetection of Bikes is mainly attributable to the prompts, and the results can be improved if more detailed prompts are used.

As shown in Fig.11, although the Bike as a whole was not detected with the IoU and Confidence thresholds mentioned above, the tire part was detected with a Confidence of 0.3. Based on this, the Bike class could be annotated part by part to increase accuracy, and accuracy could also be improved by changing the IoU value from the default used in this study (i.e., 0.45) to a lower value. As shown in Fig.12, all the plastic wastes were detected; on the other hand, Fig.13 shows several undetected wastes in the groups 4_Land, 5_Step, 6_Mixture, and 7_Ground. Correspondingly, as displayed in Table 8, the F1 values of all the groups with undetected bottles are lower than 0.7. Wastes in natural or near-natural backgrounds can be detected with a comparatively high F1 value using the prompt in this study (i.e., riparian area). In the future, it is necessary to systematically expand the scope of the prompts for the AIGC in order to broaden its application.
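The interplay of the two thresholds can be illustrated with a simplified sketch of confidence filtering followed by greedy non-maximum suppression (NMS). This assumes that the IoU value of 0.45 refers to the NMS threshold applied at inference; the sketch is class-agnostic and is not the YOLOv5 implementation itself.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets: List[Tuple[Box, float]],
                      conf_thres: float = 0.1,
                      iou_thres: float = 0.45) -> List[Tuple[Box, float]]:
    """Drop detections below conf_thres, then apply greedy NMS.

    Detections are visited in order of decreasing confidence; one is kept
    only if its IoU with every already-kept box is at most iou_thres.
    """
    candidates = sorted((d for d in dets if d[1] >= conf_thres),
                        key=lambda d: d[1], reverse=True)
    kept: List[Tuple[Box, float]] = []
    for box, conf in candidates:
        if all(iou(box, k[0]) <= iou_thres for k in kept):
            kept.append((box, conf))
    return kept
```

With a Confidence threshold of 0.1, a detection scored at 0.3 (such as the tire part described above) passes the first filter, whereas it would be discarded at a threshold of 0.5.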

This research was supported in part by the Electric Technology Research Foundation of Chugoku. The 4cls RMD (train, valid, and test parts) was provided by Prof. Nishiyama from Okayama University.