2023 Volume 11 Issue 3 Pages 116-131


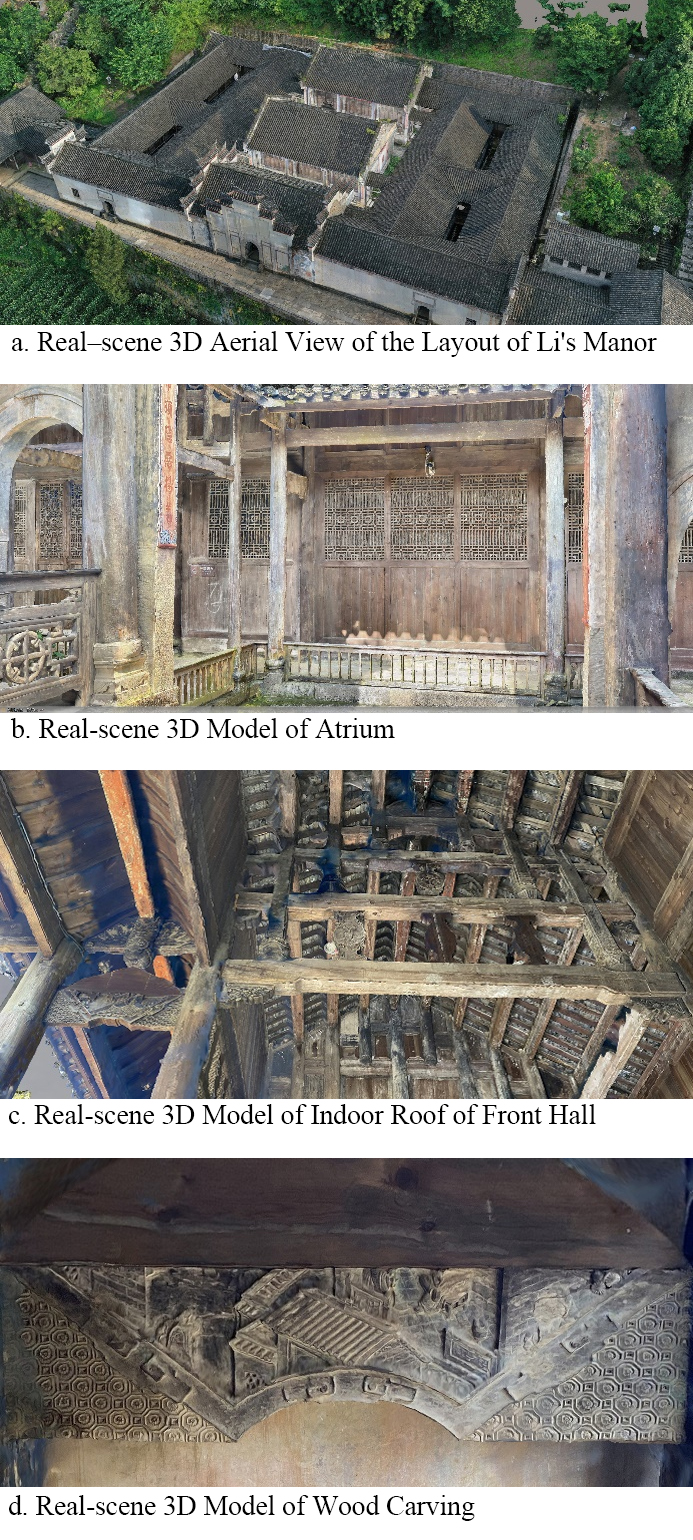

3D models of real-world scenes have become an important new tool in China, with widespread applications in cultural heritage preservation and the restoration of ancient villages. However, because of the diverse and complex classical architectural forms and layouts of traditional Chinese gardens, reconstructing sophisticated digital models is challenging, and the integration of interior and exterior architectural models is often unsatisfactory. This paper takes the architectural group of Li's Manor, a major historical and cultural site protected at the national level in Lichuan City, Hubei Province, as an example. A spatial point cloud was generated using oblique photography and virtual control points to accurately fuse multi-source image data, and a 3D model of the indoor and outdoor real scenes of the complex ancient buildings was constructed to establish the entire real-scene 3D model of the manor and its garden. The technical solution can effectively fuse image data from different sources and at different resolutions, thus improving the precision and accuracy of the images and reducing blurring and distortion. By fusing images from multiple sources, data duplication and storage requirements can be reduced, and the efficiency of data utilisation and the accuracy of analysis results can be improved. The approach reduces the cost of equipment use, improves the efficiency of data acquisition, and provides a reference for the reconstruction of complex digital models of ancient gardens.

In February 2022, the General Office of the Ministry of Natural Resources of the People's Republic of China issued the Notice on Comprehensively Promoting the Construction of Real-scene 3D China (Zhao, 2022). Real-scene 3D, as real, three-dimensional, time-sequenced spatio-temporal information reflecting human production, living, and ecological space, is an important new national infrastructure and provides a unified spatial positioning framework and analysis basis for Digital China. It is an important strategic data resource and production factor of digital government and the digital economy, and it has been included in the "14th Five-Year Plan" for the protection and utilization of natural resources (Zhao, 2022). In the context of globalised urban competition, historical sites are also potential sources of economic growth (Wang, Zhang et al., 2023). Digital processing of cultural relics is one of the ways to protect them. In the past, the protection of historical buildings mainly involved recording the two-dimensional information of traditional buildings with CAD, which made the records difficult to survey and organise (Bai, Zhang et al., 2016). Traditional drawing methods rely heavily on personal experience and on-the-spot judgment and cannot accurately depict specific components, especially irregularly shaped and complex components with detailed spatial characteristics (Zhang, Ying et al., 2015). By contrast, the fine detail of a 3D model can provide accurate basic data for historical research and cultural relic restoration (Han and Li, 2020).

Oblique photography is a three-dimensional reconstruction technology based on photographs. Many studies have examined its application. For example, Yu, B., Chen et al. (2017) elaborated on the real-scene 3D model reconstruction approach for large single cultural relics in the reconstruction of large immovable cultural relics and put forward evaluation criteria for model accuracy and usability. Tan, Li et al. (2016) introduced an improved method for urban 3D modeling with UAV oblique photography and effectively combined oblique photography with MAX and other technologies for urban 3D modeling. Li, Xingyu, He et al. (2021) proposed technical methods of low-altitude oblique photography and real-scene 3D modelling for the complex environmental conditions of plateau mountainous areas.

Currently, there are three commonly used ways to reconstruct real-scene 3D models of ancient buildings. i) The first uses LiDAR scanning equipment to obtain point cloud data of the building and then generates a 3D model from it (Maas, 1999). ii) The second uses low-altitude UAV aerial photography to obtain oblique images of the building and then generates a real-scene model. Cheng and Feng (2018) introduced the application methods and prospects of low-altitude oblique photography in landscape architecture, applying low-altitude multi-rotor UAV aerial survey to field observation in the early stage of landscape architecture planning and design, and Yang, Yang, Tang et al. (2021) studied traditional settlement investigation and its application prospects based on UAV low-altitude aerial photography and aerial survey technology, taking Laodongmen in Nanjing as an example. However, it is generally difficult to obtain complete images inside buildings, and too many images can easily cause 3D modeling to fail. iii) The third combines oblique photography and LiDAR scanning. For the rockeries and ponds in classical Chinese gardens, Yu, M. and Lin (2017) described in detail the process of using this method to generate 3D models of rocks, which is currently a reliable way to obtain finely detailed 3D models.

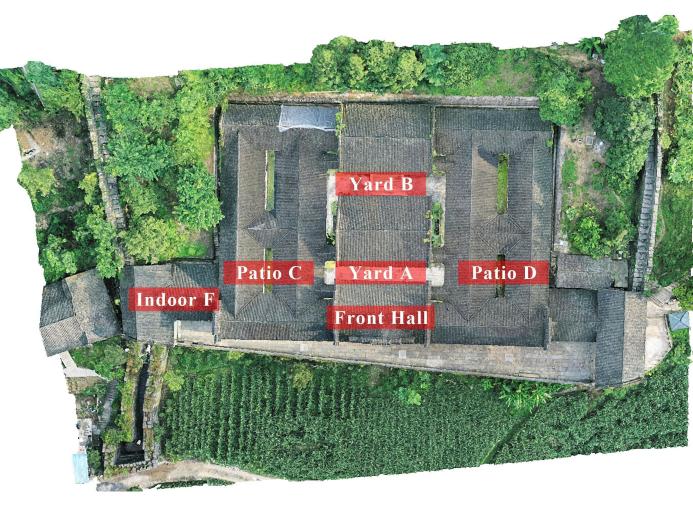

Since the second approach has the lowest equipment cost, which favours wider adoption of the technical solution, this paper optimizes on that basis, in order to support the construction of the information infrastructure of national key cultural and museum units and to strengthen new infrastructure in the field of cultural relics. The study focuses on Li's Manor in Lichuan City, Hubei Province, a Major Historical and Cultural Site Protected at the National Level. Li's Manor covers an area of about 8,000 square meters and has a complete set of buildings, characterized by southern wooden architecture. Generating a refined digital 3D model of Li's Manor is challenging, mainly due to the complex internal spatial composition, tight wooden structures, exquisite wood carvings, and complex patterns. This paper uses a variety of portable devices to fully capture building images and uses virtual control points to fuse multi-source images, solving the problem of poor fusion of indoor and outdoor data and providing a feasible approach for the future reconstruction of ancient gardens and buildings, and even the restoration of ancient villages.

There are many software programs that can generate real-scene 3D models through images. The currently popular software platforms include ContextCapture Center, Agisoft Metashape, and Pix4Dmapper (Yang, Yunfeng, Wei et al., 2020).

ContextCapture Center software can process multi-source photographic data from digital cameras, aerial photos, and LiDAR, and automatically and quickly generate multi-resolution real-scene 3D models of any size and accuracy (Bentley, 2021). With the help of 3D machine learning technology, the software can automatically detect, locate, and classify real-scene data. It can generate 3D models in various GIS formats and use them for design, construction, and operation workflows.

Agisoft Metashape can process images from RGB or multispectral cameras (including multi-camera systems) into high-value spatial information in the form of dense point clouds, textured polygon models, geo-referenced true mosaics, and digital surface or digital terrain models (DSMs/DTMs). The software system is designed to rely on machine learning techniques to provide industry-specific results for post-processing and analysis tasks. It can be applied in GIS applications, cultural heritage documentation, visual effects production, as well as indirect measurements of objects at various scales (Agisoft, 2023).

Pix4Dmapper is a professional aerial photogrammetry software. It can realize the automatic aerial survey function of UAVs and integrate fully automatic, fast, professional accuracy, and other functions to quickly generate an accurate report. It converts the original aerial images into digital orthomosaic or digital elevation model (DOM/DEM) data that can be read by any professional GIS software, and quickly produces professional and accurate 2D maps and 3D models (Pix4dmapper, 2023).

In the early stage of the project, the shadowless pagoda in Wuchang District, Wuhan City, was used as the basic experimental data, and the performance of the above software platforms was compared. It was concluded that Agisoft Metashape has the highest accuracy of aerial triangulation and image utilization, and the ContextCapture Center model has the highest generation accuracy and the best texture mapping quality. Therefore, Agisoft Metashape was used for aerial triangulation and calculation in the production of the real-scene 3D model of the buildings in Li's Manor, and then ContextCapture Center was employed to generate the grid model and texture mapping based on this.

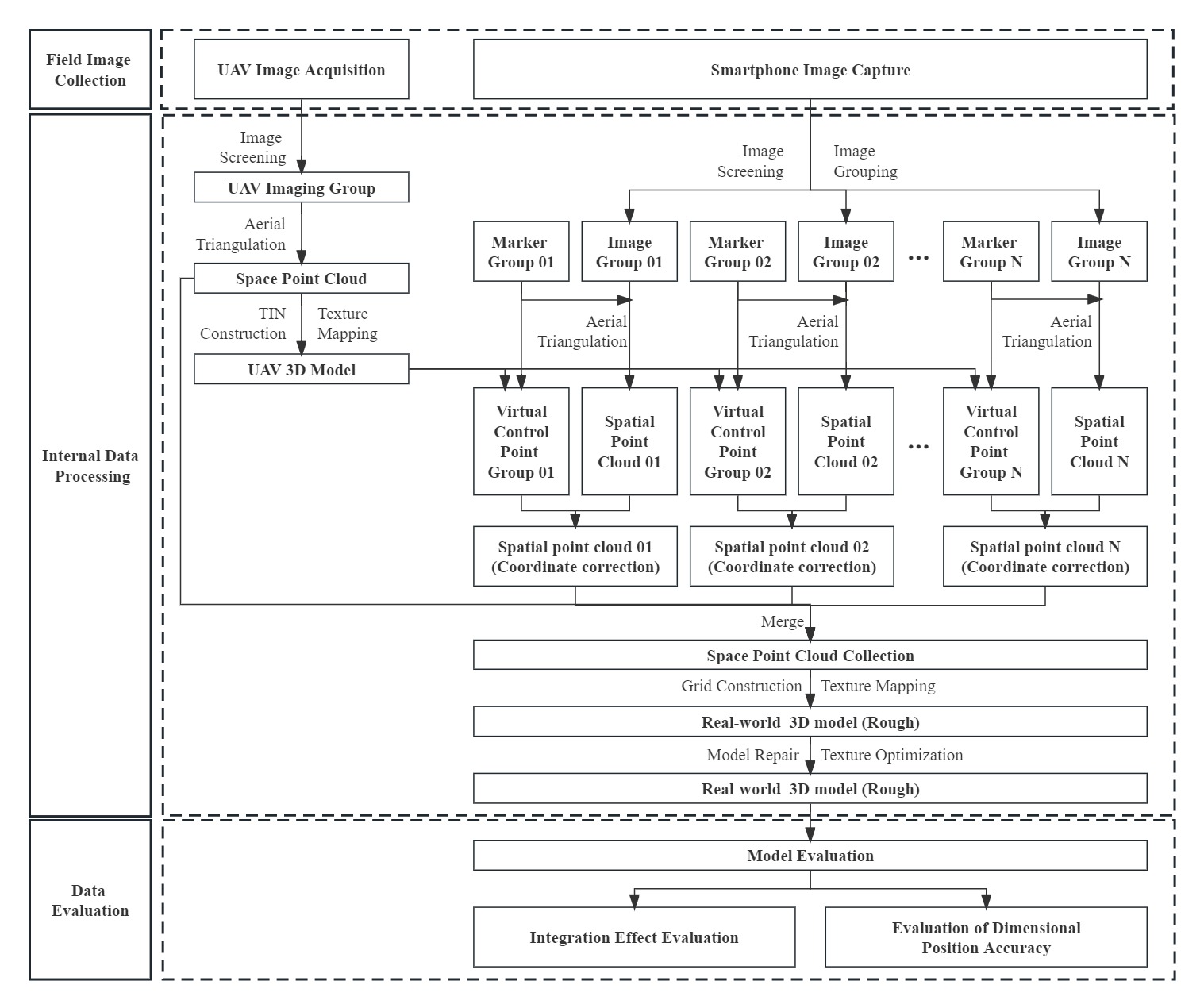

Technical Process and Data Acquisition for 3D Model Reconstruction

Technical Process

The real-scene 3D model reconstruction of the buildings in Li's Manor was mainly conducted in three stages: field data collection, internal data processing, and data evaluation (as shown in Figure 1). Field image collection involved gathering detailed images and other data of objects using relevant equipment in the field. Internal data processing involved processing the collected basic image data to establish a real-scene 3D model. Data evaluation included assessing the built 3D model and judging its accuracy.

Field data collection is a crucial step in the entire 3D model reconstruction process, and the quality of the data directly determines the quality of the final results. The image acquisition tools mainly include DJI UAV Yu-2pro, Huawei Mate20, iPad Pro (11-inch), iPhone 11 Pro Max, iPhone 12, infrared rangefinder, fill light, and other devices. Outdoor low-altitude image acquisition is greatly affected by the weather, and the following principles were generally followed: i) Avoid collecting images in rainy, snowy, smoky, dusty conditions, etc.; ii) Collect images between 9:00 and 15:00 on cloudy days when the light is soft and bright; iii) Collect images two hours before sunset on sunny days. During this time, the sunlight gradually softens, and the shadow boundary of the building gradually weakens.

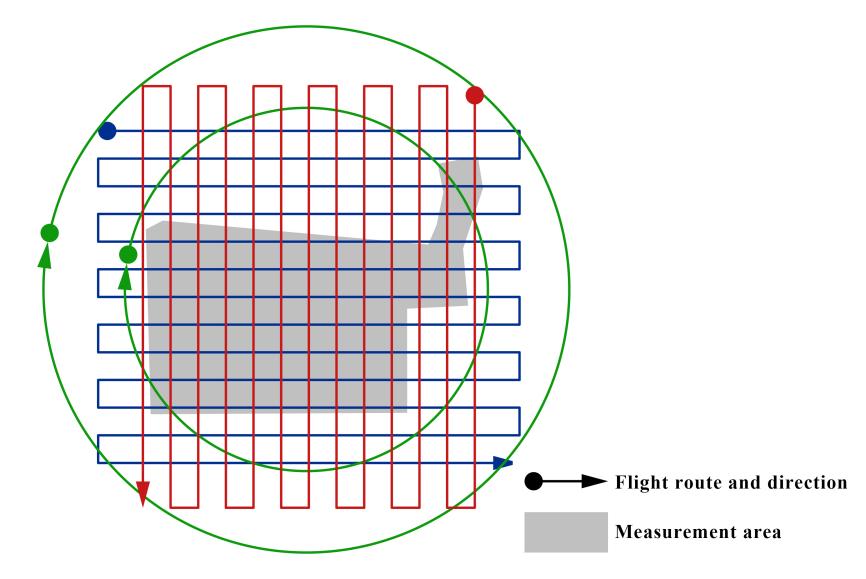

DJI UAV Yu-2pro was primarily used to capture images of building roofs, ground, and surroundings at a low altitude. Before aerial photography, it is necessary to define the photographic area and design the route. The photographic area is an area surrounded by the outer contour of the building extending outward by about 2m. The forward overlap of the route design is generally 65% to 75%, and the side overlap is generally 30% to 45%. However, for densely built areas, the overlap design should be appropriately increased (Li, Xilin, Li et al., 2022). The relative altitude and image accuracy of the route must meet the following formula:

$$D = \frac{P \cdot f \cdot W}{L}$$

where D represents the shooting distance (m), P represents image accuracy (m/px), f indicates the lens focal length (mm), W represents the maximum image width (px), and L indicates the maximum width of the lens sensor (mm).

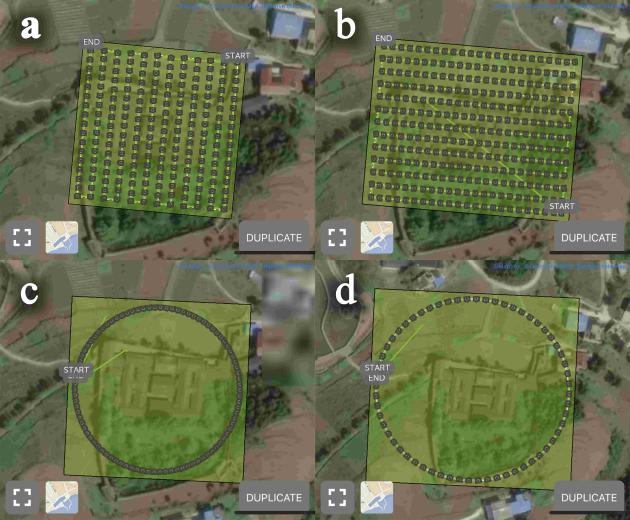

According to the relevant lens data, the sensor of the DJI UAV Yu-2pro was 1 inch in size, with a maximum sensor width of 13.2mm and a maximum image width of 5,472px. The Huawei Mate20 was taken as an example of a mobile phone device: its sensor size was 1/1.73 inch, the maximum sensor width was about 7.4mm, and the maximum image width was 4608px. In this experiment, the required image accuracy was 0.005m/px. Therefore, based on the formula, the relative flight height of the UAV had to be about 21.7m, and the Huawei Mate20 had to be about 7m away from the subject. However, due to the complex environment around the site, the flight height of the UAV was set at 35m for flight safety. Although Agisoft Metashape can connect images of different accuracy for production, too large a difference in image accuracy can lead to distortion or failure of the produced images. Therefore, "interpolated images" were required for transition processing; they were used to fill the gap between changes in image accuracy. The UAV route designed on this basis is shown in Figure 2. The PIX4Dcapture ground control station (GCS) program was used to control the UAV flight route. The forward and side overlap of the rectangular grid flight paths in Figure 3a and Figure 3b are both 80%, and the angle between the lens and the horizontal is 45°. The photo clamping angles of the circular surrounds in Figure 3c and Figure 3d are 4° and 6°, respectively.
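The relation between shooting distance and image accuracy can be sketched in a few lines of Python. This is a minimal illustration that assumes the standard pinhole relation D = P·f·W / L, using the symbols defined above. The sensor widths and image widths come from the text, but the focal length is not stated there, so the value in the example (10.26 mm) is an assumption and the result need not match the 21.7 m quoted in the text exactly.

```python
def shooting_distance(p_m_per_px, focal_mm, image_width_px, sensor_width_mm):
    """Shooting distance D (m) needed to reach image accuracy P (m/px)."""
    return p_m_per_px * focal_mm * image_width_px / sensor_width_mm


def image_accuracy(distance_m, focal_mm, image_width_px, sensor_width_mm):
    """Image accuracy P (m/px) obtained at shooting distance D (m)."""
    return distance_m * sensor_width_mm / (focal_mm * image_width_px)


if __name__ == "__main__":
    # Sensor width and image width of the UAV camera are taken from the text.
    # ASSUMPTION: the focal length is not given in the text; 10.26 mm is
    # used here purely for illustration.
    assumed_focal_mm = 10.26
    d = shooting_distance(0.005, assumed_focal_mm, 5472, 13.2)
    p_at_35m = image_accuracy(35.0, assumed_focal_mm, 5472, 13.2)
    print(f"distance for 0.005 m/px: {d:.2f} m")
    print(f"image accuracy at 35 m:  {p_at_35m:.4f} m/px")
```

The second function makes the consequence of flying at 35m explicit: the image accuracy becomes coarser than the 0.005m/px target, which is why transition images between the UAV and handheld data sets are needed.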

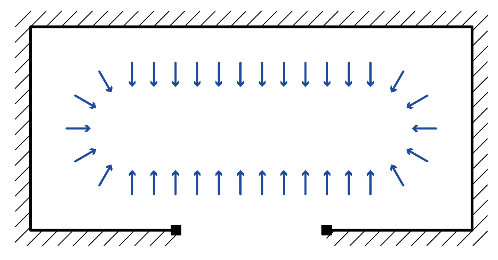

Other mobile devices were used to capture images of building yards, patios, and some interior spaces. The collection generally followed these principles (Bentley, 2021): i) Ensure an overlap of more than 60% between adjacent images, generally not exceeding 90%; ii) Use lenses with fixed focal lengths and avoid digital zoom; iii) Use the same device to capture images in the same space; iv) Images should be clear, with moderate contrast and soft tones. The interior images of the building were captured as shown in Figure 4. All interior elevations needed to be covered. The images of the building’s patio and yards were captured as shown in Figure 5. Due to the limitation of spatial distance, multi-layer acquisition was required to ensure image accuracy during the transition. When using a handheld device, it was necessary to capture images from three angles at the same collection point because of the limited shooting height. The specific operation mode is shown in Figure 6.

The total number of images collected from the building complex of Li’s Manor in this test was 6,973, including 963 images collected by the UAV and 6,010 images collected by handheld devices.

Note: The arrow indicates the shooting direction.


Based on the data collection described above, internal data processing is carried out to obtain a realistic 3D model. Internal data processing comprises four parts: image data pre-processing, aerial triangulation, virtual control point fusion, and 3D model generation.

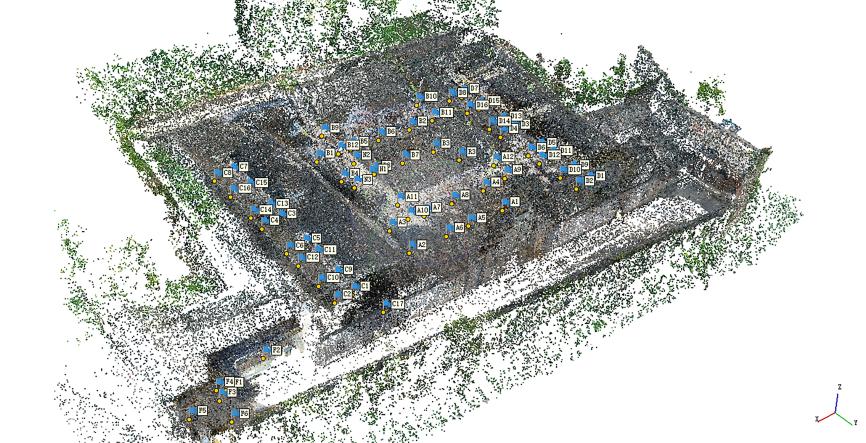

Image Data Pre-processing

Image data pre-processing involves two main steps: data screening and data classification. Data screening aims to remove images that may reduce production efficiency or fail to meet production requirements, such as blurry images, over or underexposed images, excessive repetition, and colour distortion. Given the large number of photos, manual screening alone would be a burdensome task. Therefore, in this experiment, the image quality evaluation tool in Agisoft Metashape software was utilized to automatically assess image quality and assign a score ranging from 0 to 1. Images with a quality score below 0.5 were excluded. After quality evaluation, 5,734 images met the requirements. Data classification involved grouping images based on the specific area type within the building space, such as yards, patios, and indoor areas. The specific classification is shown in Figure 7. The corresponding number of images for each area is presented in Table 1.
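The screening rule can be illustrated with a short Python sketch. It assumes the quality scores have already been exported from the software into a plain mapping of file name to score; the export step is not shown, and the file names and scores below are hypothetical.

```python
def screen_images(quality_scores, threshold=0.5):
    """Split images into kept and rejected lists by quality score.

    Images scoring below the threshold are rejected, matching the
    rule described in the text (scores range from 0 to 1).
    """
    kept = sorted(n for n, q in quality_scores.items() if q >= threshold)
    rejected = sorted(n for n, q in quality_scores.items() if q < threshold)
    return kept, rejected


if __name__ == "__main__":
    # Hypothetical file names and scores, for illustration only.
    scores = {
        "yard_a_0001.jpg": 0.82,
        "yard_a_0002.jpg": 0.47,  # blurry, below threshold
        "patio_c_0001.jpg": 0.50,
        "indoor_f_0001.jpg": 0.31,  # underexposed, below threshold
    }
    kept, rejected = screen_images(scores)
    print("kept:", kept)
    print("rejected:", rejected)
```

A score exactly equal to 0.5 is kept here, since the text only excludes scores below the threshold.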

| Area | Low altitude | Front Hall | Yard A | Yard B | Patio C | Patio D | Indoor F | Total |
|---|---|---|---|---|---|---|---|---|
| Quantity (images) | 836 | 165 | 1736 | 1539 | 493 | 737 | 228 | 5734 |

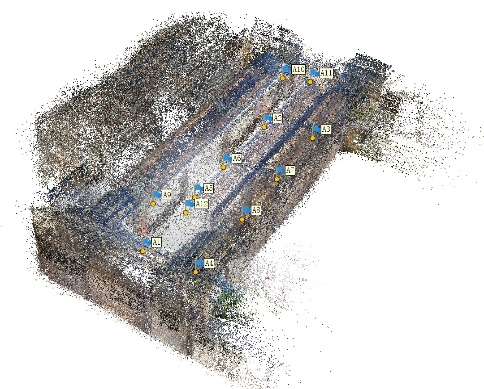

Aerial triangulation involves the relative orientation of all images to form a free network, and then the absolute orientation with ground control points to determine the position and attitude of each image (Duan, 2019). Based on the aforementioned grouping, the low-altitude group images directly generated sparse point clouds, as shown in Figure 8. Marker points were necessary for the other groups. Marker points served two purposes: firstly, to establish connections between images during aerial triangulation, thereby enhancing the accuracy of image connections and the quality of the generated results; secondly, to serve as connection points for point cloud fusion, and the selection of marker points directly influenced the quality of point cloud fusion. Therefore, the following principles were generally followed when adding marker points: i) Preference was given to building corner points as marker points to ensure their clear visibility in the image; ii) The points should be clearly and accurately represented in the 3D model generated from low-altitude images; iii) The minimum number of marker points should be 3, and they should not lie on the same straight line.
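Principle iii) can be checked automatically before fusion. The sketch below is an illustration, not part of the original workflow: it assumes marker coordinates are available as (x, y, z) tuples in a metric coordinate system, and the tolerance value is an arbitrary assumption.

```python
import math
from itertools import combinations


def _cross(u, v):
    """Cross product of two 3D vectors."""
    return (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )


def markers_are_usable(points, tol=1e-6):
    """Return True if there are at least 3 markers that are not all collinear.

    Three points are collinear when the cross product of the two edge
    vectors sharing a vertex has (near) zero length.
    """
    if len(points) < 3:
        return False
    for a, b, c in combinations(points, 3):
        u = tuple(b[i] - a[i] for i in range(3))
        v = tuple(c[i] - a[i] for i in range(3))
        if math.sqrt(sum(x * x for x in _cross(u, v))) > tol:
            return True
    return False


if __name__ == "__main__":
    print(markers_are_usable([(0, 0, 0), (1, 0, 0), (0, 1, 0)]))
    print(markers_are_usable([(0, 0, 0), (1, 1, 1), (2, 2, 2)]))
```

Non-collinearity matters because three points on one line leave the rotation about that line undetermined, so the point cloud could not be oriented uniquely.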

Taking the image of Yard A as an example, 12 marker points were added to the images of Yard A, and their positions and the resulting sparse point cloud position are shown in Figure 9. Other areas were treated in the same way as Yard A. The number and position of added markers were set according to the spatial complexity of each area.

Although the devices used for image acquisition were equipped with GPS, the positioning accuracy often differed greatly between devices because of the large variety of devices used in this experiment. This led to large differences in the spatial location of the sparse point clouds generated from images acquired by different devices in the same coordinate system. To reduce the error of spatial point cloud fusion, it was necessary to set a target point cloud position as a reference standard. Therefore, a point acquired on the target point cloud was defined as a "target point" and a point acquired on the point cloud to be adjusted was defined as a "marker point". The spatial position of the point cloud was corrected by replacing the coordinate value of the marker point with the coordinate value of the target point. The virtual control point was generated by assigning the coordinates of the target point to the marker point with the same name. The generation of virtual control points should follow these principles: i) When extracting the coordinate values of the target points, they should be in the same coordinate system environment; ii) The naming rules of the coordinate values should be the same as those of the marker points. Taking Yard A as an example, the sparse point cloud at low altitude was first imported into ContextCapture Center software to generate a 3D model with default values, as shown in Figure 10. Then the coordinate values of the target points corresponding to the marker points of Yard A were extracted from the low-altitude model (Table 2). Finally, the coordinate values were imported into the marker points to generate the virtual control points, and the spatial position of the point cloud of Yard A was corrected.
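The two principles for generating virtual control points amount to a name-based join with a consistency check. The following Python sketch illustrates that bookkeeping only; it does not reproduce what the photogrammetry software does internally. The `TargetPoint` type, the function name, and the coordinate system label are hypothetical, while the coordinates of A1 and A2 are taken from Table 2.

```python
from typing import NamedTuple


class TargetPoint(NamedTuple):
    name: str   # must follow the same naming rule as the marker points
    crs: str    # coordinate system label (hypothetical identifier)
    lon: float
    lat: float
    alt: float


def build_virtual_control_points(marker_names, targets):
    """Assign target coordinates to the markers that share their name.

    Returns (matched, unmatched): a dict of marker name -> coordinates,
    and the list of markers that have no target point.
    """
    # Principle i): all target points must share one coordinate system.
    systems = {t.crs for t in targets}
    if len(systems) > 1:
        raise ValueError(f"mixed coordinate systems: {sorted(systems)}")
    # Principle ii): markers and targets are matched by identical names.
    by_name = {t.name: (t.lon, t.lat, t.alt) for t in targets}
    matched = {n: by_name[n] for n in marker_names if n in by_name}
    unmatched = [n for n in marker_names if n not in by_name]
    return matched, unmatched


if __name__ == "__main__":
    targets = [
        TargetPoint("A1", "low-altitude-model", 109.0330440, 30.5777087, 955.258),
        TargetPoint("A2", "low-altitude-model", 109.0332087, 30.5776968, 955.260),
    ]
    matched, unmatched = build_virtual_control_points(["A1", "A2", "A3"], targets)
    print(matched)
    print("markers without a target point:", unmatched)
```

Reporting unmatched markers explicitly helps catch naming mistakes before the coordinates are imported.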

| Target point | Longitude (°) | Latitude (°) | Altitude (m) |
|---|---|---|---|
| A1 | 109.0330440 | 30.5777087 | 955.258 |
| A2 | 109.0332087 | 30.5776968 | 955.260 |
| A3 | 109.0332051 | 30.5776612 | 955.993 |
| A4 | 109.0330396 | 30.5776731 | 955.936 |
| A5 | 109.0331072 | 30.5777045 | 955.287 |
| A6 | 109.0331463 | 30.5777019 | 955.304 |
| A7 | 109.0331469 | 30.5776652 | 955.959 |
| A8 | 109.0330975 | 30.5776685 | 955.953 |
| A9 | 109.0330423 | 30.5777012 | 959.896 |
| A10 | 109.0332084 | 30.5776887 | 959.989 |
| A11 | 109.0332062 | 30.5776695 | 960.548 |
| A12 | 109.0330396 | 30.5776808 | 960.534 |
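Because the target points are listed in degrees, it can help to convert them to local metric offsets when checking spacing or plausibility. The sketch below is an illustration under stated assumptions: it treats the values as longitude and latitude on a sphere of mean radius 6,371km and uses a local equirectangular approximation, which is adequate only over the few tens of metres spanned by a courtyard. The coordinate system actually used is not stated in the text.

```python
import math

# ASSUMPTION: spherical Earth with mean radius; adequate for short distances.
EARTH_RADIUS_M = 6371000.0


def to_local_metres(point, ref):
    """Convert (lon, lat, alt) to (east, north, up) offsets in metres from ref."""
    lon, lat, alt = point
    ref_lon, ref_lat, ref_alt = ref
    east = math.radians(lon - ref_lon) * EARTH_RADIUS_M * math.cos(math.radians(ref_lat))
    north = math.radians(lat - ref_lat) * EARTH_RADIUS_M
    up = alt - ref_alt
    return east, north, up


if __name__ == "__main__":
    # A1 and A2 from the table of target points.
    a1 = (109.0330440, 30.5777087, 955.258)
    a2 = (109.0332087, 30.5776968, 955.260)
    east, north, up = to_local_metres(a2, a1)
    horizontal = math.hypot(east, north)
    print(f"A1 -> A2: east {east:.2f} m, north {north:.2f} m, up {up:.3f} m")
    print(f"horizontal distance: {horizontal:.2f} m")
```

For survey-grade work, a proper projected coordinate system would be preferable to this approximation.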

After the coordinate correction was completed, the low-altitude point cloud and the point cloud of Yard A were fused. The fusion effect is shown in Figure 11, and the left figure shows the fusion effect before the correction of the coordinates of Yard A. The position of the point cloud is perpendicular to that of the low-altitude point cloud, which does not conform to the position in the real space. The right figure shows the fusion effect after coordinate correction. The positions of the two conform to the position in the real space and meet the production requirements. In the same way, the coordinates of buildings in other areas of Li's Manor were corrected and fused, and the final effect is shown in Figure 12.

According to the conclusions of the previous comparative test, ContextCapture Center is superior to similar software in processing model texture fineness. Therefore, the model generation process was completed using this platform. Firstly, the point cloud fusion result in Agisoft Metashape needed to be imported into ContextCapture Center as the initial data. Then, the "Target RAM Usage" value should be set according to the hardware of the computer, and the software would automatically split the model into multiple tiles. Finally, the production purpose for the 3D model with texture was selected. After a period of calculation, a complete real-scene 3D model can be obtained.

Real-scene 3D model of Li’s Manor

Due to the compact and complex architectural structure of the buildings in Li's Manor, issues such as grid holes, deformation, and texture distortion occurred in local areas after the reconstruction of the real-scene 3D model was completed. The built-in repair tool of ContextCapture Center can quickly handle most of the model's problems. However, to fine-tune the model's mesh and texture, it is necessary to use third-party 3D modelling software, such as 3ds Max, C4D, or Blender. The repaired real-scene 3D model is shown in Figure 13.

The real-scene 3D model of the buildings in Li's Manor, produced using multi-source image fusion technology, has high accuracy, and it can better generate the texture of the building walls and wood grain. In order to verify the dimensional accuracy of the 3D model, several representative areas were selected, and the actual dimensions of the buildings were measured and compared with the dimensions measured on the model. The obtained errors are shown in Table 3. It can be seen that the relative error accuracy of the reconstruction results ranges between 1:450 and 1:1200, which can better meet the needs of research on ancient architectural relics.

| Position | Actual measurement (mm) | Model measurement (mm) | Error (mm) | Relative error |
|---|---|---|---|---|
| Pedestal length of worship hall | 15860 | 15839 | 21 | 1:755 |
| Width of the steps at the front door of the worship hall | 5040 | 5047 | -7 | 1:720 |
| Length of pool | 3500 | 3503 | -3 | 1:1167 |
| Patio width | 2335 | 2340 | -5 | 1:467 |
| Width of main entrance of front hall | 1815 | 1812 | 3 | 1:605 |
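The error columns of the table can be recomputed with a short Python sketch. It assumes, consistently with every row, that the error is the actual measurement minus the model measurement and that the relative error is expressed as 1:(actual / |error|), rounded to the nearest integer. The function name is illustrative.

```python
def relative_error(actual_mm, model_mm):
    """Return (error_mm, ratio_string) for one measured dimension."""
    error = actual_mm - model_mm
    if error == 0:
        return 0, "exact"
    return error, f"1:{round(actual_mm / abs(error))}"


if __name__ == "__main__":
    # (position, actual measurement in mm, model measurement in mm)
    rows = [
        ("Pedestal length of worship hall", 15860, 15839),
        ("Width of the steps at the front door", 5040, 5047),
        ("Length of pool", 3500, 3503),
        ("Patio width", 2335, 2340),
        ("Width of main entrance of front hall", 1815, 1812),
    ]
    for position, actual, model in rows:
        error, ratio = relative_error(actual, model)
        print(f"{position}: error {error} mm, relative error {ratio}")
```

Expressing the error relative to the measured length makes dimensions of very different sizes comparable, which is why the shortest spans show the coarsest ratios.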

In May 2022, the Opinions on Promoting the Implementation of the National Cultural Digitization Strategy, issued by the General Office of the CPC Central Committee and the General Office of the State Council (General Office of the CPC Central Committee and Council, 2022), clearly proposed that the achievements of digital projects and databases already built or under construction in the cultural field should be used as a whole to form a Chinese cultural database. Therefore, modern digital information technology can be used to comprehensively and scientifically record and preserve cultural heritage information, transform physical forms into digital information for storage, construct digital archives, and carry out permanent preservation and dynamic inheritance.

The digital collection of Li's Manor in Lichuan City, a national key cultural relics protection unit, is conducive to the restoration of local cultural relics, helps avoid secondary damage caused by human factors, and improves the safety of cultural relics. Digital technology makes it possible to preserve cultural relics and buildings in digital form, maximizing the preservation of their information and providing a foundation for inheritance. It also enables three-dimensional visualization of cultural relics and buildings, which helps researchers conduct more in-depth research.

For the 3D reconstruction of complex ancient buildings and the garden, this paper proposes an oblique photography approach that fuses images collected by multiple devices as the base data and summarizes the operating principles of different devices in the process of data acquisition. The technical flow reduces manual operations, lowers equipment performance requirements, and enhances the efficiency of image acquisition for 3D modelling. The model production process effectively and accurately fuses point clouds of different blocks using virtual control points, and the principles for selecting virtual control points were summarized. This enhanced the efficiency of 3D modelling and resulted in a highly refined real-scene 3D model of the buildings in Li's Manor.

In the application of ancient architecture surveying and mapping, oblique photography technology exhibits high efficiency and accuracy. However, it has limitations such as limited indoor image acquisition, building shadows, and restricted shooting angles. To address these limitations, it is currently necessary to combine oblique photography with other surveying and mapping technologies, such as laser scanning and panoramic cameras. This integration improves the accuracy and completeness of digital models.

Artificial intelligence (AI) is already being applied in the field of oblique photography. For example, automatic modelling algorithms can identify and analyse buildings in the data, enabling rapid generation of digital models. AI enables processing operations like automatic matching, cutting, and stitching of large amounts of image material, thereby significantly reducing processing time and improving processing efficiency. Furthermore, AI facilitates 3D modelling of buildings, weather monitoring, traffic flow analysis, and data extraction and analysis, contributing to a better understanding and utilisation of data (Xiong, 2019). In the future, AI technology can help address various challenges in oblique photography data processing and analysis, enhancing data accuracy and efficiency, and providing better services for digital architecture and cultural heritage preservation work. In our future research, combining AI-related technology with digital modelling methods may be a feasible way to improve the reconstruction process and data accuracy.

Conceptualization: C.Z. and XS.Y; methodology: C.Z. and XS.Y; field survey: D.Y.; result analysis: KL.D; data curation: J.M; writing: LJ.Z; supervision: XS.Y. All authors have read and agreed to the published version of the manuscript.

The authors declare that they have no conflicts of interest regarding the publication of the paper.

The study was supported by Key R&D Plan Projects in Hubei Province, Grant No. 2020BAB119.