2025, Vol. 6, No. 1, pp. 39-50

With the assistance of advanced technologies such as drones and artificial intelligence (AI), riparian environmental monitoring tasks, which have gained worldwide attention, have recently been gradually solved. However, practical questions remain for researchers who use drones and AI: how a trained AI model can be tested in practice, which recognition size yields higher object detection accuracy, and which backgrounds affect object detection accuracy. Although several waste datasets can serve as global benchmarks (e.g., UAVVaste, UAV-BD and MJU-Waste), Japan still has no systematic local benchmark dataset for riparian waste. This paper therefore takes the Asahi River Basin, Japan, as the study site and centers on UAV-derived riparian waste object detection. The authors examine how ground sample distance (GSD) and background (land cover) influence detection accuracy, using riparian waste images collected at different UAV flight altitudes (GSD) and in different sections (backgrounds) and evaluated at multiple recognition sizes. For a systematic analysis, the authors organized the data into groups by waste type (cans, cardboard, bottles and plastic bags), GSD (1.5, 2.0 and 2.5 cm/pixel), land-cover type (artificial and natural environments) and recognition size (342×342, 900×600 and 5472×3648 pixels). As another key point of this research, the authors applied an AIGC-based model to detect the large-size objects in section-1 with a detection ratio of over 80%. This result demonstrates the possibility of applying AIGC-based models in practical remote sensing tasks.

Riparian environmental monitoring tasks worldwide have recently been gradually solved with the assistance of drone- and AI-based technologies1), 2), 3). Among these tasks, waste pollution is an environmental challenge that is gaining more and more attention from citizens around the world. However, even as the environmental monitoring tasks are solved step by step, some practical issues remain for researchers who use drones and AI (e.g., how to test a model in a practical task, how to choose the image size for higher accuracy, and how different backgrounds affect accuracy).

To address these issues, using a benchmark dataset is one of the most common approaches for testing and assessment. Accordingly, several open-source datasets4), 5), 6) that include multiple backgrounds and waste types can be applied as global benchmarks (e.g., UAVVaste, UAV-BD and MJU-Waste). However, the content of these datasets is not derived from local Japanese environments. Although some local datasets1), 3) have been applied to practical issues for different purposes, local datasets are still too rare to cover all of the following factors at once: different types of waste objects, multiple backgrounds (land-cover types), and collection by low-altitude drones.

To close the gap between global and local benchmark datasets in environmental monitoring tasks, a systematic local benchmark dataset for riparian waste is necessary. With this objective, this work takes the Asahi River Basin, Okayama Prefecture, Japan, as the study site and focuses on detecting riparian waste objects in UAV imagery captured at different flight altitudes, under different backgrounds, and using multiple recognition sizes. The systematic analysis is organized into the following groups:

• Different types of waste (bottles, cardboard, cans and plastic bags; small- and large-size objects);

• Ground Sample Distance or GSD (1.5, 2.0 and 2.5 cm/pixel);

• Land-cover type (Paved Roads, Concrete, Structure, Brown-Grass and Green-Vegetation);

• Recognition-sizes (342×342, 900×600 and 5472×3648, pixel-unit).

After the analysis groups were fixed, the authors designed a workflow, shown in Fig.1, for collecting the benchmark dataset: a low-altitude drone dataset of riparian waste is collected and used to assess the trained Artificial Intelligence Generated Content (AIGC)-based model. The workflow has five main steps: Data collection, Data processing, 3 recognition-size-based groups, Trained models and Assessment.

1. Data collection

The authors placed the waste objects at the study site, which was divided into 3 sections with different backgrounds (land-cover types), and used the drone to photograph them. In each section, the drone flew at three different flight heights. All cans and bottles were collected from local dustbins, whereas the plastic bags and cardboard were newly bought. To reflect the local situation, a yellow pay-for-collection bag was chosen to supplement the ordinary plastic bags. After the experiments, the on-site operators collected all of the waste objects.

2. Data processing

Images that include waste objects were selected from each of the 3 sections (different backgrounds) at each of the 3 flight heights. The authors then used the online annotation website Roboflow7) to annotate the waste objects with a single label (waste), without distinguishing between object types.

3. Recognition-size-based groups (342×342, 900×600 and 5472×3648)

After collecting the drone photographs, the authors divided the raw data into three recognition-size-based groups (342×342, 900×600 and 5472×3648 pixels). Each group includes 3 sections, and each section contains 3 GSD patterns (1.5, 2.0 and 2.5 cm/pixel).
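
The grouping described here can be enumerated as a simple cross product. The following is a minimal sketch, not the authors' tooling; the names `RECOGNITION_SIZES`, `SECTIONS`, `GSD_CM_PER_PIXEL` and `enumerate_groups` are hypothetical and only the values come from the text.

```python
from itertools import product

# Values taken from the text; the variable names are illustrative only.
RECOGNITION_SIZES = [(342, 342), (900, 600), (5472, 3648)]  # width x height, pixels
SECTIONS = [1, 2, 3]
GSD_CM_PER_PIXEL = [1.5, 2.0, 2.5]


def enumerate_groups():
    """Return one record per (recognition size, section, GSD) combination."""
    return [
        {"size": size, "section": section, "gsd_cm": gsd}
        for size, section, gsd in product(RECOGNITION_SIZES, SECTIONS, GSD_CM_PER_PIXEL)
    ]


groups = enumerate_groups()
print(len(groups))  # 3 sizes x 3 sections x 3 GSDs = 27
```

Each record can then serve as a key for the image and annotation counts of the corresponding group.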

4. Trained AIGC-based model

After processing the drone photographs, the authors used the benchmark dataset from this research to test the models trained with the method in reference2), i.e., the AIGC- and AIGC+Real-based models. Fig.S1 and Fig.S2 show the training batch samples and the mAP50 results from training the AIGC- and AIGC+Real-based models. Both models were trained on approximately 60000 instances (i.e., the number of waste bounding boxes), all annotated as “Waste”. The trained models were used to detect the waste objects in the individual drone photographs.

5. Assessment

To validate the trained AIGC-based model, this research uses two assessment indices: mAP508), i.e., the average precision calculated at an Intersection-over-Union threshold of 0.5, considering only “easy” detections, and the detection ratio, i.e., the ratio of detected waste objects to the total number of waste objects. Table S1 lists the model-related parameter settings of the device used for the assessment.
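
To make the two indices concrete, the sketch below computes the Intersection-over-Union of two boxes and a detection ratio at the 0.5 threshold. It is an illustration under stated assumptions: boxes are `(x1, y1, x2, y2)` tuples, and the greedy one-to-one matching in `detection_ratio` is an assumed rule, since the text does not specify how detections are matched to annotations.

```python
def iou(a, b):
    """Intersection-over-Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detection_ratio(ground_truth, predictions, iou_thr=0.5):
    """Fraction of annotated boxes matched by a prediction with IoU >= iou_thr.

    Assumed rule: each prediction may match at most one annotation, and each
    annotation takes the unused prediction with the highest IoU.
    """
    used = set()
    hits = 0
    for gt in ground_truth:
        best_j, best_iou = None, iou_thr
        for j, pred in enumerate(predictions):
            if j in used:
                continue
            value = iou(gt, pred)
            if value >= best_iou:
                best_j, best_iou = j, value
        if best_j is not None:
            used.add(best_j)
            hits += 1
    return hits / len(ground_truth) if ground_truth else 0.0


# Hypothetical boxes: one annotation is found, the other is missed.
gt_boxes = [(0, 0, 10, 10), (20, 20, 30, 30)]
pred_boxes = [(0, 0, 10, 10), (100, 100, 110, 110)]
print(detection_ratio(gt_boxes, pred_boxes))  # 0.5
```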

As a local benchmark for waste object detection, the Local Low-Altitudes Drone-based Riparian Waste Benchmark Dataset (LAD-RWB) provides a reasonable tool not only for academia but also as a systematic reference (multiple backgrounds and flight heights) for decision-makers and industry. Given the rarity of riparian waste datasets, this research is significant.

(1) Study site

As shown in Fig.2 (upper), the study site is centered on the Gion area of the Asahi River, Okayama Prefecture, Japan. Fig.2 (middle) shows the area that was mainly chosen for placing the waste objects, and Fig.2 (lower) shows the 3 individual sections with different land-cover types within the area in Fig.2 (middle).

(2) Device

In this research, a DJI Mavic 2 Pro drone was used for data collection. This drone carries the Hasselblad L1D-20C camera with a 20 MP (million pixels) 1-inch sensor, and the Hasselblad Natural Color Solution (HNCS) helps ensure accurate color.

(3) Field Work

Before flying the drone, a manual check is necessary. As shown in Fig.3, one operator turns the drone through 360° so that the camera sensor can observe the environment around the drone, while another operator monitors the controller to confirm. After this confirmation, the drone is placed on the ground in preparation for the flight.

Fig.4 (a) is a panorama view of the on-site drone setup after the preparation, Fig.4 (b) shows the drone in flight, and Fig.4 (c) shows the controller user interface, which includes the satellite map and the corresponding drone flight route. During the flight, as shown in Table 1, the operator set the flight height to 60, 80 and 100 m (approximate GSD of 1.5, 2.0 and 2.5 cm/pixel, respectively). Fig.5 shows the difference among 3 samples under the different GSDs, and Fig.6 shows the drone photographs of the 3 individual sections.
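
The relation between flight height and GSD can be sketched with the usual nadir-camera formula, GSD = height × sensor width / (focal length × image width). The sensor width (13.2 mm) and focal length (10.26 mm) below are assumed nominal values for a 1-inch sensor camera and are not given in the text; only the image width of 5472 pixels comes from the recognition sizes listed above. The function name `gsd_cm_per_pixel` is hypothetical.

```python
def gsd_cm_per_pixel(height_m, sensor_width_mm=13.2, focal_length_mm=10.26,
                     image_width_px=5472):
    """Ground sample distance (cm/pixel) for a nadir-looking camera.

    sensor_width_mm and focal_length_mm are assumed nominal values,
    not figures reported in the paper.
    """
    gsd_m = height_m * sensor_width_mm / (focal_length_mm * image_width_px)
    return gsd_m * 100.0


for height in (60, 80, 100):
    print(height, f"{gsd_cm_per_pixel(height):.2f}")
# 60 1.41
# 80 1.88
# 100 2.35
```

Under these assumed camera parameters the computed values fall slightly below the approximate 1.5, 2.0 and 2.5 cm/pixel quoted in the text, and they scale linearly with flight height.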

Where possible, every type of waste object should be placed on every type of background to ensure the variety of the benchmark dataset. Because of the strong wind, stones were placed inside all of the plastic bags to keep them stable.

(4) Dataset

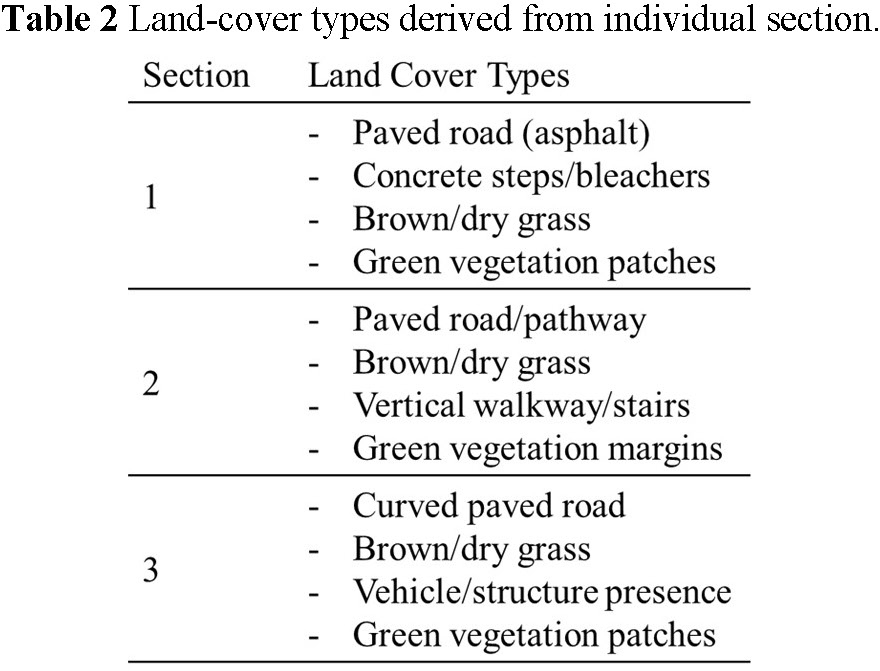

As shown in Table 2, each section contains several land-cover types, including but not limited to paved road, concrete steps/bleachers, vehicle walkway/structure, brown/dry grass and green vegetation patches. Based on these, the authors categorized the land-cover types from Table 2 as either built/paved surfaces (artificial) or vegetation (natural). Table 3 gives the details of the categories: asphalt, concrete, pathway, structure, brown-grass and green-vegetation. Fig.7 shows the drone photographs of the waste objects placed on each land-cover type. Tables S2 and S3 give the image and annotation numbers per group, covering the different recognition sizes, land-cover types, sections and GSDs.
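
The two-level categorization can be written as a lookup table. This is a minimal sketch: the six category names and their artificial/natural assignment follow the text, while the names `LAND_COVER_GROUP`, `background_group` and `count_by_group` are hypothetical.

```python
# Category names follow Table 3 as described in the text.
LAND_COVER_GROUP = {
    "asphalt": "artificial",
    "concrete": "artificial",
    "pathway": "artificial",
    "structure": "artificial",
    "brown-grass": "natural",
    "green-vegetation": "natural",
}


def background_group(land_cover):
    """Map a land-cover category name to 'artificial' or 'natural'."""
    key = land_cover.strip().lower()
    if key not in LAND_COVER_GROUP:
        raise KeyError(f"unknown land-cover category: {land_cover!r}")
    return LAND_COVER_GROUP[key]


def count_by_group(land_covers):
    """Count how many items fall into each background group."""
    counts = {"artificial": 0, "natural": 0}
    for name in land_covers:
        counts[background_group(name)] += 1
    return counts


# Hypothetical per-image labels, for illustration only.
print(count_by_group(["asphalt", "brown-grass", "concrete"]))
# {'artificial': 2, 'natural': 1}
```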

(5) Model

Following reference2), the authors applied the trained AIGC- and AIGC+Real-based models to detect the waste objects. The AIGC-based model was trained on images generated with the txt2img function of Stable Diffusion, which depict waste objects (including cardboard, bottles and plastic bags) on natural and artificial backgrounds similar to the land-cover types in this research. The AIGC+Real-based model was trained on the same generated dataset, supplemented with real objects on changed backgrounds.

(6) Objectives

This research places waste objects on different artificial and natural backgrounds to simulate remotely sensed riparian monitoring of real waste pollution. These waste objects (i.e., plastic waste and cardboard) were chosen because they are commonly found in riparian monitoring. The main objectives of this research are as follows:

1. Generating a systematic benchmark dataset with different types of waste, GSD, land-cover types and recognition sizes;

2. Assessing the AIGC- and AIGC+Real-based models when faced with practical issues.

(1) GSD-based results derived from AIGC-based model

The results in Fig.8 (a) show that both the trained AIGC- and AIGC+Real-based models reach an mAP50 of over 0.5 when detecting waste objects at the 60 and 80 m flight heights. Drone photographs taken at a lower flight height have the advantage of showing more features than those taken at a higher flight height. However, because of other factors (recognition size and land cover), some samples collected at low flight heights are still hard to detect.

From these results, the authors conclude that a lower flight height alone cannot guarantee a higher mAP50.

(2) Recognition-sizes-based results derived from AIGC-based models

As for why some of these samples are hard to detect, the feature gap between recognition sizes should be considered first. Even when the flight height is lowered from 100 m to 60 m, it is difficult to close the gap between the 342×342 and 5472×3648 recognition sizes. The change in comparative size (the ratio of the waste object to the recognition size) is considered the main reason. The results in Fig.8 (b) show that both the trained AIGC- and AIGC+Real-based models reach an mAP50 of around 0.5 when detecting waste objects at the 342×342, 900×600 and 5472×3648 recognition sizes.
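
The comparative-size argument can be illustrated numerically. The sketch below assumes that each recognition size is a crop at the native GSD (the text does not say whether the smaller sizes are crops or resized frames) and uses a hypothetical 0.30 m object; the function names are illustrative.

```python
def object_pixels(object_size_m, gsd_cm):
    """Approximate side length of an object in pixels at a given GSD."""
    return object_size_m * 100.0 / gsd_cm


def comparative_size(object_size_m, gsd_cm, recognition_width_px):
    """Ratio of the object's pixel extent to the recognition width."""
    return object_pixels(object_size_m, gsd_cm) / recognition_width_px


OBJECT_M = 0.30  # hypothetical object size, not taken from the paper

for gsd in (1.5, 2.5):
    for width in (342, 5472):
        ratio = comparative_size(OBJECT_M, gsd, width)
        print(f"GSD {gsd} cm/pixel, width {width} px: {ratio:.4f}")
```

Under this assumption, lowering the GSD from 2.5 to 1.5 cm/pixel enlarges the object by a factor of about 1.7, whereas moving from the 5472-pixel to the 342-pixel recognition width enlarges its comparative size by a factor of 16, which is consistent with the observation that flight height alone cannot close the gap.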

From these results, the authors conclude that waste objects can be detected with an mAP50 of over 0.5 at every recognition size, and that the highest mAP50 values come from the comparatively smaller recognition sizes. Cross-referencing with the GSD-based results gives the following GSD/recognition-size pairs with an mAP50 of over 0.5 for both the AIGC- and AIGC+Real-based models:

1. 60 m, 342×342 (section-1, -2 and -3)

2. 80 m, 342×342 (section-1, -2 and -3)

3. 80 m, 900×600 (section-1, -2 and -3)

4. 80 m, 5472×3648 (section-1)

Of these pairs, pair 4 can be used for large-scale searching, and pairs 1 to 3 can be used for confirming the number of objects.

(3) Land-cover-based results derived from AIGC-based model

As shown in Fig.8 (c), among all the land-cover types, asphalt has the maximum mAP50 value (0.8~0.9) and brown-grass has the minimum (0.1~0.2). All the artificial backgrounds (asphalt, concrete, pathway and structure) and green-vegetation include samples with an mAP50 of over 0.5 for both the AIGC- and AIGC+Real-based models.

As for the reasons, the brown-grass background makes waste objects, especially yellow plastic bags and small-size objects, difficult to detect. As shown in Fig.9, the authors used Gradient-weighted Class Activation Mapping (Grad-CAM)9) as a reference to provide a local explanation for the waste objects on the asphalt and brown-grass backgrounds. Grad-CAM values from low to high are displayed in blue, yellow and red, where red indicates stronger attention. In the brown-grass confidence panels of Fig.9, several yellow plastic bags and small-size objects are undetected; in contrast, on the asphalt background, all the waste objects are detected except one small-size object.

To look more closely at the yellow plastic bag on the asphalt and brown-grass backgrounds, the authors cropped the objects and backgrounds into separate images, as shown in Fig.10, to compare their contrast. As shown in Table 4, the difference in mean/median brightness values between the waste object and the background in the brown-grass sample is not large enough for extracting features, compared with the waste object on the asphalt background. In addition, sunlight reflected from the waste objects should be considered as another reason. The timing of drone photography needs to be adjusted to the differences and features of the backgrounds, e.g., by avoiding data collection in excessively strong sunshine.
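
A brightness comparison of this kind can be sketched as follows. The text does not state how brightness was computed, so the Rec. 601 luma weights used here are an assumption, the function names are hypothetical, and the pixel values are invented purely for illustration (they are not the values in Table 4).

```python
from statistics import mean, median


def luma(pixel):
    """Brightness of an (R, G, B) pixel using Rec. 601 weights (assumed)."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def brightness_stats(pixels):
    """Return (mean, median) brightness of a list of RGB pixels."""
    values = [luma(p) for p in pixels]
    return mean(values), median(values)


def brightness_gap(object_pixels, background_pixels):
    """Absolute (mean, median) brightness difference between two crops."""
    obj_mean, obj_median = brightness_stats(object_pixels)
    bg_mean, bg_median = brightness_stats(background_pixels)
    return abs(obj_mean - bg_mean), abs(obj_median - bg_median)


# Hypothetical crops: a yellow bag on gray asphalt and on dry grass.
yellow_bag = [(230, 200, 40)] * 4
asphalt = [(90, 90, 90)] * 4
dry_grass = [(190, 170, 110)] * 4

print(brightness_gap(yellow_bag, asphalt))
print(brightness_gap(yellow_bag, dry_grass))
```

With these invented colors, the gap against the asphalt crop is several times larger than the gap against the dry-grass crop, which mirrors the qualitative pattern described for Table 4.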

(4) Waste-based results derived from AIGC-based model

Given the effect of brown-grass, the authors chose one location without brown-grass to discuss the differences among the waste objects. As shown in Fig.11, the trained AIGC model detected 67 waste objects, all with a confidence value of over 0.1. As shown in Table 5, large-size objects were detected with comparatively high confidence (around 0.5~0.8) and an 82% detection ratio. On the other hand, the yellow plastic bags were not detected correctly. A likely reason is that yellow plastic bags are not common items worldwide, and it is not easy to generate “yellow-colored plastic bags” training images in Stable Diffusion with just the prompt “plastic bags”. Furthermore, small-size objects are not easy to detect and reached a detection ratio of only 35%.
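
A per-category detection ratio of the kind reported in Table 5 can be tallied as in the sketch below. The record format, the function name `detection_ratio_by_category` and the sample records are hypothetical; only the 0.1 confidence threshold comes from the text.

```python
from collections import defaultdict


def detection_ratio_by_category(records, conf_thr=0.1):
    """Detection ratio per category.

    records: iterable of (category, confidence), where confidence is None
    when the annotated object was not detected at all.
    """
    total = defaultdict(int)
    detected = defaultdict(int)
    for category, confidence in records:
        total[category] += 1
        if confidence is not None and confidence >= conf_thr:
            detected[category] += 1
    return {category: detected[category] / total[category] for category in total}


# Invented records for illustration; these are not the paper's data.
sample = [
    ("large", 0.8), ("large", 0.5), ("large", None), ("large", 0.6),
    ("small", 0.2), ("small", None), ("small", None), ("small", None),
]
print(detection_ratio_by_category(sample))
# {'large': 0.75, 'small': 0.25}
```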

This study presented a novel dataset for waste object detection in the Asahi River Basin, Japan, using a drone equipped with a 20 MP digital camera for data collection and the trained AIGC- and AIGC+Real-based models (YOLOv5) for data analysis. The collected dataset will be uploaded as a novel benchmark dataset.

After the systematic analysis, the authors found that the AIGC+Real-based model improves the detection accuracy (mAP50) over the AIGC-based model. Asphalt and brown-grass are the easiest and the most difficult backgrounds, respectively, for both the AIGC- and AIGC+Real-based models on the benchmark dataset in this research.

Given its systematic categories of flight height (GSD), recognition size and background (land cover), the benchmark dataset in this research is a reasonable tool for assessing drone-derived waste object detection models.

Fig.S1 shows the training batch samples for the AIGC- and AIGC+Real-based models. The AIGC-based model was trained with a batch size of 8 on Stable-Diffusion-generated AIGC data, using around 13.8 GB of GPU memory for approximately 15.5 hours. The AIGC+Real-based model was trained with a batch size of 16 on AIGC and Background-Changed Real World data, using around 21 GB of GPU memory for approximately 9 hours (the batch sizes were chosen based on the calculation time and GPU memory).

Fig.S2 shows the mAP50 results and instance numbers for training the AIGC- and AIGC+Real-based models. Both models were trained on a similar number of instances, which is intended to reduce the impact of the instance count on the comparison between the AIGC- and AIGC+Real-based models.

Table S1 shows the parameter settings of the computer used for training and assessment.

Table S2 shows the image and annotation numbers per group, covering the different recognition sizes, sections and GSDs (flight heights).

Table S3 shows the image and annotation numbers per group, covering the different land-cover types, recognition sizes and GSDs (flight heights).

Tables S2 and S3 provide systematic patterns: flight height and recognition size can be categorized from Table S2, and land-cover differences are mainly based on Table S3.

All the annotations in Tables S2 and S3 were labelled manually after visual confirmation. Objects that exist in an image but cannot be identified visually were not labelled.

This research was funded by the River Fund of the River Foundation, Japan, project number 2024-5211-067.