2017 Volume 93 Issue 3 Pages 99-124


Coding technology is used in several information processing tasks. In particular, when noise during transmission disturbs communications, coding technology is employed to protect the information. There are two types of coding technology: coding in classical information theory and coding in quantum information theory. Although the physical media used to transmit information ultimately obey quantum mechanics, we need to choose the type of coding depending on the kind of information device, classical or quantum, that is being used. In both branches of information theory, there are many elegant theoretical results under the ideal assumption that an infinitely large system is available. In a realistic situation, we need to account for finite size effects. The present paper reviews finite size effects in classical and quantum information theory with respect to various topics, including applied aspects.

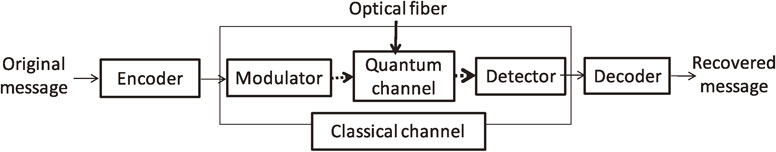

A fundamental problem in information processing is to transmit a message correctly via a noisy channel, where the noisy channel is mathematically described by a probabilistic relation between input and output symbols. To address this problem, we employ channel coding, which is composed of two parts: an encoder and a decoder. The key point of this technology is the addition of redundancy to the original message to protect it from corruption by the noise. The simplest channel code transmits the same information three times, as shown in Fig. 1. That is, when we need to send one bit of information, 0 or 1, we transmit three bits, 0, 0, 0 or 1, 1, 1. When an error occurs in only one of the three bits, we can easily recover the original bit. The conversion from 0 or 1 to 0, 0, 0 or 1, 1, 1 is called an encoder, and the conversion from the noisy three bits to the original one bit is called a decoder. A pair of an encoder and a decoder is called a code.

Channel coding with three-bit code.

In this example, the code has a large redundancy and the range of correctable errors is limited. For example, if two bits are flipped during the transmission, we cannot recover the original message. For practical use, we need to improve on this code, that is, decrease the amount of redundancy and enlarge the range of correctable errors.
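As a minimal sketch of the three-bit code in Fig. 1, the following Python snippet implements the encoder, a majority-vote decoder, and an exact computation of the decoding error probability. It assumes that each bit is flipped independently with probability p (a binary symmetric channel); this noise model and all function names are illustrative assumptions rather than anything specified in the text.

```python
from __future__ import annotations

import itertools


def encode(bit: int) -> tuple[int, int, int]:
    """Three-bit repetition encoder: 0 -> (0, 0, 0) and 1 -> (1, 1, 1)."""
    return (bit, bit, bit)


def decode(received: tuple[int, int, int]) -> int:
    """Majority-vote decoder: return the bit value that appears at least twice."""
    return 1 if sum(received) >= 2 else 0


def block_error_probability(p: float) -> float:
    """Exact probability that the decoded bit differs from the sent bit.

    Each of the three bits is flipped independently with probability p.
    By symmetry it suffices to send 0; all 8 flip patterns are enumerated.
    """
    total = 0.0
    for flips in itertools.product((0, 1), repeat=3):
        weight = sum(flips)
        prob = p ** weight * (1 - p) ** (3 - weight)
        received = tuple(b ^ f for b, f in zip(encode(0), flips))
        if decode(received) != 0:
            total += prob
    return total


print(block_error_probability(0.1))  # 3 p^2 (1 - p) + p^3, approximately 0.028 for p = 0.1
```

Decoding fails exactly when two or three bits flip, so the error probability is 3p²(1 − p) + p³. This is smaller than the uncoded error probability p whenever p < 1/2, at the cost of sending three bits per information bit.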

The reason for the large redundancy in the simple code described above is that the block-length (the number of bits in one block) of the code is only 3. In 1948, Shannon1) discovered that increasing the block-length n can reduce the redundancy and enlarge the range of correctable errors. In particular, he clarified the minimum redundancy required to correct errors with probability almost 1 when the block-length n is infinitely large. To discuss this problem, for a probability distribution P, he introduced the quantity H(P), which is called the (Shannon) entropy and expresses the uncertainty of the probability distribution P. He showed that, when the noise on each bit is generated independently according to the probability distribution P and the block-length n is infinitely large, a suitable code recovers the original message whenever the rate of redundancy exceeds the entropy H(P). This fact is called the channel coding theorem. Under these conditions, the limit of the minimum error probability depends only on whether the rate of the redundancy is larger than the entropy H(P) or not.

We can consider a similar problem when the channel is given as additive white Gaussian noise. In this case, the term redundancy is not appropriate because its meaning is not clear. In the following, instead of this term, we employ the transmission rate, which expresses the number of transmitted bits per channel use, to characterize the speed of the transmission. In the case of an additive white Gaussian channel, the channel coding theorem states that the optimal transmission rate is $\frac{1}{2}\log (1 + \frac{S}{N})$, where $\frac{S}{N}$ is the signal-to-noise ratio [2, Theorem 7.4.4]. However, we cannot directly apply the channel coding theorem to actual information transmission because this theorem guarantees only the existence of a code with the above ideal performance. To construct a practical code, we need another type of theory, which is often called coding theory. Many practical codes have been proposed, depending on the strength of the noise in the channel, and have been used in real communication systems. However, although these codes realize a sufficiently small error probability, none of them attained the optimal transmission rate. Since the 1990s, turbo codes and low-density parity check (LDPC) codes have been actively studied as useful codes.3),4) It was theoretically shown that they can attain the optimal transmission rate when the block-length n goes to infinity. However, no actually constructed code has attained the optimal transmission rate. Hence, many researchers have wondered what the real optimal transmission rate is. Here, we should emphasize that any actually constructed code has a finite block-length and will not necessarily attain the conventional asymptotic transmission rate.
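For concreteness, the capacity formula of the additive white Gaussian channel can be evaluated directly. The sketch below assumes a real-valued channel, measures the rate in bits per channel use (logarithm to base 2), and accepts the signal-to-noise ratio either as a plain ratio or in decibels; the helper names are hypothetical.

```python
import math


def awgn_capacity(snr: float) -> float:
    """Optimal rate (1/2) log2(1 + S/N) in bits per channel use, where snr = S/N."""
    if snr < 0:
        raise ValueError("the signal-to-noise ratio must be non-negative")
    return 0.5 * math.log2(1 + snr)


def snr_from_db(snr_db: float) -> float:
    """Convert a signal-to-noise ratio given in decibels into a plain ratio."""
    return 10 ** (snr_db / 10)


for snr_db in (0, 10, 20):
    print(snr_db, "dB ->", awgn_capacity(snr_from_db(snr_db)), "bits per channel use")
```

For example, S/N = 3 gives exactly 1 bit per channel use. This is the asymptotic limit only; it says nothing about the rate achievable at a given finite block-length.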

On the other hand, in 1962, Strassen5) addressed this problem by discussing the coefficient of the order $\frac{1}{\sqrt{n} }$ term in the transmission rate, which is called second-order asymptotic theory. The calculation of the second-order coefficient approximately gives the solution of the above problem, that is, the real optimal transmission rate with finite block-length n. Although he derived the second-order coefficient for discrete channels, he could not derive it for the additive white Gaussian channel. Also, in spite of the importance of his result, many researchers overlooked it because his paper was written in German. Therefore, subsequent researchers had to rediscover his result without the use of his derivation. The present paper explains how this problem has been resolved, even for the additive white Gaussian channel, by tracing the long history of classical and quantum information theory.
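In today's notation, the second-order result for a discrete memoryless channel is usually written as log M = nC − √(nV) Q⁻¹(ϵ) + O(log n), where M is the maximal number of messages at block-length n and error probability at most ϵ, C is the capacity, V is the channel dispersion, and Q⁻¹ is the inverse of the Gaussian tail function Q(t) = P(N(0,1) > t). As a minimal sketch, assuming a binary symmetric channel with crossover probability p (for which C = 1 − h(p) and V = p(1 − p)(log₂((1 − p)/p))² in bits) and simply dropping the O(log n) term, the following Python code evaluates this normal approximation of the finite-block-length rate. It is an approximation, not an exact bound, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def binary_entropy(p: float) -> float:
    """h(p) = -p log2 p - (1 - p) log2(1 - p), with h(0) = h(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_normal_approximation(n: int, p: float, eps: float) -> float:
    """Approximate (1/n) log2 M for a BSC(p) at block-length n and error eps.

    Uses C - sqrt(V / n) * Qinv(eps) and ignores the O(log n / n) remainder.
    Assumes 0 < p < 1/2 and 0 < eps < 1.
    """
    capacity = 1 - binary_entropy(p)
    dispersion = p * (1 - p) * math.log2((1 - p) / p) ** 2
    q_inv = NormalDist().inv_cdf(1 - eps)  # Q^{-1}(eps) for a standard normal
    return capacity - math.sqrt(dispersion / n) * q_inv


for n in (100, 1000, 10000):
    print(n, bsc_normal_approximation(n, p=0.11, eps=1e-3))
```

The gap to capacity shrinks like 1/√n, which illustrates why codes of practical length fall short of the asymptotic rate.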

Currently, finite block-length theory is one of the hottest topics in information theory and is discussed in more detail for various situations elsewhere.6)–17) Interestingly, in the study of finite-block-length theory, the formulation of quantum information theory becomes closer to that of classical information theory.18)

In addition to reliable information transmission, information theory studies data compression (source coding) and (secure) uniform random number generation. In these problems, we address a code with block-length n. When the information source is subject to the distribution P and the block-length n is infinitely large, the optimal conversion rate is H(P) in both problems. Finite-length analysis also plays an important role in secure information transmission. Typical secure information transmission methods are quantum cryptography and physical layer security. The aim of this paper is to review the finite-length analysis in these various topics in information theory. Further, finite-length analysis has been developed in conjunction with an unexpected effect from the theory of quantum information transmission, which is often called quantum information theory. Hence, we explain the relation between the finite-length analysis and quantum information theory.

The remainder of the paper is organized as follows. First, Section II outlines the notation used in information theory. Then, Section III explains how the quantum situation is formulated as a preparation for later sections. Section IV reviews the idea of an information spectrum, which is a general method used in information theory. The information spectrum plays an important role in the later development of finite-length analysis. Section V discusses folklore source coding, which is the first application of finite-length analysis. Then, Section VI addresses quantum cryptography, which is the first application to an implementable communication system. After a discussion of quantum cryptography, Section VII deals with second-order channel coding, which gives a fundamental bound for codes of finite length. Finally, Section VIII discusses the relation between finite-length analysis and physical layer security.

As a preparation for the following discussion, we provide the minimum mathematical basis for a discussion of information theory. To describe the uncertainty of a random variable X subject to the distribution PX on a finite set $\mathcal{X}$, Shannon introduced the Shannon entropy $H(P_{X}): = - \sum\nolimits_{x \in \mathcal{X}}P_{X} (x)\log P_{X}(x)$, which is often written as H(X). When −log PX(x) is regarded as a random variable, H(PX) can be regarded as its expectation under the distribution PX. When two distributions P and Q are given, the entropy is concave, that is, λH(P) + (1 − λ)H(Q) ≤ H(λP + (1 − λ)Q) for 0 < λ < 1. Due to the concavity, the maximum of the entropy is $\log |\mathcal{X}|$, attained by the uniform distribution, where $|\mathcal{X}|$ is the size of $\mathcal{X}$. To discuss the channel coding theorem, we need to consider the conditional distribution PY|X(y|x) = PY|X=x(y), where Y is a random variable in the finite set $\mathcal{Y}$; this conditional distribution describes the channel with input system $\mathcal{X}$ and output system $\mathcal{Y}$. In other words, the distribution of the value of the random variable Y depends on the value of the random variable X. In this case, we have the entropy H(PY|X=x), which depends on the input symbol $x \in \mathcal{X}$.
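A minimal Python sketch of these definitions follows, with logarithms taken to base 2 (so entropy is measured in bits) and the convention 0 log 0 = 0; the numerical distributions are arbitrary illustrations. It checks the concavity inequality for one mixture and the maximum log|𝒳| for a uniform distribution.

```python
import math


def entropy(dist: list[float]) -> float:
    """Shannon entropy H(P) = -sum_x P(x) log2 P(x) in bits, using 0 log 0 = 0."""
    return -sum(px * math.log2(px) for px in dist if px > 0)


P = [0.9, 0.1]
Q = [0.2, 0.8]
lam = 0.3
mixture = [lam * a + (1 - lam) * b for a, b in zip(P, Q)]

# Concavity: lam H(P) + (1 - lam) H(Q) <= H(lam P + (1 - lam) Q).
print(lam * entropy(P) + (1 - lam) * entropy(Q), "<=", entropy(mixture))

# The uniform distribution on 4 symbols attains the maximum log2 4 = 2 bits.
print(entropy([0.25] * 4))
```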

Now, we fix a distribution PX on the input system $\mathcal{X}$. Taking the average of the entropy H(PY|X=x), we obtain the conditional entropy $\sum\nolimits_{x \in \mathcal{X}}P_{X} (x)H(P_{Y|X = x})$, which is often written as H(Y|X). That is, the conditional entropy H(Y|X) can be regarded as the uncertainty of the system $\mathcal{Y}$ when we know the value on $\mathcal{X}$. On the other hand, when we do not know the value on $\mathcal{X}$, the distribution PY on $\mathcal{Y}$ is given as $P_{Y}(y): = \sum\nolimits_{x \in \mathcal{X}}P_{X} (x)P_{Y|X = x}(y)$. Then, the uncertainty of the system $\mathcal{Y}$ is given as the entropy $H(Y): = H(P_{Y})$, which is not smaller than the conditional entropy H(Y|X) due to the concavity of the entropy. So, the difference H(Y) − H(Y|X) can be regarded as the amount of information about the system $\mathcal{Y}$ that we gain by learning the value on the system $\mathcal{X}$. Hence, this value is called the mutual information between the two random variables X and Y, and is usually written as $I(X;Y)$. Here, however, we denote it by $I(P_{X},P_{Y|X})$ to emphasize the dependence on the distribution PX over the input system $\mathcal{X}$.
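The quantities H(Y|X), H(Y), and I(P_X, P_{Y|X}) can be computed directly from an input distribution and a channel matrix. In this sketch (an illustration, not code from the paper), `channel[x][y]` stores P_{Y|X=x}(y), and entropies are in bits.

```python
import math


def entropy(dist: list[float]) -> float:
    """Shannon entropy in bits, using 0 log 0 = 0."""
    return -sum(v * math.log2(v) for v in dist if v > 0)


def output_distribution(p_x: list[float], channel: list[list[float]]) -> list[float]:
    """P_Y(y) = sum_x P_X(x) P_{Y|X=x}(y)."""
    return [sum(px * row[y] for px, row in zip(p_x, channel))
            for y in range(len(channel[0]))]


def conditional_entropy(p_x: list[float], channel: list[list[float]]) -> float:
    """H(Y|X) = sum_x P_X(x) H(P_{Y|X=x})."""
    return sum(px * entropy(row) for px, row in zip(p_x, channel))


def mutual_information(p_x: list[float], channel: list[list[float]]) -> float:
    """I(P_X, P_{Y|X}) = H(Y) - H(Y|X), which is non-negative by concavity."""
    return entropy(output_distribution(p_x, channel)) - conditional_entropy(p_x, channel)


bsc = [[0.9, 0.1], [0.1, 0.9]]  # binary symmetric channel with crossover probability 0.1
print(mutual_information([0.5, 0.5], bsc))  # equals 1 - h(0.1), about 0.531 bits
```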

In channel coding, we usually employ the same channel PY|X repeatedly and independently (n times). The whole channel is then written as the conditional distribution

| \begin{equation*} P_{Y^{n}|X^{n} = x^{n}}(y^{n}): = P_{Y|X = x_{1}}(y_{1}) \cdots P_{Y|X = x_{n}}(y_{n}), \end{equation*} |

where $x^{n} = (x_{1}, \ldots ,x_{n}) \in \mathcal{X}^{n}$ and $y^{n} = (y_{1}, \ldots ,y_{n}) \in \mathcal{Y}^{n}$. A code is given as a pair of an encoder $E_{n}:\{ 1, \ldots ,M_{n}\} \to \mathcal{X}^{n}$ and a decoder $D_{n}:\mathcal{Y}^{n} \to \{ 1, \ldots ,M_{n}\} $. Under this formulation, we focus on the decoding error probability $\epsilon (E_{n},D_{n}): = \frac{1}{M_{n}}\sum\nolimits_{m = 1}^{M_{n}}(1 - \sum\nolimits_{y^{n}:D_{n}(y^{n}) = m}P_{Y^{n}|X^{n} = E_{n}(m)} (y^{n})) $, which expresses the performance of a code $(E_{n},D_{n})$. As another measure of the performance of a code $(E_{n},D_{n})$, we focus on the size Mn, which we denote by $|(E_{n},D_{n})|$. Now, we impose the condition $\epsilon (E_{n},D_{n}) \leq \epsilon $ on our code $(E_{n},D_{n})$ and maximize the size $|(E_{n},D_{n})|$. That is, we focus on $M(\epsilon |P_{Y^{n}|X^{n}}): = \max _{(E_{n},D_{n})}\{ |(E_{n},D_{n})|\mid \epsilon (E_{n},D_{n}) \leq \epsilon \} $. In this context, the quantity $\frac{1}{n}\log M(\epsilon |P_{Y^{n}|X^{n}})$ expresses the maximum transmission rate under the above conditions. The channel coding theorem characterizes the maximum transmission rate as follows.
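The decoding error probability ϵ(E_n, D_n) can be evaluated by brute force for very small n. The following sketch assumes a memoryless channel given by a matrix `channel[x][y]` = P_{Y|X=x}(y), represents the encoder as a list of codewords (indexing messages from 0 rather than 1), and represents the decoder as an ordinary function. It enumerates all |𝒴|ⁿ outputs, so it is only meant to illustrate the definition; the function names are hypothetical.

```python
from __future__ import annotations

import itertools
import math
from typing import Callable, Sequence


def decoding_error_probability(
    codebook: Sequence[tuple[int, ...]],
    decoder: Callable[[tuple[int, ...]], int],
    channel: Sequence[Sequence[float]],
) -> float:
    """epsilon(E_n, D_n) = (1/M_n) sum_m (1 - sum_{y^n: D_n(y^n) = m} P(y^n | E_n(m))).

    codebook[m] is the codeword E_n(m); channel[x][y] is P_{Y|X=x}(y), and the
    n-fold channel is the product of single-letter probabilities.
    """
    n = len(codebook[0])
    outputs = range(len(channel[0]))
    total_error = 0.0
    for m, codeword in enumerate(codebook):
        correct = sum(
            math.prod(channel[x][y] for x, y in zip(codeword, y_seq))
            for y_seq in itertools.product(outputs, repeat=n)
            if decoder(y_seq) == m
        )
        total_error += 1 - correct
    return total_error / len(codebook)


def majority(y: tuple[int, ...]) -> int:
    """Majority-vote decoder for the three-bit repetition code."""
    return int(sum(y) >= 2)


repetition = [(0, 0, 0), (1, 1, 1)]  # E_3(0) and E_3(1)
bsc = [[0.9, 0.1], [0.1, 0.9]]
print(decoding_error_probability(repetition, majority, bsc))  # about 0.028
```

Here |(E_n, D_n)| = M_n = 2 and n = 3, so this code has transmission rate (1/3) log 2.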

| \begin{align} &\lim_{n\to\infty}\frac{1}{n}\log M(\epsilon|P_{Y^{n}|X^{n}}) \\ &\quad= \max_{P_{X}}I(P_{X},P_{Y|X}),\quad 0 < \epsilon < 1. \end{align} | [1] |

To characterize the mutual information, we introduce the relative entropy between two distributions P and Q as $D(P\| Q): = \sum\nolimits_{x \in \mathcal{X}}P (x)\log \frac{P(x)}{Q(x)}$. When we introduce the joint distribution $P_{XY}(x,y): = P_{X}(x)P_{Y|X}(y|x)$ and the product distribution $(P_{X} \times P_{Y})(x,y): = P_{X}(x)P_{Y}(y)$, the mutual information is characterized as1),2)

| \begin{align} I(P_{X},P_{Y|X}) &= D(P_{XY}\| P_{X} \times P_{Y}) \\ &= \min_{Q_{Y}}D(P_{XY}\| P_{X} \times Q_{Y}) \\ &= \min_{Q_{Y}}\sum_{x}P_{X}(x)D(P_{Y|X = x}\| Q_{Y}). \end{align} | [2] |
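This characterization can be checked numerically on a small example. The sketch below (base-2 logarithms; the channel and distributions are arbitrary illustrations) computes D(P_XY‖P_X × P_Y) from the joint distribution and compares it with the average Σ_x P_X(x) D(P_{Y|X=x}‖Q_Y). The average is smallest when Q_Y = P_Y, because it equals I(P_X, P_{Y|X}) + D(P_Y‖Q_Y).

```python
import math


def relative_entropy(p: list[float], q: list[float]) -> float:
    """D(P||Q) = sum_x P(x) log2(P(x)/Q(x)); assumes Q(x) > 0 whenever P(x) > 0."""
    return sum(px * math.log2(px / qx) for px, qx in zip(p, q) if px > 0)


def average_divergence(p_x: list[float], channel: list[list[float]], q_y: list[float]) -> float:
    """sum_x P_X(x) D(P_{Y|X=x} || Q_Y)."""
    return sum(px * relative_entropy(row, q_y) for px, row in zip(p_x, channel))


p_x = [0.3, 0.7]
channel = [[0.9, 0.1], [0.2, 0.8]]  # channel[x][y] = P_{Y|X=x}(y)
p_y = [sum(px * row[y] for px, row in zip(p_x, channel)) for y in range(2)]

# Joint distribution P_XY and product distribution P_X x P_Y, flattened over (x, y).
joint = [px * w for px, row in zip(p_x, channel) for w in row]
product = [px * qy for px in p_x for qy in p_y]

mutual_info = relative_entropy(joint, product)
print(mutual_info, average_divergence(p_x, channel, p_y))  # equal
print(average_divergence(p_x, channel, [0.5, 0.5]))       # larger
```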

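The maximum of the mutual information over input distributions therefore has the following min-max form:

| \begin{align} &\max_{P_{X}}I(P_{X},P_{Y|X}) \\ &\quad= \max_{P_{X}}D(P_{XY}\| P_{X} \times P_{Y}) \\ &\quad= \max_{P_{X}}\min_{Q_{Y}}D(P_{XY}\| P_{X} \times Q_{Y}) \end{align} | [3] |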

| \begin{align} &\quad= \max_{P_{X}}\min_{Q_{Y}}\sum_{x}P_{X}(x)D(P_{Y|X = x}\| Q_{Y}) \\ &\quad= \min_{Q_{Y}}\max_{P_{X}}\sum_{x}P_{X}(x)D(P_{Y|X = x}\| Q_{Y}). \end{align} | [4] |
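For a general channel, the maximization over P_X has no closed form. One standard numerical method, which is not discussed in the text, is the Blahut-Arimoto algorithm. The sketch below uses its multiplicative update P_X(x) ← P_X(x) exp(D(P_{Y|X=x}‖P_Y)) followed by normalization (divergence in nats). It stops when the lower bound log Σ_x P_X(x) exp(D(P_{Y|X=x}‖P_Y)) and the upper bound max_x D(P_{Y|X=x}‖P_Y) on the capacity agree; the upper bound is the min-max expression in the last line evaluated at Q_Y = P_Y. The function name, iteration limit, and stopping rule are illustrative choices.

```python
import math


def blahut_arimoto(channel, max_iter=10_000, tol=1e-12):
    """Approximate max_{P_X} I(P_X, P_{Y|X}) in bits.

    channel[x][y] = P_{Y|X=x}(y). Returns (capacity_in_bits, input_distribution).
    """
    nx, ny = len(channel), len(channel[0])
    p_x = [1.0 / nx] * nx  # start from the uniform input distribution
    lower = 0.0
    for _ in range(max_iter):
        p_y = [sum(p_x[x] * channel[x][y] for x in range(nx)) for y in range(ny)]
        # c[x] = exp(D(P_{Y|X=x} || P_Y)) in nats; p_y[y] > 0 whenever the entry w > 0.
        c = [math.exp(sum(w * math.log(w / p_y[y]) for y, w in enumerate(row) if w > 0))
             for row in channel]
        weighted = [px * cx for px, cx in zip(p_x, c)]
        total = sum(weighted)
        lower = math.log(total)    # lower bound on the capacity (nats)
        upper = math.log(max(c))   # upper bound on the capacity (nats)
        p_x = [v / total for v in weighted]
        if upper - lower < tol:
            break
    return lower / math.log(2), p_x


z_channel = [[1.0, 0.0], [0.5, 0.5]]  # input 1 is received as 0 with probability 1/2
capacity, p_opt = blahut_arimoto(z_channel)
print(capacity, p_opt)  # close to log2(1.25) and [0.6, 0.4]
```

For this Z-channel, direct maximization of h(a/2) − a over a = P_X(1) gives a = 0.4 and capacity h(0.2) − 0.4 = log₂ 1.25 ≈ 0.322 bits, which the iteration reproduces.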

On the other hand, it is known that the relative entropy D(P∥Q) characterizes the performance of statistical hypothesis testing when both hypotheses are given as distributions P and Q. Hence, we can expect an interesting relation between channel coding and statistical hypothesis testing.

As a typical channel, we focus on an additive channel. When the input and output systems $\mathcal{X}$ and $\mathcal{Y}$ are given as the module $\mathbb{Z}/d\mathbb{Z}$, given the input $X \in \mathbb{Z}/d\mathbb{Z}$, the output $Y \in \mathbb{Z}/d\mathbb{Z}$ is given as Y = X + Z, where Z is the random variable describing the noise and is subject to the distribution PZ on $\mathbb{Z}/d\mathbb{Z}$. Such a channel is called an additive channel or an additive noise channel. In this case, the conditional entropy H(Y|X) is H(PZ), because the entropy H(PY|X=x) equals H(PZ) for any input $x \in \mathcal{X}$, and the mutual information $I(P_{X},P_{Y|X})$ is given by H(PY) − H(PZ). When the input distribution PX is the uniform distribution, the output distribution PY is also uniform and achieves the maximum entropy log d. So, the maximum mutual information $\max _{P_{X}}I(P_{X},P_{Y|X})$ is given as log d − H(PZ). That is, the maximum transmission rate equals log d − H(PZ). Without coding, the transmission rate is log d. Hence, the entropy H(PZ) can be regarded as the loss of transmission rate due to the coding. In this coding, we essentially add the redundancy H(PZ) in the encoding stage.
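The claim that a uniform input gives I(P_X, P_{Y|X}) = log d − H(P_Z) can be checked numerically. The sketch below builds the channel matrix of Y = X + Z mod d from an arbitrary example noise distribution P_Z (d = 3 here, an illustrative choice) and compares uniform and non-uniform inputs, with logarithms to base 2.

```python
import math


def entropy(dist: list[float]) -> float:
    """Shannon entropy in bits, using 0 log 0 = 0."""
    return -sum(v * math.log2(v) for v in dist if v > 0)


def additive_channel(p_z: list[float]) -> list[list[float]]:
    """Channel matrix of Y = X + Z on Z/dZ: P_{Y|X=x}(y) = P_Z((y - x) mod d)."""
    d = len(p_z)
    return [[p_z[(y - x) % d] for y in range(d)] for x in range(d)]


def mutual_information(p_x: list[float], channel: list[list[float]]) -> float:
    """I(P_X, P_{Y|X}) = H(Y) - H(Y|X) in bits."""
    p_y = [sum(px * row[y] for px, row in zip(p_x, channel)) for y in range(len(channel[0]))]
    return entropy(p_y) - sum(px * entropy(row) for px, row in zip(p_x, channel))


p_z = [0.7, 0.2, 0.1]
W = additive_channel(p_z)
print(mutual_information([1 / 3] * 3, W), math.log2(3) - entropy(p_z))  # equal
print(mutual_information([0.5, 0.3, 0.2], W))                          # smaller
```

Every row of the channel matrix is a cyclic shift of P_Z, which is why H(Y|X) = H(P_Z) regardless of the input distribution.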

It is helpful to explain concrete constructions of codes in the case of d = 2, in which $\mathbb{Z}/2\mathbb{Z}$ becomes the finite field $\mathbb{F}_{2}$, that is, the set $\{ 0,1\} $ with the operations of modular addition and multiplication. We assume that the additive noise $Z^{n} = (Z_{1}, \ldots ,Z_{n})$ is subject to the n-fold distribution $P_{Z}^{n}$ of n independent and identically distributed copies of Z ∼ PZ. (From now on, we call such distributions “iid distributions” for short.) The possible transmissions are then elements of $\mathbb{F}_{2}^{n}$, which is the set of n-dimensional vectors whose entries are either 0 or 1. In this case, we can consider the inner product in the vector space $\mathbb{F}_{2}^{n}$ using the multiplicative and additive operations of $\mathbb{F}_{2}$. When PZ(1) = p, the entropy H(PZ) is written as h(p), where the binary entropy is defined as h(p) := −p log p − (1 − p)log (1 − p). Since $\mathcal{X}^{n} = \mathbb{F}_{2}^{n}$, we choose a subspace C of $\mathbb{F}_{2}^{n}$ and identify the message set $\mathcal{M}_{n}$ with C. The encoder is given as the natural embedding of C into $\mathbb{F}_{2}^{n}$. To construct a suitable decoder, for each element [y] of the quotient space $\mathbb{F}_{2}^{n}/C$, that is, for each coset y + C, we choose the most probable noise pattern Γ([y]) in the coset [y]. Then, when we receive $y \in \mathbb{F}_{2}^{n}$, we decode it to y − Γ([y]). This kind of decoder is typical. To specify the subspace C, we often employ a parity check matrix K, in which case the subspace C is given as the kernel of K. Using the parity check matrix K, each coset in $\mathbb{F}_{2}^{n}/C$ can be identified with its image under K, which is called the syndrome. In this case, we denote the encoder by EK.

When Γ([y]) realizes $\max _{x^{n} \in [y]}P_{Z}^{n}(x^{n})$, the decoder is called the maximum likelihood decoder. This decoder minimizes the decoding error $\epsilon (E_{K},D)$ among all decoders D for the encoder EK. As another decoder, we can choose Γ([y]) such that Γ([y]) realizes $\min _{x^{n} \in [y]}|x^{n}|$, where |xn| is the number of 1s among the n entries (the Hamming weight). This decoder is called the minimum distance decoder. When $P_{Z}(0) > P_{Z}(1)$, the maximum likelihood decoder coincides with the minimum distance decoder. We denote the minimum distance decoder by DK,min. This type of code is often called an error correcting code.
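To make the syndrome-based minimum distance decoder D_{K,min} concrete, the sketch below uses the parity check matrix of the [7,4] Hamming code as an example of K; this particular matrix is an illustrative choice, not one given in the text. It tabulates, for each syndrome, a minimum-weight vector Γ([y]) in the corresponding coset and decodes y to y − Γ([y]); over 𝔽₂, subtraction is the same as addition modulo 2. Because the code is tiny, the coset table is built by brute force over all 2⁷ vectors, which would be infeasible for long codes.

```python
import itertools

# Parity check matrix of the [7,4] Hamming code (illustrative choice):
# column j (1-based) is the binary expansion of j, least significant bit in the first row.
K = [
    [1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]


def syndrome(K, y):
    """Syndrome K y over F_2; it labels the coset y + C, where C = ker K."""
    return tuple(sum(k * v for k, v in zip(row, y)) % 2 for row in K)


def coset_leaders(K):
    """Map each syndrome to a minimum-weight vector of its coset (Gamma for D_{K,min})."""
    n = len(K[0])
    leaders = {}
    # Visit vectors in order of increasing Hamming weight; keep the first per syndrome.
    for z in sorted(itertools.product((0, 1), repeat=n), key=sum):
        leaders.setdefault(syndrome(K, z), z)
    return leaders


def decode_min_distance(K, y, leaders):
    """D_{K,min}: subtract the estimated noise Gamma([y]) from y (XOR over F_2)."""
    gamma = leaders[syndrome(K, y)]
    return tuple((a + b) % 2 for a, b in zip(y, gamma))


leaders = coset_leaders(K)
codeword = (1, 1, 1, 0, 0, 0, 0)   # K codeword = 0, so it lies in C = ker K
received = (1, 1, 1, 0, 1, 0, 0)   # one bit flipped by the noise
print(decode_min_distance(K, received, leaders))  # recovers the codeword
```

Since P_Z(0) > P_Z(1) is assumed, the same table also realizes the maximum likelihood decoder here.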

When most of the entries of the parity check matrix K are zero, K is called an LDPC matrix. When the subspace C is given as the kernel of an LDPC matrix, the code is called an LDPC code. In this case, it is known that a good decoder can be realized with low computational complexity.3),4) Hence, LDPC codes are used for practical purposes.

To discuss the information transmission problem, we eventually need to address the properties of the physical media carrying the information. When we approach the ultimate limit of the information transmission rate as a theoretical problem, we need to consider the case when individual particles express each bit of information. That is, we focus on the information transmission rate under such an extreme situation. To realize the ultimate transmission rate, we need to use every photon (or every pulse) to describe one piece of information. Since the physical medium used to transmit the information behaves quantum mechanically under such conditions, the description of the information system needs to reflect this quantum nature.

Several researchers, such as Takahasi,19) started to consider the limit of optical communication in the 1960s. In 1967, Helstrom20),21) started to systematically formulate this problem as a new type of information processing system based on quantum theory, instead of an information transmission system with classical input and output, which obeys conventional probability theory. The study of information transmission based on such quantum media is called quantum information theory. In particular, research on channel coding for quantum media is called quantum channel coding. In contrast, information theory based on conventional probability theory is called classical information theory when we need to distinguish it from quantum information theory; this name applies even when the devices employ quantum effects internally, because their input and output are classical. In its early stage, quantum information theory was studied in depth by Holevo and was systematically summarized in his book22) in 1980.

Here, we point out that current optical communication systems are treated in the framework of classical information theory. However, optical communication can be treated in both classical and quantum information theory as follows (Figs. 2 and 3). Because the framework of classical information theory cannot deal with a quantum system, to consider optical communication within classical information theory, we need to fix the modulator converting the input signal to the input quantum state and the detector converting the output quantum state to the outcome, as shown in Fig. 2. Once we fix these, we have the conditional distribution connecting the input and output symbols, which describes the channel in the framework of classical information theory. That is, we can apply classical information theory to the classical channel. The encoder is the process converting the message (to be sent) to the input signal, and the decoder is the process recovering the message from the outcome.

Classical channel coding for optical communication. Dashed thick arrows indicate quantum state transmission. Normal thin arrows indicate classical information.

Quantum channel coding for optical communication. Dashed thick arrows indicate quantum state transmission. Normal thin arrows indicate classical information.

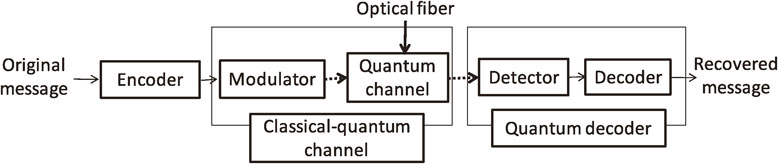

On the other hand, when we discuss optical communication within the framework of quantum information theory as shown in Fig. 3, we focus on the quantum channel, whose input and output are given as quantum states. When the quantum system is characterized by the Hilbert space $\mathcal{H}$, a quantum state is given as a density matrix ρ on $\mathcal{H}$, which is a positive semidefinite matrix with trace 1. Within this framework, we combine a classical encoder and a modulator into a quantum encoder, in which the message is directly converted to the input quantum state. Similarly, we combine a classical decoder and a detector into a quantum decoder, in which the message is directly recovered from the output quantum state. Once optical communication is treated in the framework of quantum information theory, our coding operation is given as the combination of a quantum encoder and a quantum decoder. This framework allows us to employ physical processes across multiple pulses as a quantum encoder or decoder, so quantum information theory clarifies how much such a correlating operation enhances the information transmission speed. It is also possible to fix only the modulator and discuss the combination of a classical encoder and a quantum decoder, which is called classical-quantum channel coding, as shown in Fig. 4. A classical-quantum channel is given as a map from an element x of the input classical system $\mathcal{X}$ to an output quantum state ρx, which is given as a density matrix on the output quantum system $\mathcal{H}$.

Classical-quantum channel coding for optical communication. Dashed thick arrows indicate quantum state transmission. Normal thin arrows indicate classical information.

Here, we remark that the framework of quantum information theory mathematically contains the framework of classical information theory as the commutative special case, that is, the case when all ρx commute with each other. This feature contrasts with the fact that a quantum Turing machine does not contain the conventional Turing machine as the commutative special case. Hence, when we obtain a novel result in quantum information theory and it is still novel even in the commutative special case, it is automatically a novel result in classical information theory. This is a major advantage and became a driving force for later unexpected theoretical developments.

A remarkable achievement of the early stage was made by Holevo in 1979, who obtained a partial result for the classical-quantum channel coding theorem.23),24) However, this research direction entered a period of stagnation in the 1980s. In the 1990s, quantum information theory entered a new phase and was studied from a new viewpoint. For example, Schumacher introduced the concept of a typical sequence in a quantum system.25) This idea brought us new developments and enabled us to extend data compression to the quantum setting.25) Based on this idea, Holevo26) and Schumacher and Westmoreland27) independently proved the classical-quantum channel coding theorem, which had been unsolved until that time.

Unfortunately, a quantum operation in the framework of quantum information theory is not necessarily available with current technology. Hence, these achievements remain more theoretical than the classical channel coding theorem. However, such theoretical results have, in a sense, brought us more practical results, as we shall see later.

Now, we give a formal statement of the quantum channel coding theorem for the classical-quantum channel $x \mapsto \rho _{x}$. For this purpose, we introduce the von Neumann entropy H(ρ) := −Tr ρ log ρ for a given density matrix ρ. It is known that the von Neumann entropy is also concave, just as in the classical case. When we employ the same classical-quantum channel n times, the total classical-quantum channel is given as the map $x^{n}( \in \mathcal{X}^{n}) \mapsto \rho _{x^{n}}^{(n)}: = \rho _{x_{1}} \otimes \cdots \otimes \rho _{x_{n}}$. While an encoder is given in the same way as in the classical case, a decoder is defined differently because it is given by a quantum measurement on the output quantum system $\mathcal{H}^{\otimes n}$. The most general description of a quantum measurement on the output quantum system $\mathcal{H}^{\otimes n}$ is given by a positive operator-valued measure $D_{n} = \{ \Pi _{m}\} _{m = 1}^{M_{n}}$, in which each Πm is a positive semidefinite matrix on $\mathcal{H}^{\otimes n}$ and the condition $\sum\nolimits_{m = 1}^{M_{n}}\Pi _{m} = I$ holds. As explained in [35, (4.7)][115, (8.48)], the decoding error probability is given as $\epsilon (E_{n},D_{n}): = \frac{1}{M_{n}}\sum\nolimits_{m = 1}^{M_{n}}(1 - \text{Tr}\,\Pi _{m}\rho _{E_{n}(m)}^{(n)}) $. So, we can define the maximum transmission size $M(\epsilon |\rho _{\cdot }^{(n)}): = \max _{(E_{n},D_{n})}\{ |(E_{n},D_{n})|\mid \epsilon (E_{n},D_{n}) \leq \epsilon \} $. On the other hand, the mutual information is defined as $I(P_{X},\rho _{\cdot }): = H(\sum\nolimits_{x}P_{X} (x)\rho _{x}) - \sum\nolimits_{x}P_{X} (x)H(\rho _{x})$. So, the maximum transmission rate is characterized by the quantum channel coding theorem as follows:

| \begin{equation} \lim_{n\to\infty}\frac{1}{n}\log M(\epsilon|\rho_{\cdot}^{(n)}) = \max_{P_{X}}I(P_{X},\rho_{\cdot}),\quad 0 < \epsilon < 1. \end{equation} | [5] |
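As an illustration of the right-hand side, the following sketch evaluates I(P_X, ρ_·) = H(Σ_x P_X(x)ρ_x) − Σ_x P_X(x)H(ρ_x) for a qubit, with entropies in bits. To stay self-contained, it assumes real symmetric 2×2 density matrices, whose eigenvalues have a closed form; general Hermitian matrices would need a numerical eigensolver such as NumPy's `numpy.linalg.eigvalsh`. The states |0⟩ and |+⟩ = (|0⟩ + |1⟩)/√2 are an arbitrary example, and the value computed is the mutual information for the given P_X, not its maximum. All function names are hypothetical.

```python
import math


def eigenvalues_2x2(m):
    """Eigenvalues of a real symmetric 2x2 matrix [[a, b], [b, c]]."""
    (a, b), (_, c) = m
    mean = (a + c) / 2
    radius = math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    return (mean + radius, mean - radius)


def von_neumann_entropy(rho):
    """H(rho) = -Tr rho log2 rho, computed from the eigenvalues of rho (0 log 0 = 0)."""
    return -sum(lam * math.log2(lam) for lam in eigenvalues_2x2(rho) if lam > 1e-15)


def holevo_quantity(p_x, states):
    """I(P_X, rho) = H(sum_x P_X(x) rho_x) - sum_x P_X(x) H(rho_x)."""
    avg = [[sum(p * s[i][j] for p, s in zip(p_x, states)) for j in range(2)]
           for i in range(2)]
    return von_neumann_entropy(avg) - sum(p * von_neumann_entropy(s)
                                          for p, s in zip(p_x, states))


ket0 = [[1.0, 0.0], [0.0, 0.0]]       # |0><0|
ket_plus = [[0.5, 0.5], [0.5, 0.5]]   # |+><+|
print(holevo_quantity([0.5, 0.5], [ket0, ket_plus]))  # about 0.60 bits
```

In the commutative case, where all ρ_x are diagonal in a common basis, the same function returns the classical mutual information defined earlier; for instance, the orthogonal states |0⟩⟨0| and |1⟩⟨1| with a uniform prior give exactly 1 bit.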

To characterize the mutual information $I(P_{X},\rho _{ \cdot })$, we represent the classical system $\mathcal{X}$ by the quantum system $\mathcal{H}_{X}$ spanned by the orthonormal basis {|x⟩} and introduce the density matrix $\rho _{XY}: = \sum\nolimits_{x \in \mathcal{X}}P_{X} (x)|x\rangle \langle x| \otimes \rho _{x}$ on the joint system $\mathcal{H}_{X} \otimes \mathcal{H}$ and the density matrix $\rho _{Y}: = \sum\nolimits_{x \in \mathcal{X}}P_{X} (x)\rho _{x}$ on the quantum system $\mathcal{H}$. In this notation, we regard PX as the density matrix $\sum\nolimits_{x \in \mathcal{X}}P_{X} (x)|x\rangle \langle x|$ on $\mathcal{H}_{X}$. Using the quantum relative entropy D(ρ∥σ) := Tr ρ(log ρ − log σ) between two density matrices ρ and σ, the mutual information is written as

| \begin{align} I(P_{X},\rho_{\cdot}) &= D(\rho_{XY}\|P_{X} \otimes \rho_{Y}) \\ &= \min_{\sigma_{Y}}D(\rho_{XY}\|P_{X}\otimes\sigma_{Y}). \end{align} | [6] |

Hence, the maximum mutual information is written as

| \begin{align} \max_{P_{X}}I(P_{X},\rho_{\cdot}) = {}&\max_{P_{X}}D(\rho_{XY}\|P_{X} \otimes \rho_{Y}) \\ = {}&\max_{P_{X}}\min_{\sigma_{Y}}D(\rho_{XY}\|P_{X} \otimes \sigma_{Y}). \end{align} | [7] |

Here, it is necessary to discuss the relation between classical and quantum information theory. For this purpose, we focus on information transmission via communication on an optical fiber. When we employ coding in classical information theory, we choose a code based on classical information devices, which are the input and the output of the classical channel shown in Fig. 2. In contrast, when we employ coding in quantum information theory, we choose a code based on quantum information devices, which are the input and the output of the quantum channel shown in Fig. 3. In the case of Fig. 4, we address the classical-quantum channel so that we focus on the output system as a quantum information device. That is, the choice between classical and quantum information theory is determined by the choice of a classical or quantum information device, respectively.

The early stage of the development of finite block-length studies started from a completely different motivation and used the information spectrum method introduced by Han and Verdú.28),31) Conventional studies in information theory usually impose the iid or memoryless condition on the information source or the channel. However, in practice, neither the information source nor the channel is usually memoryless, and they often have correlations. Hence, information theory needed to be adapted to such situations.

To resolve such a problem, Verdú and Han discussed the optimal performance in several topics in classical information theory, including channel coding, by using the behavior of the logarithmic likelihood, as shown in Fig. 5.30) However, they discussed only the case when the block-length n approaches infinity and did not study the case with finite block-length. It is notable that this study clarified that the analysis of the iid case can be reduced to the law of large numbers. In this way, the information spectrum method has clarified the mathematical structures of many topics in information theory, which has worked as a silent trigger for further developments.

Structure of information spectrum: The information spectrum method discusses the problem in two steps. One is the step connecting the information source and the behavior of the logarithmic likelihood. The other is the step connecting the behavior of the logarithmic likelihood and the optimal performance in the respective tasks.

Another important contribution of the information spectrum method is the connection of simple statistical hypothesis testing to many topics in classical information theory.31) Here, simple statistical hypothesis testing is the problem of deciding which of two given candidate distributions is the true one, with an asymmetric treatment of the two kinds of errors. In particular, the information spectrum method has revealed that the performances of data compression and uniform random number generation are given by the behavior of the logarithmic likelihood.

Here, we briefly discuss the idea of the information spectrum approach in the case of uniform random number generation. Let $\mathcal{X}_{n}$ be the original system, where n is an index. The product set $\mathcal{X}^{n}$ is a typical example of this notation. In uniform random number generation, we prepare another set $\mathcal{Y}_{n}$, on which we generate an approximately uniform random number Yn. In this formulation, we focus on the initial distribution $P_{X_{n}}$ on $\mathcal{X}_{n}$. Then, our operation is given as a map ϕn from $\mathcal{X}_{n}$ to $\mathcal{Y}_{n}$. The resultant distribution on $\mathcal{Y}_{n}$ is given as $P_{X_{n}} \circ \phi _{n}^{ - 1}(y): = \sum\nolimits_{x:\phi _{n}(x) = y}P_{X_{n}} (x)$. To discuss the quality of the resultant uniform random number, we employ the uniform distribution $P_{\mathcal{Y}_{n},\text{mix}}(y): = \frac{1}{|\mathcal{Y}_{n}|}$ on $\mathcal{Y}_{n}$. So, the error of the operation ϕn is given as $\gamma (\phi _{n}): = \frac{1}{2}\sum\nolimits_{y \in \mathcal{Y}_{n}}| P_{X_{n}} \circ \phi _{n}^{ - 1}(y) - P_{\mathcal{Y}_{n},\text{mix}}(y)|$. Now, we define the maximum size of the uniform random number with error ϵ as $S_{n}(\epsilon |P_{X_{n}}): = \max _{\phi _{n}}\{ |\mathcal{Y}_{n}| \mid \gamma (\phi _{n}) \leq \epsilon \} $. Vembu and Verdú [29, Section V] showed that
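For small examples, the error γ(ϕ_n) can be computed exhaustively. The sketch below takes 𝒳_n = {0,1}ⁿ with an iid Bernoulli(p) source and, as a hypothetical choice of ϕ_n, the parity map onto 𝒴_n = {0,1}. For this map, the pushforward probability of 0 is (1 + (1 − 2p)ⁿ)/2, so γ(ϕ_n) = (1 − 2p)ⁿ/2, which the code reproduces. Names and parameters are illustrative assumptions.

```python
import itertools
import math
from collections import defaultdict


def pushforward(p_x: dict, phi) -> dict:
    """P_{X_n} o phi^{-1}(y) = sum of P_{X_n}(x) over all x with phi(x) = y."""
    out = defaultdict(float)
    for x, px in p_x.items():
        out[phi(x)] += px
    return dict(out)


def gamma(p_x: dict, phi, y_set: list) -> float:
    """gamma(phi) = (1/2) sum_{y in Y_n} |P_{X_n} o phi^{-1}(y) - 1/|Y_n||."""
    q = pushforward(p_x, phi)
    return 0.5 * sum(abs(q.get(y, 0.0) - 1 / len(y_set)) for y in y_set)


def parity(x: tuple) -> int:
    """Hypothetical map phi_n: {0,1}^n -> {0,1}, the XOR of all bits."""
    return sum(x) % 2


n, p = 6, 0.2
source = {x: math.prod(p if bit else 1 - p for bit in x)
          for x in itertools.product((0, 1), repeat=n)}
print(gamma(source, parity, [0, 1]), (1 - 2 * p) ** n / 2)  # equal
```

Because γ decays exponentially in n here, extracting a single almost uniform bit is easy; the question addressed by S_n(ϵ|P_{X_n}) is how large 𝒴_n can be made.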

| \begin{align} &{\lim_{n \to \infty}\frac{1}{n}\log S_{n}(\epsilon |P_{X_{n}})} \\ &{\quad= \sup_{R}\left\{R|{\lim_{n \to \infty}}P_{X_{n}}\left\{x \in \mathcal{X}_{n}\biggm| - \frac{1}{n}\log P_{X_{n}}(x) \leq R\right\} \leq \epsilon\right\}.} \end{align} | [8] |
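To see how this formula behaves in the iid case, take 𝒳_n = {0,1}ⁿ with an iid Bernoulli(p) source. Then −(1/n) log P_{X_n}(x) depends only on the number k of ones in x, and by the law of large numbers its distribution concentrates at H(P) = h(p). The probability inside the formula therefore tends to 0 for R < h(p) and to 1 for R > h(p), so the supremum equals h(p) for every 0 < ϵ < 1. The sketch below (base-2 logarithms; p = 0.1 and the offsets ±0.1 are arbitrary choices) computes that probability exactly from the binomial distribution.

```python
import math


def spectrum_cdf(n: int, p: float, r: float) -> float:
    """P_{X^n}{x : -(1/n) log2 P_{X^n}(x) <= r} for an iid Bernoulli(p) source, 0 < p < 1."""
    total = 0.0
    for k in range(n + 1):  # k = number of ones in x
        self_information = -(k * math.log2(p) + (n - k) * math.log2(1 - p)) / n
        if self_information <= r:
            # Binomial probability C(n, k) p^k (1 - p)^(n - k), computed in log space.
            log_prob = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                        + k * math.log(p) + (n - k) * math.log(1 - p))
            total += math.exp(log_prob)
    return total


p = 0.1
h = -p * math.log2(p) - (1 - p) * math.log2(1 - p)
for n in (10, 100, 1000):
    print(n, spectrum_cdf(n, p, h - 0.1), spectrum_cdf(n, p, h + 0.1))
```

As n grows, the first column decreases toward 0 and the second increases toward 1, which is the law-of-large-numbers behavior that the information spectrum method exploits.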

In the channel coding case, we focus on a general conditional distribution $P_{Y_{n}|X_{n}}(y|x)$ as the channel. Then, Verdú and Han30) derived the maximum transmission rate as

| \begin{align} &\lim_{n \to \infty}\frac{1}{n}\log M(\epsilon|P_{Y_{n}|X_{n}}) \\ &\quad= \sup_{\{P_{X_{n}}\}}\sup_{R}\biggl\{R\biggm|{\lim_{n \to \infty}}P_{X_{n},Y_{n}}\biggl\{(x,y) \in \mathcal{X}_{n} \\ &\qquad\times \mathcal{Y}_{n}\biggm|\frac{1}{n}\log\frac{P_{Y_{n}|X_{n}}(y|x)}{P_{Y_{n}}(y)}\leq R\biggr\}\leq \epsilon\biggr\}. \end{align} | [9] |

where $P_{Y_{n}}$ denotes the output distribution induced by $P_{X_{n}}$. In particular, for an additive noise channel $Y_{n} = X_{n} + Z_{n}$ on a finite additive group $\mathcal{X}_{n} = \mathcal{Y}_{n} = \mathcal{Z}_{n}$, where the noise $Z_{n}$ obeys $P_{Z_{n}}$ independently of $X_{n}$, the formula [9] simplifies to

| \begin{align} &\lim_{n \to \infty}\frac{1}{n}\log M(\epsilon|P_{Y_{n}|X_{n}}) \\ &\quad= \sup_{R}\biggl\{R\biggm|{\lim_{n \to \infty}}P_{Z_{n}}\biggl\{z\in \mathcal{Z}_{n}\biggm|\frac{1}{n}(\log P_{Z_{n}}(z) \\ &\qquad+ \log|\mathcal{Z}_{n}|) \leq R\biggr\}\leq \epsilon\biggr\}. \end{align} | [10] |

As already mentioned, the information spectrum approach was originally motivated by a different problem. When Han and Verdú28) introduced this method, they considered identification codes, which had been introduced by Ahlswede and Dueck.137) To analyze identification codes, Han and Verdú introduced another problem, channel resolvability, which concerns approximating a given output distribution by the output distribution induced by the uniform distribution on a small set of inputs. That is, they consider

| \begin{equation} T(\epsilon |P_{Y_{n}|X_{n}}): = \max_{P_{X_{n}}}T(\epsilon|P_{X_{n}},P_{Y_{n}|X_{n}}), \end{equation} | [11] |

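| \begin{align} &T(\epsilon |P_{X_{n}},P_{Y_{n}|X_{n}})\\ &\quad := \min_{\mathcal{T}_{n}}\min_{\phi_{n}}\Biggl\{|\mathcal{T}_{n}|\Biggm|\frac{1}{2}\sum_{y \in \mathcal{Y}_{n}}\biggl|\sum_{x \in \mathcal{X}_{n}}P_{Y_{n}|X_{n}}(y|x)P_{X_{n}}(x) \\ &\qquad- \sum_{x \in \mathcal{X}_{n}}P_{Y_{n}|X_{n}}(y|x)\sum_{u:\phi_{n}(u) = x}P_{\mathcal{T}_{n},\text{mix}}(u)\biggr|\leq \epsilon\Biggr\}, \end{align} | [12] |

where $\mathcal{T}_{n}$ is a finite index set, $\phi_{n}$ is a map from $\mathcal{T}_{n}$ to $\mathcal{X}_{n}$, and $P_{\mathcal{T}_{n},\text{mix}}$ is the uniform distribution on $\mathcal{T}_{n}$. Han and Verdú28) showed that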

| \begin{align} &\lim_{\epsilon\to 0}\lim_{n \to \infty}\frac{1}{n}\log T(\epsilon|P_{Y_{n}|X_{n}})\\ &\quad=\lim_{\epsilon \to 0}\sup_{\{P_{X_{n}}\}}\sup_{R}\biggl\{R\biggm|{\lim_{n \to \infty}}P_{X_{n},Y_{n}}\biggl\{(x,y) \in \mathcal{X}_{n} \\ &\qquad\times \mathcal{Y}_{n}\biggm|\frac{1}{n}\log\frac{P_{Y_{n}|X_{n}}(y|x)}{P_{Y_{n}}(y)} \leq R\biggr\}\leq \epsilon\biggr\} . \end{align} | [13] |
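The quantity bounded by $\epsilon$ in [12] can be evaluated numerically for a toy channel. The Python sketch below assumes, purely for illustration, $n$ uses of a binary symmetric channel with crossover probability `p` and a uniform target input distribution; all function names are hypothetical. A codebook plays the role of the image of $\phi_{n}$ (inputs may repeat), and the function returns the variational distance between the target output distribution and the output distribution induced by the uniform distribution on the codebook.

```python
from collections import defaultdict
from itertools import product


def bsc_channel(n, p):
    """W(y|x) for n uses of a binary symmetric channel, as {x: {y: prob}} over bit tuples."""
    words = list(product((0, 1), repeat=n))
    return {x: {y: p ** sum(a != b for a, b in zip(x, y))
                   * (1 - p) ** sum(a == b for a, b in zip(x, y))
                for y in words}
            for x in words}


def output_distribution(input_dist, channel):
    """Return y -> sum_x W(y|x) P(x)."""
    out = defaultdict(float)
    for x, px in input_dist.items():
        for y, w in channel[x].items():
            out[y] += px * w
    return out


def resolvability_error(P_X, channel, codebook):
    """(1/2) sum_y |P_Y(y) - output probability under the uniform distribution on the codebook|."""
    target = output_distribution(P_X, channel)
    uniform_on_codebook = defaultdict(float)
    for x in codebook:  # phi_n(u) = codebook[u] with u uniform on T_n
        uniform_on_codebook[x] += 1.0 / len(codebook)
    simulated = output_distribution(uniform_on_codebook, channel)
    ys = set(target) | set(simulated)
    return 0.5 * sum(abs(target.get(y, 0.0) - simulated.get(y, 0.0)) for y in ys)


n, p = 3, 0.1
W = bsc_channel(n, p)
P_X = {x: 2.0 ** -n for x in W}
for codebook in ([(0, 0, 0)], [(0, 0, 0), (1, 1, 1)], list(W)):
    print(len(codebook), resolvability_error(P_X, W, codebook))
```

Using every input word reproduces the target exactly; the interesting regime for [13] is how small the codebook can be while the error stays below $\epsilon$.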

In the next stage, Nagaoka and the author extended the information spectrum method to the quantum case.32),33) Their contribution was not only the non-commutative extension but also a redevelopment of information theory. In particular, they clarified the explicit relation between simple statistical hypothesis testing and channel coding, which is called the dependence test bound in later studies [33, Remark 15]. In the same context, Nagaoka34) developed another explicit relation between simple statistical hypothesis testing and channel coding, which is called the meta converse inequality.$^{1}$ These two relations between simple statistical hypothesis testing and channel coding paved the way for the next step, finite-length analysis.

Now, to grasp the essence of these contributions, we revisit the classical setting, because the quantum setting is more complicated. To explain the notation of classical hypothesis testing, we consider testing between two distributions $P_{1}$ and $P_{0}$ on the same system $\mathcal{X}$. In general, a test is described by a function $T$ from $\mathcal{X}$ to $[0,1]$: when we observe $x \in \mathcal{X}$, we support $P_{1}$ with probability $T(x)$ and support $P_{0}$ with probability $1 - T(x)$. When $T$ takes values only in $\{ 0,1\} $, the decision is deterministic. There are two types of error probability. The first is the probability of erroneously supporting $P_{1}$ when the true distribution is $P_{0}$, which is given by $\alpha (T|P_{0}\| P_{1}): = \sum\nolimits_{x \in \mathcal{X}}T (x)P_{0}(x)$. The second is the probability of erroneously supporting $P_{0}$ when the true distribution is $P_{1}$, which is given by $\beta (T|P_{0}\| P_{1}): = \sum\nolimits_{x \in \mathcal{X}}(1 - T( x))P_{1}(x)$. We then consider the minimum second error probability subject to the constraint that the first error probability is at most $\epsilon$: $\beta (\epsilon |P_{0}\| P_{1}): = \min _{T}\{ \beta (T|P_{0}\| P_{1})|\alpha (T|P_{0}\| P_{1}) \leq \epsilon \} $.
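By the Neyman–Pearson lemma, an optimal test for $\beta(\epsilon|P_{0}\|P_{1})$ supports $P_{0}$ on the outcomes with the largest likelihood ratio $P_{0}(x)/P_{1}(x)$ and randomizes on a single boundary outcome. A minimal Python sketch for finite distributions (the function name `beta_np` is ours, not taken from the text):

```python
import math


def beta_np(eps, P0, P1):
    """Minimum second error beta(eps|P0||P1) over randomized tests T with alpha(T|P0||P1) <= eps.

    P0, P1: lists of probabilities on the same finite set.  T(x) = 0 (support P0)
    on the outcomes with the largest ratio P0(x)/P1(x) until their P0-mass reaches
    1 - eps; the boundary outcome is randomized.  beta = sum_x (1 - T(x)) P1(x).
    """
    need = 1.0 - eps            # P0-mass on which we must support P0 so that alpha <= eps
    if need <= 0.0:
        return 0.0              # eps >= 1: always supporting P1 is allowed
    beta = 0.0
    order = sorted(range(len(P0)),
                   key=lambda i: math.inf if P1[i] == 0 else P0[i] / P1[i],
                   reverse=True)
    for i in order:
        if P0[i] == 0.0:
            continue            # supporting P0 here would add to beta without reducing alpha
        if P0[i] >= need:
            return beta + P1[i] * need / P0[i]   # randomized test on the boundary outcome
        beta += P1[i]
        need -= P0[i]
    return beta


print(beta_np(0.05, [0.6, 0.3, 0.1], [0.2, 0.3, 0.5]))
```

Randomization matters here: without it, $\beta(\epsilon|P_{0}\|P_{1})$ would jump between the values attainable by deterministic tests.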

To overcome the difficulty caused by the supremum $\sup _{P_{X_{n}}}$ in [9], Nagaoka34) derived the following meta converse inequality, which holds for a given channel $P_{Y|X}$ and an arbitrary distribution $Q_{Y}$ on $\mathcal{Y}$:

| \begin{equation} M(\epsilon |P_{Y|X}) \leq \max_{P_{X}}\beta (\epsilon |P_{XY}\| P_{X} \times Q_{Y})^{-1} \end{equation} | [14] |

Also, the author and Nagaoka derived the dependence test bound as follows [33, Remark 15]. For a given distribution $P_{X}$ on $\mathcal{X}$ and a positive integer $N$, there exists a code $(E,D)$ of size $N$ (i.e., $|(E,D)| = N$) such that$^{2}$

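| \begin{equation} \epsilon (E,D) \leq \epsilon + N\beta (\epsilon |P_{XY}\| P_{X} \times P_{Y}). \end{equation} | [15] |

Here, $P_{XY}(x,y) := P_{X}(x)P_{Y|X}(y|x)$, and $P_{Y}$ is its marginal on $\mathcal{Y}$. Choosing $N$ in [15] as the largest integer not exceeding $\delta\beta (\epsilon |P_{XY}\| P_{X} \times P_{Y})^{-1}$ makes the error at most $\epsilon + \delta$, which yields, up to this integer rounding,

| \begin{equation} M(\epsilon + \delta |P_{Y|X}) \geq \max_{P_{X}}\delta \beta (\epsilon |P_{XY}\| P_{X} \times P_{Y})^{ - 1}. \end{equation} | [16] |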
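As an illustration of how [14] and [16] bracket $M$, consider $n$ uses of a binary symmetric channel with crossover probability $p < 1/2$; this channel, the uniform choices of $P_{X}$ and $Q_{Y}$, and all function names are assumptions of the sketch. With $P_{X}$ uniform, $P_{Y}$ is uniform, so taking $Q_{Y} = P_{Y}$ lets both bounds use the same $\beta$, and by the symmetry of the channel this $\beta$ does not depend on $P_{X}$ when $Q_{Y}$ is uniform. The Hamming distance between input and output is a sufficient statistic, so $\beta$ reduces to a Neyman–Pearson test between two binomial distributions.

```python
import math


def log_binom_pmf(n, d, q):
    """Natural log of C(n, d) q^d (1 - q)^(n - d)."""
    return (math.lgamma(n + 1) - math.lgamma(d + 1) - math.lgamma(n - d + 1)
            + d * math.log(q) + (n - d) * math.log1p(-q))


def beta_bsc(eps, n, p):
    """beta(eps | P_XY || P_X x P_Y) for n uses of a BSC(p), p < 1/2, with uniform P_X.

    The Hamming distance d is Bin(n, p) under P_XY and Bin(n, 1/2) under
    P_X x P_Y.  The likelihood ratio decreases in d, so the optimal test keeps
    P_XY for small d and randomizes on one boundary value of d.
    """
    need, beta = 1.0 - eps, 0.0
    if need <= 0.0:
        return 0.0
    for d in range(n + 1):
        p0 = math.exp(log_binom_pmf(n, d, p))
        p1 = math.exp(log_binom_pmf(n, d, 0.5))
        if p0 >= need:
            return beta + p1 * need / p0   # randomize on this boundary value of d
        beta += p1
        need -= p0
    return beta


def log2_meta_converse(eps, n, p):
    """Upper bound [14] on log2 M(eps) with Q_Y uniform."""
    return -math.log2(beta_bsc(eps, n, p))


def log2_dependence_test(eps, delta, n, p):
    """Lower bound [16] on log2 M(eps + delta), ignoring the integer rounding of N."""
    return math.log2(delta) - math.log2(beta_bsc(eps, n, p))


n, p = 200, 0.11
print("n(1 - h(p)):", n * (1 + p * math.log2(p) + (1 - p) * math.log2(1 - p)))
print("log2 M(0.01) <=", log2_meta_converse(0.01, n, p))
print("log2 M(0.01) >=", log2_dependence_test(0.005, 0.005, n, p))
```

Both bounds fall well below $n$ times the capacity at this block length, which is exactly the finite-length gap that the second-order analysis later quantifies.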

Then, using [16], the author and Nagaoka derived the ≥ part of [9], including its quantum extension. Also, using [14], they derived another expression for [9]:

| \begin{align} &\lim_{n \to \infty}\frac{1}{n}\log M(\epsilon |P_{Y_{n}|X_{n}})\\ &\quad= \inf_{\{ Q_{Y_{n}}\}}\sup_{\{P_{X_{n}}\}}\sup_{R}\biggl\{R\biggm|{\lim_{n \to \infty}}P_{X_{n},Y_{n}}\biggl\{(x,y) \in \mathcal{X}_{n} \\ &\qquad\times \mathcal{Y}_{n}\biggm|\frac{1}{n}\log\frac{P_{Y_{n}|X_{n}}(y|x)}{Q_{Y_{n}}(y)} \leq R\biggr\}\leq \epsilon\biggr\}. \end{align} | [17] |

In the classical case, which is not so complicated, several important results can be recovered directly from [9]. In the quantum case, however, everything becomes more complicated, and the formula [17] is needed.

When the information source obeys the i.i.d. distribution $P_{X}^{n}$ of $P_{X}$, both the optimal compression rate and the optimal uniform random number generation rate asymptotically equal $H(P_{X})$. Hence, one may expect that data compressed to the entropy rate $H(P_{X})$ form a uniform random number. However, this heuristic argument does not constitute a proof, so the statement had the status of folklore in source coding, and its validity remained unconfirmed for a long time.

Han36) tackled this problem by using the method of information spectrum. Han focused on the normalized relative entropy $\frac{1}{n}D(P_{X}^{n} \circ \phi _{n}^{ - 1}\| P_{\mathcal{Y}_{n},\text{mix}})$ as the criterion measuring the difference between the generated random number and a uniform random number, and showed that the folklore in source coding is valid under this criterion.36) However, the normalized relative entropy is too loose a criterion to guarantee the quality of a uniform random number: a random number can be judged uniform by this criterion and still be statistically distinguishable from a truly uniform random number. In particular, when a random number is used for cryptography, we need a more rigorous criterion to judge its uniformity.
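A toy example shows why the normalized relative entropy is too loose. Assume, for illustration only, that a generated $n$-bit string has its first bit fixed to 0 while the remaining $n-1$ bits are uniform. Then $\frac{1}{n}D = \frac{1}{n}$ bit, which vanishes as $n$ grows, whereas the variational distance to the uniform distribution stays at $1/2$, so inspecting the first bit alone distinguishes the two distributions with success probability $3/4$. The function names below are hypothetical.

```python
import math
from itertools import product


def first_bit_fixed(n):
    """Distribution on {0,1}^n whose first bit is always 0; the other n - 1 bits are uniform."""
    return {x: (2.0 ** -(n - 1) if x[0] == 0 else 0.0) for x in product((0, 1), repeat=n)}


def normalized_kl_to_uniform(P, n):
    """(1/n) D(P || uniform on {0,1}^n), in bits."""
    return sum(p * math.log2(p * 2 ** n) for p in P.values() if p > 0) / n


def variational_distance_to_uniform(P, n):
    """(1/2) sum_x |P(x) - 2^{-n}|, the criterion gamma used in the text."""
    return 0.5 * sum(abs(p - 2.0 ** -n) for p in P.values())


for n in (4, 8, 12):
    P = first_bit_fixed(n)
    print(n, normalized_kl_to_uniform(P, n), variational_distance_to_uniform(P, n))
```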

In contrast, the criterion $\gamma(\phi_{n})$, i.e., the variational distance to the uniform distribution, is the most popular criterion because it directly gives the statistical distinguishability between a truly uniform random number and a given random number.37) In particular, when this criterion equals 0, the random number is truly uniform. Hence, when a random number is used for cryptography, we should accept it only when it passes this criterion. Moreover, Han36) proved that the folklore conjecture in source coding is not valid when the variational distance is adopted as the criterion.

On the other hand, to clarify the incompatibility between data compression and uniform random number generation, the author8) developed a theory of finite-block-length codes for both tasks. In this analysis, he applied the method of information spectrum to the second-order $\sqrt{n}$ term, as shown in Fig. 5. That is, using the varentropy $V(P_{X}): = \sum\nolimits_{x \in \mathcal{X}}P_{X} (x)( - \log P_{X}(x) - H(P_{X}))^{2}$, the central limit theorem guarantees that

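| \begin{align} &\lim_{n \to \infty}P_{X}^{n}\Bigl\{x^{n} \in \mathcal{X}^{n}\Bigm|\frac{-\log P_{X}^{n}(x^{n}) - nH(P_{X})}{\sqrt{n}} \leq \sqrt{V(P_{X})}\,\Phi^{-1}(\epsilon)\Bigr\} \\ &\quad= \epsilon, \end{align} | [18] |

where $\Phi$ is the cumulative distribution function of the standard normal distribution. Applying the information spectrum method at this second-order scale, we obtain the following expansion of the maximum size $S(\epsilon|P_{X}^{n})$ of a uniform random number with error $\epsilon$:

| \begin{align} &\log S(\epsilon |P_{X}^{n}) \\ &\quad = nH(P_{X}) + \sqrt{n} \sqrt{V(P_{X})} \Phi^{-1}(\epsilon) + o(\sqrt{n}). \end{align} | [19] |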

Now, we consider data compression, in which we define the minimum compressed size $R(\epsilon |P_{X}^{n})$ with decoding error ϵ in the same way. Then, the asymptotic expansion is5),8)

| \begin{align} &\log R(\epsilon |P_{X}^{n}) \\ &\quad = nH(P_{X}) - \sqrt{n} \sqrt{V(P_{X})} \Phi^{ - 1}(\epsilon) + o(\sqrt{n}). \end{align} | [20] |
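To see how [19] and [20] behave numerically, the Python sketch below evaluates both expansions without their $o(\sqrt{n})$ terms for an i.i.d. Bernoulli($p$) source, and compares [20] with the exact minimum compressed size obtained by keeping the most probable sequences. The Bernoulli source, the use of base-2 logarithms (bits), and the function names are assumptions of this sketch; `statistics.NormalDist().inv_cdf` supplies $\Phi^{-1}$.

```python
import math
from statistics import NormalDist


def entropy_varentropy(p):
    """Entropy H and varentropy V (in bits) of a Bernoulli(p) source."""
    probs = (p, 1 - p)
    H = -sum(q * math.log2(q) for q in probs)
    V = sum(q * (-math.log2(q) - H) ** 2 for q in probs)
    return H, V


def second_order_log_sizes(n, p, eps):
    """Right-hand sides of [19] and [20] (in bits) without the o(sqrt(n)) terms."""
    H, V = entropy_varentropy(p)
    z = NormalDist().inv_cdf(eps)            # Phi^{-1}(eps)
    log_S = n * H + math.sqrt(n * V) * z     # uniform random number generation
    log_R = n * H - math.sqrt(n * V) * z     # data compression
    return log_S, log_R


def exact_log_compression_size(n, p, eps):
    """log2 of the minimum number of sequences whose total probability is >= 1 - eps.

    For p < 1/2 the most probable sequences are those with the fewest ones, so
    whole Hamming-weight classes are added in order of increasing weight.
    """
    covered, count = 0.0, 0
    for k in range(n + 1):
        prob_one_seq = p ** k * (1 - p) ** (n - k)
        size = math.comb(n, k)
        if covered + size * prob_one_seq >= 1 - eps:
            extra = math.ceil((1 - eps - covered) / prob_one_seq)  # part of this class
            return math.log2(count + extra)
        covered += size * prob_one_seq
        count += size
    return math.log2(count)


n, p, eps = 200, 0.1, 0.05
log_S, log_R = second_order_log_sizes(n, p, eps)
print(f"[19]: {log_S:.1f}  [20]: {log_R:.1f}  exact: {exact_log_compression_size(n, p, eps):.1f}")
```

The gap between the exact value and [20] is of lower order than $\sqrt{n}$, consistent with the $o(\sqrt{n})$ remainder in the expansion.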

Asymptotic trade-off relation between the errors of data compression and uniform random number generation: At the second-order coding rate, the minimum error of data compression equals one minus the minimum error of uniform random number generation, i.e., it is the probability of the complementary event.

Now, we fix the conversion rate up to the second order $\frac{1}{\sqrt{n}}$. When we apply an operation from the system $\mathcal{X}^{n}$ to a system of size $e^{nH(P_{X}) + \sqrt{n} R}$, the sum of the errors of data compression and uniform random number generation is approximately 1. This trade-off relation shows that data compression and uniform random number generation are incompatible with each other. Indeed, since data compression works in the direction opposite to uniform random number generation, the second-order analysis explicitly shows that their errors obey a trade-off relation rather than being compatible.
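Solving [19] and [20] for the error at a fixed second-order rate $R$ makes this trade-off explicit: the uniform random number generation error tends to $\Phi(R/\sqrt{V(P_{X})})$ and the data compression error to $\Phi(-R/\sqrt{V(P_{X})})$, and these sum to 1. A small Python check (function and argument names are ours):

```python
import math
from statistics import NormalDist


def asymptotic_errors(R, V):
    """Limiting errors at output size exp(n H(P_X) + sqrt(n) R), read off from [19] and [20].

    [19]: R = sqrt(V) Phi^{-1}(eps)   ->  eps_uniform  = Phi(R / sqrt(V))
    [20]: R = -sqrt(V) Phi^{-1}(eps)  ->  eps_compress = Phi(-R / sqrt(V))
    """
    z = R / math.sqrt(V)
    std_normal = NormalDist()
    return std_normal.cdf(z), std_normal.cdf(-z)


for R in (-1.0, 0.0, 0.5, 2.0):
    eps_u, eps_c = asymptotic_errors(R, V=0.9)
    print(f"R={R:+.1f}: uniform {eps_u:.3f}, compression {eps_c:.3f}, sum {eps_u + eps_c:.3f}")
```

Raising $R$ helps compression and hurts uniformity by exactly the same amount, which is the incompatibility described above.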

Although the evaluation of the optimal performance up to the second-order coefficient only approximates the finite-length analysis, it also reveals this trade-off relation. This application shows the importance of second-order analysis. Because the uniformity of a random number is closely related to security, this type of evaluation has been applied to security analysis.38) This trade-off relation also plays an important role when the compressed data are used as a scrambling random variable for another piece of information.39)

Section III explained that the problem of the ultimate performance of optical communication can be treated as quantum channel coding. When the communication medium has quantum properties, it opens the possibility of new communication styles that cannot be realized with conventional technology. Quantum cryptography was proposed by Bennett and Brassard40) in 1984 as a technology for distributing secure keys by using quantum media. Even when the key is eavesdropped during distribution, this method enables us to detect the eavesdropper with high probability. Hence, this method realizes secure key distribution and is called quantum key distribution (QKD).

Now, we explain the original QKD protocol based on single-photon transmission. In QKD, the sender, Alice, needs to generate four kinds of states in the two-dimensional system $\mathbb{C}^{2}$, namely, $|0\rangle$, $|1\rangle$, and $| \pm \rangle:= \frac{1}{\sqrt{2}}(|0\rangle \pm |1\rangle )$.$^{3}$ Here, $\{ |0\rangle ,|1\rangle \} $ is called the bit basis, and $\{ | \pm \rangle \} $ is called the phase basis. Also, the receiver, Bob, needs to measure the received quantum state by using either the bit basis or the phase basis.

The original QKD protocol40) is the following.

In this protocol, if the eavesdropper, Eve, performs a measurement during transmission, the quantum state is disturbed with non-negligible probability because she does not know the basis of the transmitted quantum state a priori. When the number of qubits measured by Eve is not negligible, Alice and Bob will find disagreements in step (4). Hence, Alice and Bob will discover the eavesdropping with high probability.
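This detection mechanism can be reproduced with a toy simulation. The sketch below is our own illustration, not the exact step list of the original protocol: it models single-qubit statistics classically (measuring in the preparation basis returns the encoded bit, measuring in the other basis returns a uniformly random bit), lets a hypothetical Eve perform an intercept-resend attack in a randomly chosen basis, keeps only the positions where Alice's and Bob's bases agree, and reports the error rate among those positions. Without Eve the kept bits agree; under this particular attack about 25% of them disagree.

```python
import random


def bb84_error_rate(num_pulses, eavesdrop, seed=0):
    """Error rate among sifted bits in a toy BB84 simulation (bases: 0 = bit, 1 = phase)."""
    rng = random.Random(seed)

    def measure(bit, prep_basis, meas_basis):
        # Same basis: deterministic outcome; other basis: uniformly random outcome.
        return bit if prep_basis == meas_basis else rng.randint(0, 1)

    sifted, errors = 0, 0
    for _ in range(num_pulses):
        a_bit, a_basis = rng.randint(0, 1), rng.randint(0, 1)
        bit, basis = a_bit, a_basis            # state travelling on the channel
        if eavesdrop:                          # intercept-resend in a random basis
            e_basis = rng.randint(0, 1)
            bit, basis = measure(bit, basis, e_basis), e_basis
        b_basis = rng.randint(0, 1)
        b_bit = measure(bit, basis, b_basis)
        if a_basis == b_basis:                 # keep only matching bases
            sifted += 1
            errors += a_bit != b_bit
    return errors / sifted if sifted else 0.0


print(bb84_error_rate(20000, eavesdrop=False), bb84_error_rate(20000, eavesdrop=True))
```

In the real protocol only a random subset of the sifted bits is compared publicly; here all of them are compared to keep the sketch short.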

B. Random hash functions.

The original protocol assumes noiseless quantum communication using single photons. Hence, the raw keys are not necessarily secure when the channel is noisy. To realize secure communication even over a noisy channel, we need a method for generating secure keys from keys partially leaked to Eve. Such a process is called privacy amplification. In this process, we apply a hash function, which maps a larger set to a smaller set. In the security analysis, we often employ a hash function chosen according to a random variable (a random hash function). A typical class of random hash functions is the following. A random hash function $f_{R}$ from $\mathbb{F}_{2}^{n}$ to $\mathbb{F}_{2}^{m}$ is called universal$_2$64),65) when, for any distinct $x, x' \in \mathbb{F}_{2}^{n}$,

| \begin{equation} \Pr\{f_{R}(x) = f_{R}(x')\} \leq 2^{-m} \end{equation} | [21] |

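This class can be relaxed as follows. A random hash function $f_{R}$ from $\mathbb{F}_{2}^{n}$ to $\mathbb{F}_{2}^{m}$ is called δ-almost universal$_2$ when, for any distinct $x, x' \in \mathbb{F}_{2}^{n}$,

| \begin{equation} \Pr\{f_{R}(x) = f_{R}(x')\} \leq \delta 2^{-m} \end{equation} | [22] |

When $f_{R}$ is linear, $f_{R}(x) = f_{R}(x')$ holds if and only if $x - x' \in \mathrm{Ker}\,f_{R}$, so [22] is equivalent to the condition that, for any nonzero $x \in \mathbb{F}_{2}^{n}$,

| \begin{equation} \Pr\{x \in \mathrm{Ker}\,f_{R}\} \leq \delta 2^{-m} \end{equation} | [23] |

A linear random hash function $f_{R}$ is called δ-almost dual universal$_2$ when, for any nonzero $x \in \mathbb{F}_{2}^{n}$,

| \begin{equation} \Pr\{x \in (\mathrm{Ker}\,f_{R})^{\bot}\} \leq \delta 2^{-n+m} \end{equation} | [24] |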
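For small parameters, the smallest constants δ in [23] and [24] can be computed by brute force. The Python sketch below uses the random Toeplitz family $f_{R}(x) = T_{R}x$, where the $m \times n$ Toeplitz matrix $T_{R}$ is filled from $n+m-1$ uniformly random seed bits; this family is a standard construction chosen for illustration and is not necessarily the concatenated Toeplitz matrix $M_{R}$ mentioned in the text. Since $f_{R}$ is linear, [23] amounts to counting seeds with $T_{R}x = 0$, and $(\mathrm{Ker}\,f_{R})^{\bot}$ is the row space of $T_{R}$ over $\mathbb{F}_{2}$. With a uniform seed this family is universal$_2$, so the first constant comes out as exactly 1. Function names are hypothetical.

```python
from itertools import product


def toeplitz_rows(seed, n, m):
    """Rows (as n-bit integers) of the m x n Toeplitz matrix T[i][j] = seed[i - j + n - 1]."""
    rows = []
    for i in range(m):
        row = 0
        for j in range(n):
            if seed[i - j + n - 1]:
                row |= 1 << j
        rows.append(row)
    return rows


def is_in_kernel(rows, x):
    """True if T x = 0 over F_2, i.e. every row has even overlap with x."""
    return all(bin(r & x).count("1") % 2 == 0 for r in rows)


def row_space(rows):
    """All F_2-linear combinations of the rows, i.e. (Ker T)^perp."""
    space = {0}
    for r in rows:
        space |= {v ^ r for v in space}
    return space


def deltas(n, m):
    """Smallest delta satisfying [23] and [24] for the uniformly seeded Toeplitz family."""
    seeds = list(product((0, 1), repeat=n + m - 1))
    matrices = [toeplitz_rows(s, n, m) for s in seeds]
    spaces = [row_space(T) for T in matrices]
    nonzero = range(1, 2 ** n)
    worst_kernel = max(sum(is_in_kernel(T, x) for T in matrices) for x in nonzero)
    worst_dual = max(sum(x in S for S in spaces) for x in nonzero)
    delta_universal = worst_kernel / len(seeds) * 2 ** m
    delta_dual = worst_dual / len(seeds) * 2 ** (n - m)
    return delta_universal, delta_dual


print(deltas(4, 2))
```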

Classes of (dual) universal$_2$ hash functions and security: A hash function is used to realize privacy amplification. This picture shows the relations between classes of hash functions and security. In cryptography theory, strong security is required of a hash function.37) The class of universal$_2$ hash functions was proposed in the literature.64),65) Using the leftover hash lemma,62),63) Renner66) proposed using this class for quantum cryptography. Tomamichel et al.68) proposed using the class of δ-almost universal$_2$ hash functions with δ close to 1. Tsurumaru et al.57) proposed using δ-almost dual universal$_2$ hash functions with δ constant or increasing polynomially. As an example of a δ-almost dual universal$_2$ hash function, the author and his collaborators56) constructed a secure hash function that requires a shorter random seed and less computation. Whereas the security analysis in67) is based on universal$_2$ hash functions, that in53)–55) is based on δ-almost dual universal$_2$ hash functions.

When $R$ is not a uniform random number, the above concatenated Toeplitz matrix $M_{R}$ is not universal$_2$; fortunately, it is δ-almost dual universal$_2$. Hence, we can evaluate security within the framework of δ-almost dual universal$_2$ hash functions; that is, in realistic settings, the concept of δ-almost dual universal$_2$ works well. Note that there exists a 2-almost universal$_2$ hash function whose resulting random number is insecure (Fig. 7). Hence, the concept of δ-almost dual universal$_2$ is more useful than that of δ-almost universal$_2$.

C. Single-photon pulse with noise.

To realize security even with a noisy quantum channel, we need to modify the original QKD protocol. Since the modified protocol involves error correction, finite-length analysis plays an important role in guaranteeing the security of a real QKD system. Here, for simplicity, we discuss only the finite-length security analysis under the Gaussian approximation.

The modified QKD protocol is the following. Steps (1), (2), and (3) are the same as in the original.

To perform the finite-length security analysis approximately, we consider the following items.

| \begin{equation} \text{E}_{R}\epsilon (E_{f_{R}},D_{f_{R},\text{min}}|P_{Z^{n}}) \leq \delta e^{nh((b - c)/n) - \bar{k}}, \end{equation} | [25] |

To state our security criterion, we denote the information transmitted via the public channel by $u$ and its distribution by $P_{\text{pub}}$. Depending on the public information $u$, we denote the state on the composite system of Alice's and Eve's systems, the state on Alice's system, the state on Eve's system, and the length of the final key by $\rho_{A,E|u}$, $\rho_{A|u}$, $\rho_{E|u}$, and $s(u)$, respectively. We denote the completely mixed state of length $s(u)$ by $\rho_{A,\text{mix}|s(u)}$. Then, as in [53, (3)], the security criterion is given by

| \begin{equation} \frac{1}{2}\sum_{u}P_{\text{pub}}(u)\|\rho_{A,E|u} - \rho _{A,\text{mix}|s(u)} \otimes \rho_{E|u}\|_{1}. \end{equation} | [26] |
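When Eve's system is classical, all states in [26] are diagonal and the trace norm reduces to an $\ell_1$ distance between probability distributions. The following Python sketch evaluates this classical special case only; the data layout (dictionaries keyed by the public message $u$, keys encoded as integers in $[0, 2^{s(u)})$) and the function name are assumptions made for illustration.

```python
from collections import defaultdict


def security_criterion(P_pub, P_AE, key_len):
    """Classical special case of [26].

    P_pub:   {u: probability of public message u}
    P_AE:    {u: {(key, e): P_{AE|u}(key, e)}}, key an integer in [0, 2**s(u))
    key_len: {u: s(u)}
    Returns (1/2) sum_u P_pub(u) sum_{key, e} |P_{AE|u}(key, e) - 2^{-s(u)} P_{E|u}(e)|.
    """
    total = 0.0
    for u, pu in P_pub.items():
        joint = P_AE[u]
        P_E = defaultdict(float)               # Eve's marginal P_{E|u}
        for (_, e), p in joint.items():
            P_E[e] += p
        uniform = 2.0 ** -key_len[u]
        l1 = sum(abs(joint.get((k, e), 0.0) - uniform * pe)
                 for k in range(2 ** key_len[u]) for e, pe in P_E.items())
        total += pu * 0.5 * l1
    return total


copy = {(0, 0): 0.5, (1, 1): 0.5}                           # Eve holds a copy of the key bit
indep = {(a, e): 0.25 for a in (0, 1) for e in (0, 1)}      # key independent of Eve
print(security_criterion({0: 1.0}, {0: copy}, {0: 1}),
      security_criterion({0: 1.0}, {0: indep}, {0: 1}))
```

A perfectly secret key gives 0, while a key fully known to Eve gives the maximum value $1 - 2^{-s(u)}$, here $1/2$.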

Combining the above four items, we can derive the sacrifice bit length $\bar{k}(c)$ as a function of $c$. Although the exact formula for $\bar{k}(c)$ is complicated, it can be asymptotically expanded as [53, (53)]

| \begin{align} \bar{k}(c) &= nh\left(\frac{c}{l}\right) \\ &\quad - \frac{\sqrt{n}}{2}h'\left(\frac{c}{l}\right)\sqrt{\frac{c}{l}\left(1 - \frac{c}{l}\right)\left(1 + \frac{l}{n}\right)\frac{n}{l}} \Phi^{-1}\left(\frac{\epsilon^{2}}{2}\right) \\ &\quad+ o(\sqrt{n}). \end{align} | [27] |
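The expansion [27] is straightforward to evaluate numerically. In the Python sketch below, $h$ is the binary entropy in natural logarithms (matching the $e^{nh(\cdot)}$ form of [25]), $h'(x) = \log\frac{1-x}{x}$ is its derivative, `statistics.NormalDist().inv_cdf` supplies $\Phi^{-1}$, and the $o(\sqrt{n})$ term is dropped. We read $l$ as the number of check bits and $c$ as the number of errors observed among them; since the items defining these quantities are not reproduced here, this reading is an assumption. Because $\Phi^{-1}(\epsilon^{2}/2) < 0$ for small $\epsilon$, the correction term raises $\bar{k}(c)$ above $nh(c/l)$ whenever $c/l < 1/2$.

```python
import math
from statistics import NormalDist


def h(x):
    """Binary entropy in nats."""
    if x in (0.0, 1.0):
        return 0.0
    return -x * math.log(x) - (1 - x) * math.log(1 - x)


def h_prime(x):
    """Derivative of h: log((1 - x) / x)."""
    return math.log((1 - x) / x)


def sacrifice_length(n, l, c, eps):
    """Gaussian approximation [27] of k-bar(c) without the o(sqrt(n)) term (requires 0 < c < l)."""
    q = c / l
    spread = math.sqrt(q * (1 - q) * (1 + l / n) * n / l)
    correction = math.sqrt(n) / 2 * h_prime(q) * spread * NormalDist().inv_cdf(eps ** 2 / 2)
    return n * h(q) - correction


print(sacrifice_length(n=10**5, l=10**4, c=300, eps=1e-5))
```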

Here, we should remark that this security analysis does not assume that the quantum channel is memoryless. To avoid this assumption, we introduce a random permutation and exploit the effect of random sampling, which allows us to regard the errors in both bases as obeying the hypergeometric distribution. However, owing to the required property of the hash functions, we do not need to apply the random permutation in the real protocol; we need to apply only random sampling to estimate the error rate of the phase basis.

Here, we need to consider the reliability, that is, the agreement of the final keys. For this purpose, we need to attach a key verification step as follows [122, Section VIII].

However, unlike the agreement of the keys, the amount of information leaked about the final keys cannot be checked by such a verification step. Hence, the security analysis is more important than the agreement of the keys.

D. Weak coherent pulse with noise.

Next, we discuss weak coherent pulses with noise; a device using them is illustrated in Fig. 8. Since the above protocol assumes single-photon pulses, it cannot guarantee security when a pulse occasionally contains multiple photons. Since it is quite difficult to generate a single-photon pulse, we usually employ phase-randomized weak coherent pulses, whose states are written as $\sum\nolimits_{n = 0}^{\infty }e^{ - \mu } \frac{\mu ^{n}}{n!}|n\rangle \langle n|$, where μ is called the intensity. That is, weak coherent pulses include multiple-photon pulses, as shown in Fig. 9. In this case, there are several multiple-photon pulses among the n received pulses. In optical communication, only a small fraction of pulses reaches the receiver. Moreover, the fraction of multiple-photon pulses on Alice's side differs from that on Bob's side, because the detection probability on Bob's side depends on the number of photons.
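The photon-number distribution above is Poisson with mean μ, so the probabilities of vacuum, single-photon, and multiple-photon pulses follow directly. The small Python sketch below (function name ours) also reports the fraction of multiple-photon pulses among the non-vacuum pulses emitted by Alice; how this fraction changes after transmission depends on the channel and is not modelled here.

```python
import math


def photon_number_probabilities(mu):
    """(P[0 photons], P[1 photon], P[>= 2 photons]) for a phase-randomized
    weak coherent pulse of intensity mu, i.e. a Poisson(mu) photon number."""
    p0 = math.exp(-mu)
    p1 = mu * math.exp(-mu)
    return p0, p1, 1.0 - p0 - p1


for mu in (0.1, 0.5, 1.0):
    p0, p1, p_multi = photon_number_probabilities(mu)
    print(f"mu={mu}: vacuum {p0:.4f}, single {p1:.4f}, multi {p_multi:.4f}, "
          f"multi among non-vacuum {p_multi / (1 - p0):.4f}")
```

Lowering μ suppresses multiple-photon pulses but also makes most pulses empty, which is why the intensity must be chosen carefully.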

QKD system developed by NEC. Copyright (2015) by NEC: This device was used for a long-term evaluation demonstration in 2015 by the “Cyber Security Factory” (core facility for counter-cyber-attack activities in NEC).58)

Multiple photons in a weak coherent pulse: A weak coherent pulse contains multiple photons with a certain probability, which depends on the intensity of the pulse.

As the first step of the security analysis, we need to estimate the fractions of vacuum pulses, single-photon pulses, and multiple-photon pulses among the n received pulses. Note that Bob may erroneously register a detection even for a vacuum pulse. To obtain this estimate, we use the fact that the fraction of multiple-photon pulses depends on the intensity μ. Hence, the detection rates of vacuum pulses, single-photon pulses, and multiple-photon pulses on Bob's side can be estimated from the detection rates at more than three different intensities by solving simultaneous equations.44)–50),113) By observing the error rate for each intensity and basis, we can estimate the error rates of both bases for vacuum pulses, single-photon pulses, and multiple-photon pulses. This idea is called the decoy method. Based on this discussion, we modify steps (1), (2), (3), and (4). However, we do not need to change steps (5) and (6), in which we choose the error-correcting code and the sacrifice bit length.
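One commonly used variant of the decoy idea, with a vacuum intensity and one weak decoy intensity, turns the simultaneous equations into a lower bound on the single-photon yield $Y_{1}$. Writing $Q_{\mu} = \sum_{k} e^{-\mu}\frac{\mu^{k}}{k!}Y_{k}$ for the detection rate at intensity μ, the two-photon terms cancel in $e^{\nu}Q_{\nu} - \frac{\nu^{2}}{\mu^{2}}e^{\mu}Q_{\mu}$ for $0 < \nu < \mu$, and the remaining terms with three or more photons are non-positive, which gives the bound computed below. This is an illustration only, not the estimation procedure of refs. 44)–50),113); the channel model $Y_{k} = 1 - (1 - Y_{0})(1 - \eta)^{k}$ with a hypothetical transmittance η, used to generate test data, is likewise an assumption.

```python
import math


def detection_rate(mu, yields, max_photons=60):
    """Q_mu = sum_k e^{-mu} mu^k / k! * Y_k, truncated at max_photons."""
    return sum(math.exp(-mu) * mu ** k / math.factorial(k) * yields(k)
               for k in range(max_photons + 1))


def single_photon_yield_lower_bound(mu, nu, q_mu, q_nu, y0):
    """Lower bound on Y_1 from signal intensity mu, decoy intensity nu (0 < nu < mu), vacuum yield y0.

    e^{nu} Q_nu - (nu^2/mu^2) e^{mu} Q_mu
        = (1 - nu^2/mu^2) Y_0 + nu (1 - nu/mu) Y_1 + (terms with >= 3 photons, each <= 0),
    so dropping the last terms and solving for Y_1 gives a lower bound.
    """
    bound = (q_nu * math.exp(nu) - (nu ** 2 / mu ** 2) * q_mu * math.exp(mu)
             - (1 - nu ** 2 / mu ** 2) * y0)
    return bound / (nu * (1 - nu / mu))


eta, y0 = 0.01, 1e-5   # hypothetical transmittance and background (vacuum) yield


def true_yield(k):
    return 1 - (1 - y0) * (1 - eta) ** k


mu, nu = 0.5, 0.1
y1_lower = single_photon_yield_lower_bound(mu, nu, detection_rate(mu, true_yield),
                                           detection_rate(nu, true_yield), y0)
print(y1_lower, true_yield(1))
```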

As the second step of the security analysis, when n received pulses are composed of n0 vacuum pulses, n1 single-photon pulses, and n2 multiple-photon pulses, we need to estimate the leaked information after the privacy amplification with sacrifice bit length $\bar{k}$. In the current case, we replace items (i) and (iii) by the following.

| \begin{equation} \Gamma([y]): = \underset{{x^{n} \in [y]:(*)}}{\mathrm{argmin}}\| x^{n}\|, \end{equation} | [28] |

| \begin{equation} \text{E}_{R}\epsilon (E_{f_{R}},D_{f_{R},\text{min}}|P_{Z^{n}}) \leq \delta e^{n_{1}h(t_{1}/n_{1}) + n_{2} - \bar{k}}. \end{equation} | [29] |

Finally, we combine the original items (ii) and (iv) with the modified items (i′) and (iii′) above. However, because the estimation of the partition $n_{0}$, $n_{1}$, $n_{2}$ of the n qubits is complicated, the whole discussion becomes very complicated. Based on such an analysis, after a long calculation, we obtain a formula for the sacrifice bit length, as shown in Fig. 10.

Key generation rate with weak coherent pulses: We employ two intensities: a signal intensity and a decoy intensity. Using the difference between the detection rates of pulses with the two intensities, we can estimate the fraction of multiple photons in the detected pulses. Here, we set the signal intensity to 1. This graph shows the key generation rate as a function of the decoy intensity, based on the calculation formula given in the author's work.55)

Because the raw keys are not necessarily secure when the channel is noisy or when two or more photons are transmitted, many studies have sought ways to guarantee security when the communication device has such imperfections. For this purpose, we need to consider partial information leakage whose amount is bounded by the degree of imperfection. Shor and Preskill41) and Mayers42) showed that privacy amplification generates secure final keys even over a noisy channel when the light source correctly generates a single photon. Gottesman et al.43) showed that these final keys can be secure even when the light source occasionally generates multiple photons, provided that the fraction of multiple-photon pulses is sufficiently small. The light source used in actual quantum optical communication is weak coherent light, which probabilistically generates some multiple-photon pulses, as shown in Fig. 9. Hence, this kind of extension was required for practical use. Hwang44) proposed an efficient method for estimating the fraction of multiple-photon pulses, called the decoy method, in which the sender randomly chooses pulses with different intensities.

Until this stage, QKD was studied mainly by individual researchers. However, project-style research was needed to realize QKD because the required studies involved closer interaction between theorists and experimentalists. A Japanese project, the ERATO Quantum Computation and Information Project, tackled the problem of guaranteeing the security of a real QKD system. Since this project included an experimental group as well as theoretical groups, it naturally proceeded to a series of studies of QKD from a more practical viewpoint. First, a project member, Hamada,109),110) studied the relation between quantum error-correcting codes and the security of QKD more deeply. Then, another project member, Wang,46),48) extended the decoy method; this extension was developed independently by a group at the University of Toronto.45),47) Tsurumaru50) and the author49) further extended the method. These extended decoy methods provide a design for choosing the intensities of the transmitted pulses. Further, jointly with the Japanese company NEC, the experimental group demonstrated QKD over 100 km of spooled standard telecom fiber.111)

Here, we note that the theoretical results above assume that error correction and privacy amplification in steps (5) and (6) are applied with an infinitely large block length. They did not give a quantitative evaluation of security for a finite block length. They also did not address the construction of privacy amplification, so these results are not sufficient for realizing a QKD system. To resolve this issue, as a member of this project, the author52) approximately evaluated the security for a finite block length n when the channel has noise and the light source correctly generates a single photon. This work has two key points. The first is the evaluation of information leakage via the phase error probability of virtual error correction in the phase basis, which is summarized as item (i). This evaluation is based on the duality relation in quantum theory, which typically appears in the relation between position and momentum. The second is the approximate evaluation of the phase error probability using the central limit theorem, which is obtained by combining items (iii) and (iv). This analysis is essentially equivalent to deriving the coefficient of the transmission rate up to the second order $\frac{1}{\sqrt{n}}$. However, this analysis assumed a single-photon source. Under this assumption, the author discussed the optimization of the ratio of check bits.112) In response to a strong request from the leader of the ERATO project, and with helpful suggestions from the experimental group, he used the decoy method to extend part of his analysis to the case where the light source sometimes generates multiple photons,54) replacing item (iv) with (iv′). Based on this analytical result, the ERATO project experimentally demonstrated QKD with weak coherent pulses over a real optical fiber, with security quantitatively guaranteed under the Gaussian approximation.51)

Another Japanese project, run by the National Institute of Information and Communications Technology (NICT), has continuously worked toward the realization of QKD. After the ERATO project, the author participated in the NICT project from 2011 to 2016. In 2010, NICT organized the Tokyo QKD Network by connecting QKD devices operated by NICT, NEC, Mitsubishi Electric, NTT, Toshiba Research Europe, ID Quantique, the Austrian Institute of Technology, the Institute of Quantum Optics and Quantum Information, and the University of Vienna.59) Also, as part of the NICT project, NEC developed a QKD system, shown in Fig. 8, and performed a long-term evaluation experiment in 2015.58)

After the ERATO project, two main theoretical problems remained, and their resolution was strongly required by the NICT project because they are linked to the security evaluation of the installed QKD systems. The first was the complete design of privacy amplification. Indeed, in the above security analysis based on the phase error probability, the range of usable random hash functions was not clarified: only one example of a hash function was given in the paper,54) and only a weaker version of item (ii) was available at that time. To resolve this problem, as members of the NICT project, Tsurumaru and the author clarified which hash functions can be used to guarantee the security of a QKD system,57) which yields the current item (ii). They introduced δ-almost dual universal$_2$ hash functions, as explained in Section VI-B. In these studies, Tsurumaru taught the author the practical importance of constructing hash functions from an industrial viewpoint, based on his experience as a researcher at Mitsubishi Electric.

The second problem was to remove the Gaussian approximation used in52) from the finite-length analysis. Security analysis usually requires rigorous evaluation without approximation, so this requirement was essential for the security evaluation. In Hayashi and Tsurumaru,53) we succeeded in removing this approximation and obtained a rigorous security analysis for the single-photon case. The paper53) also clarified the security criterion and simplified the derivation, as discussed in Subsection VI-C. In response to a strong request from the NICT project, the author extended the finite-length analysis to the case with multiple photons by employing the decoy method and performing a complicated statistical analysis.55) The transmission rate in a typical case is shown in Fig. 10. This study clarified the requirements on physical devices for applying the obtained security formula. In this study,55) the author also improved an existing decoy protocol, and he optimized the choice of intensities under the improved protocol.113) Finally, we remark that only such a mathematical analysis can guarantee the security of QKD. This is quite similar to the situation in which the security of conventional schemes such as RSA is guaranteed by mathematical analysis of computational complexity.108) In this respect, QKD differs from conventional communication technology.

Here, we should address the security analysis based on the leftover hash lemma62),63) as another research stream of QKD. This method came from cryptography theory and was initiated by the Renner group at the Swiss Federal Institute of Technology in Zurich (ETH).66) The advantage of this method is that it evaluates information leakage directly, without evaluating the virtual phase error probability. This method also enables a security analysis with a finite block length.67) However, their finite-block-length analysis is looser than ours in Hayashi and Tsurumaru,53) because their bound67) cannot yield the second-order rate based on the central limit theorem, whereas the bound in Hayashi and Tsurumaru53) recovers it. Further, although their method is potentially precise, it requires many parameters to be estimated in the finite-block-length analysis. Although their method improves the asymptotic generation rate,114) the larger number of parameters to be estimated enlarges the error of channel estimation in the finite-length setting. Hence, they need to reduce the number of parameters to be estimated. In their finite-block-length analysis, they simplified the analysis so that only the virtual phase error probability has to be estimated. This simplification improves the approach based on the leftover hash lemma because it yields a security evaluation based on the virtual phase error probability more directly. However, this approach did not consider security with weak coherent pulses. As another merit, the approach based on the leftover hash lemma later influenced security analysis in the classical setting.76),96)–99)

To discuss the future of QKD, we now describe other QKD projects. Several projects were organized in Hefei in 2012 and in Jinan in 2013.60) In 2013, the US company Battelle implemented a QKD system for commercial use in Ohio using a device from ID Quantique.61) Battelle plans to establish a QKD system between Ohio and Washington, DC, over a distance of 700 km.61) Also, in China, the Beijing–Shanghai project has almost completed a QKD system connecting Shanghai, Hefei, Jinan, and Beijing over a distance of more than 2,000 km.60) These QKD networks are composed of a collection of QKD links over relatively short distances. However, quite recently, a Chinese group succeeded in deploying a satellite for quantum communication. Since most of these developments consist of networks of quantum communication channels, it is necessary to develop theoretical results that exploit the properties of quantum networks for QKD systems.

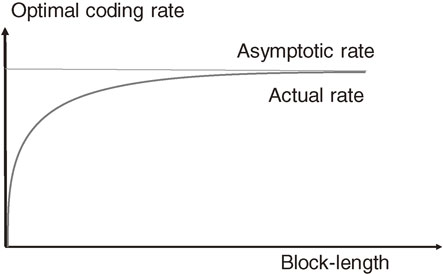

Now, we return to classical channel coding under the memoryless condition. In channel coding, it is important to clarify the difference between the asymptotic transmission rate and the actual optimal transmission rate for a given block length, as shown in Fig. 11, because for a long time many researchers mistakenly thought that the actual optimal transmission rate equals the asymptotic transmission rate.

Relation between the asymptotic transmission rate and the actual transmission rate depending on the block length: Usually, the actual transmission rate is smaller than the asymptotic transmission rate. As the block length increases, the actual transmission rate approaches the asymptotic transmission rate.

When the channel $P_{Y|X}$ is a binary additive noise channel with noise distribution $P_{Z}$ and the channel $P_{Y^{n}|X^{n}}$ is the $n$-fold product of $P_{Y|X}$, simply combining [10] and [18] yields the asymptotic expansion of $\log M(\epsilon |P_{Y^{n}|X^{n}})$:

| \begin{align} M(\epsilon|P_{Y^{n}|X^{n}}) &= n(\log 2 - H(P_{Z})) \\ &\quad+ \sqrt{n} \sqrt{V(P_{Z})} \Phi^{-1}(\epsilon) + o(\sqrt{n}) \end{align} | [30] |
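As a rough numerical illustration of [30] and Fig. 11, the sketch below evaluates the first two terms of [30] for binary additive noise $Z \sim$ Bernoulli($p$), dropping the $o(\sqrt{n})$ remainder. It assumes natural logarithms and takes $V(P_{Z})$ to be the variance of $-\log P_{Z}(Z)$; the function name is hypothetical, and the value is a normal approximation rather than the exact optimum.

```python
import math
from statistics import NormalDist


def bsc_normal_approximation(n: int, p: float, eps: float) -> float:
    """First two terms of [30] in nats, for noise Z ~ Bernoulli(p), 0 < p < 1/2.

    Assumption: V(P_Z) is the variance of -log P_Z(Z). The o(sqrt(n)) term is dropped.
    """
    entropy = -p * math.log(p) - (1 - p) * math.log(1 - p)   # H(P_Z)
    varentropy = p * (1 - p) * math.log((1 - p) / p) ** 2    # V(P_Z)
    first_order = n * (math.log(2) - entropy)
    second_order = math.sqrt(n) * math.sqrt(varentropy) * NormalDist().inv_cdf(eps)
    return first_order + second_order
```

For $\epsilon < 1/2$, $\Phi^{-1}(\epsilon) < 0$, so the per-symbol rate `bsc_normal_approximation(n, p, eps) / n` stays below $\log 2 - H(P_{Z})$ and approaches it as $n$ grows, which is the behavior sketched in Fig. 11.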

For a general discrete memoryless channel $P_{Y|X}$, the corresponding expansion is

| \begin{equation} M(\epsilon|P_{Y^{n}|X^{n}}) = \begin{cases} n\max_{P_{X}}I(P_{X},P_{Y|X}) \\ \quad{}+{} \sqrt{n}\sqrt{V_{-}(P_{Y|X})} \Phi^{-1}(\epsilon) \\ \quad{}+{} o(\sqrt{n}) \quad\text{if $\epsilon < \dfrac{1}{2}$}\\ n\max_{P_{X}}I(P_{X},P_{Y|X}) \\ \quad{}+{} \sqrt{n}\sqrt{V_{+}(P_{Y|X})} \Phi^{-1}(\epsilon) \\ \quad{}+{} o(\sqrt{n}) \quad\text{if $\epsilon \geq \dfrac{1}{2}$} \end{cases} , \end{equation} | [31] |

where $V_{+}(P_{Y|X})$ and $V_{-}(P_{Y|X})$ are defined by

| \begin{align} {V_{+}(P_{Y|X})} &:= {\max_{P_{X}}\sum_{x}P_{X}(x) \sum_{y}P_{Y|X}(y|x)}\\ &\quad {\cdot \biggl(\log\frac{P_{Y|X}(y|x)}{P_{Y}(y)} - D(P_{Y|X = x}\|P_{Y})\biggr)^{2}} \end{align} | [32] |

| \begin{align} {V_{-}(P_{Y|X})} &:= {\min_{P_{X}}\sum_{x}P_{X}(x)\sum_{y}P_{Y|X}(y|x)}\\ &\quad {\cdot \biggl(\log\frac{P_{Y|X}(y|x)}{P_{Y}(y)} - D(P_{Y|X = x}\|P_{Y})\biggr)^{2},} \end{align} | [33] |

Here, the maximum and the minimum are taken over the input distributions $P_{X}$ that attain the capacity $\max_{P_{X}}I(P_{X},P_{Y|X})$, and $P_{Y}$ is the output distribution induced by $P_{X}$.

To resolve this problem, we choose $P_{X}$ as the distribution realizing the minimum in [33] or the maximum in [32] and substitute $P_{X}^{n}$ into $Q_{n}$ in formula [17]. Then, we can derive the ≤ part of [31]. Although this expansion was first derived by Strassen5) in 1962, the derivation here is much simpler, which shows the effectiveness of the method of information spectrum.
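The summand inside [32] and [33] is the conditional variance of the information density $\log P_{Y|X}(y|x)/P_{Y}(y)$ for a fixed input distribution $P_{X}$. The sketch below computes it, together with $I(P_{X},P_{Y|X})$, for a finite channel matrix `w[x][y]` $= P_{Y|X}(y|x)$ in nats; the function name is hypothetical, and taking the maximum or minimum over capacity-achieving inputs is left to the caller.

```python
import math
from typing import Sequence


def mutual_information_and_variance(p_x: Sequence[float],
                                    w: Sequence[Sequence[float]]) -> tuple[float, float]:
    """Return I(P_X, P_{Y|X}) and the conditional variance summand of [32]/[33]."""
    n_y = len(w[0])
    # Output distribution P_Y induced by P_X
    p_y = [sum(p_x[x] * w[x][y] for x in range(len(p_x))) for y in range(n_y)]
    info, variance = 0.0, 0.0
    for x, px in enumerate(p_x):
        if px == 0:
            continue
        # D(P_{Y|X=x} || P_Y): conditional mean of the information density
        d_x = sum(w[x][y] * math.log(w[x][y] / p_y[y]) for y in range(n_y) if w[x][y] > 0)
        info += px * d_x
        # Conditional second central moment of the information density given x
        variance += px * sum(w[x][y] * (math.log(w[x][y] / p_y[y]) - d_x) ** 2
                             for y in range(n_y) if w[x][y] > 0)
    return info, variance
```

For a binary symmetric channel the uniform input is the unique capacity-achieving distribution, so $V_{+} = V_{-}$, and the function reproduces the first- and second-order quantities of [30].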

Using this method, the author derived the second-order coefficient of the transmission rate of the additive white Gaussian noise channel, which had been unknown until then; this result was published in 2009.9) In fact, his first result was only a rederivation of Strassen's result: when he presented it at a domestic meeting,69) Uyematsu pointed out Strassen's earlier work. To go beyond Strassen's result, the author applied the method to the additive white Gaussian noise channel, a typical model in wireless communication, and obtained the following expansion.

| \begin{align} &\log M(\epsilon|S,N) \\ &\quad= \frac{n}{2}\log\left(1 + \frac{S}{N}\right) + \sqrt{n}\sqrt{\cfrac{\cfrac{S^{2}}{N^{2}} + \cfrac{2S}{N}}{2\biggl(1 + \cfrac{S}{N}\biggr)^{2}}}\,\Phi^{-1}(\epsilon) + o(\sqrt{n}), \end{align} | [34] |

where $S$ and $N$ denote the signal power and the noise power, respectively.
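The sketch below evaluates the first two terms of the expansion for the additive white Gaussian noise channel in nats, using the standard dispersion $V = \frac{(S/N)(S/N + 2)}{2(1 + S/N)^{2}}$ as the square of the second-order coefficient and dropping the $o(\sqrt{n})$ term. The function name and the parameter `snr` $= S/N$ are assumptions made for illustration.

```python
import math
from statistics import NormalDist


def awgn_normal_approximation(n: int, snr: float, eps: float) -> float:
    """Normal approximation of log M(eps | S, N) in nats, with snr = S/N."""
    capacity = 0.5 * math.log1p(snr)                     # (1/2) log(1 + S/N)
    dispersion = snr * (snr + 2) / (2 * (1 + snr) ** 2)  # stays below 1/2 nats^2
    return n * capacity + math.sqrt(n * dispersion) * NormalDist().inv_cdf(eps)
```

Because the dispersion is bounded by $1/2$ for every signal-to-noise ratio, the gap to $\frac{n}{2}\log(1 + S/N)$ grows only like $\sqrt{n}$, so the per-symbol rate approaches capacity as $n$ increases.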

In fact, a group at Princeton University, mainly Verdú and Polyanskiy, tackled this problem independently. In their papers,6),7) they considered the relation between channel coding and simple statistical hypothesis testing, and independently derived two bounds, the dependence test bound and the meta converse inequality, which coincide with the classical special cases of the bounds obtained by the author and Nagaoka33) and by Nagaoka.34) Since their results6) are limited to the classical case, their scope is narrower than that of the preceding results.33),34) Verdú and Polyanskiy then rederived Strassen's result by directly evaluating these two bounds, without using the method of information spectrum. They also independently derived the second-order coefficient of the optimal transmission rate for the additive white Gaussian noise channel in 2010.6) Because the Princeton group's result had a large impact on the information theory community at that time, their paper received the best paper award of the IEEE Information Theory Society in 2011, jointly with the preceding paper by the author.9)

As explained above, the Japanese group obtained some of the same results several years before the Princeton group but received much less publicity. The Princeton group addressed a demand in the information theory community and presented their results very effectively. In particular, since their research activity was confined to the information theory community, their audience was well focused, which allowed them to create a scientific boom in this direction. In contrast, the Japanese group studied the same topic from a standpoint far from the demand of the community because their study originated in quantum information theory. In particular, their research funds were intended for the study of quantum information, so they had to present their work to quantum information audiences, who were less interested in these results. Also, because their work spanned too wide a research area, they could not devote sufficient effort to explaining their results to the proper audiences at that time. Hence, their papers attracted less attention. For example, few Japanese researchers knew of the paper9) when it received the IEEE award in 2011. After this award, this research direction became much more popular and was applied to a great many topics in information theory.10)–14),17),71),72),76) In particular, third-order analysis has been applied to channel coding.15) These activities were reviewed in a recent book.74)

Although this research direction is attracting much attention, we need to be careful when evaluating its practical impact. These studies consider finite-block-length analysis of the optimal rate over all codes, including codes whose computational complexity is too high for implementation. Hence, the obtained rate cannot necessarily be achieved by implementable codes. To resolve this issue, we need to discuss the optimal rate among codes with moderate computational complexity. Because no existing study addresses this type of finite-block-length analysis, such a study is strongly recommended for the future. Also, a realistic system is not necessarily memoryless, so we need to discuss memory effects. To address this issue, jointly with Watanabe, the author extended this work to channels with additive Markovian noise, which covers the case in which the channel has Markovian memory.71) While this model covers many types of realistic channels, it is not trivial to apply the results in71) to wireless communication because the effect of fading on the channel coefficients is complicated to address. This is an interesting future problem.

After this breakthrough, the Princeton group extended their idea to many topics in channel coding and data compression.10),11),14),70) On the other hand, in addition to the above Markovian extension, the author, jointly with Tomamichel, extended this work to quantum systems,73) providing a unified framework for second-order theory in quantum systems that covers data compression with side information, secure uniform random number generation, and simple hypothesis testing. At the same time, Li75) directly derived the second-order asymptotics of simple statistical hypothesis testing in the quantum case. However, the second-order theory for simple statistical hypothesis testing has limited significance on its own; it is more meaningful in relation to other topics in information theory.

The quantum cryptography explained above offers secure key distribution based on physical laws.

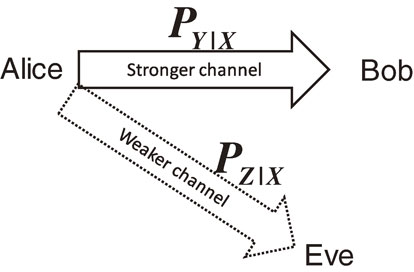

The classical counterpart of quantum cryptography is physical layer security, which offers information theoretical security based on several physical assumptions from classical mechanics. As a typical model, Wyner77) formulated the wire-tap channel model, which was investigated more deeply by Csiszár and Körner.78) This model assumes two channels, as shown in Fig. 12: a channel $P_{Y|X}$ from the authorized sender (Alice) to the authorized receiver (Bob) and a channel $P_{Z|X}$ from the authorized sender to the eavesdropper (Eve). When Alice's original signal is more strongly correlated with Bob's received signal than with Eve's, that is, when a suitable input distribution $P_{X}$ satisfies the condition $I(P_{X},P_{Y|X}) > I(P_{X},P_{Z|X})$, the authorized users can communicate without any information leakage by using a suitable code. More precisely, secure communication is possible if and only if there exists a suitable joint distribution $P_{VX}$ between the input system $\mathcal{X}$ and another system $\mathcal{V}$ such that the condition $I(P_{V},P_{Y|V}) > I(P_{V},P_{Z|V})$ holds, where $P_{Y|V}(y|v): = \sum\nolimits_{x \in \mathcal{X}}P_{Y|X} (y|x)P_{X|V}(x|v)$ and $P_{Z|V}$ is defined in the same way.

Wire-tap channel model. Eve is assumed to have a weaker connection to Alice than Bob does.
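To make the condition $I(P_{X},P_{Y|X}) > I(P_{X},P_{Z|X})$ concrete, the sketch below scans binary input distributions on a grid for two given channel matrices. It checks only the simpler sufficient condition with $\mathcal{V} = \mathcal{X}$ (no auxiliary system), and the function names and grid are hypothetical choices for illustration.

```python
import math
from typing import Sequence


def mutual_information(p_x: Sequence[float], w: Sequence[Sequence[float]]) -> float:
    """I(P_X, P_{Y|X}) in nats for a finite channel matrix w[x][y]."""
    p_y = [sum(p_x[x] * w[x][y] for x in range(len(p_x))) for y in range(len(w[0]))]
    return sum(p_x[x] * w[x][y] * math.log(w[x][y] / p_y[y])
               for x in range(len(p_x)) for y in range(len(w[0]))
               if p_x[x] > 0 and w[x][y] > 0)


def best_binary_input_advantage(w_bob, w_eve, grid: int = 1000) -> tuple[float, float]:
    """Maximize I(P_X,P_{Y|X}) - I(P_X,P_{Z|X}) over P_X = (q, 1 - q) on a grid.

    A positive maximum means the sufficient condition (with V = X) holds.
    """
    best_q, best_gap = 0.0, -math.inf
    for i in range(grid + 1):
        q = i / grid
        gap = mutual_information([q, 1 - q], w_bob) - mutual_information([q, 1 - q], w_eve)
        if gap > best_gap:
            best_q, best_gap = q, gap
    return best_q, best_gap
```

For example, with binary symmetric channels of crossover 0.05 to Bob and 0.2 to Eve, the maximum is attained at the uniform input and is positive; swapping the two channels makes the advantage non-positive for every binary input, since Bob's channel is then a degraded version of Eve's.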

Although the channel is often assumed to be stationary and memoryless, the general setting can be discussed by using the information spectrum method.95) That paper explicitly pointed out a relation between the wire-tap channel and the channel resolvability discussed in Section IV. This idea has been employed in many subsequent studies.126),138),139),142) Watanabe and the author123) discussed the second-order asymptotics of channel resolvability. Also, by extending the idea of the meta converse inequality to the wire-tap channel, Tyagi, Watanabe, and the author showed a relation between the wire-tap channel and hypothesis testing.125) Based on these results, Yang et al.124) investigated finite-block-length bounds for wire-tap channels without Gaussian approximation. Also, taking construction complexity into account, the author and Matsumoto [128, Section XI] proposed another type of finite-length analysis for wire-tap channels. Its quantum extension has also been discussed.117),118) However, the wire-tap channel model requires Alice and Bob to know the channel $P_{Z|X}$ to Eve. Hence, although it is a typical model for information theoretic security, this model is somewhat unrealistic because Alice and Bob cannot identify Eve's behavior. In particular, the model assumes that Eve has a weaker connection to Alice than Bob does, as shown in Fig. 12. So, it is quite hard to find a realistic situation in which the original wire-tap channel model is applicable.

Fortunately, this model has more realistic derivatives: one is secret sharing,135),136) and another is secure network coding.81)–85),129) In secret sharing, there is one sender, Alice, and k receivers, Bob1, …, Bobk. Alice splits her information into k parts and sends them to the respective Bobs such that certain subsets of Bobs cannot recover the original message. For example, assume that there are two Bobs, X1 is the original message, and X2 is an independent uniform random number. If Alice sends the exclusive OR of X1 and X2 to Bob1 and sends X2 to Bob2, neither Bob alone can recover the original message. When both Bobs cooperate, however, they can recover it. In the general case, for any given numbers k1 < k2 ≤ k, we can design a code such that no set of k1 Bobs can recover the original message but any set of k2 Bobs can.130)
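The two-Bob example above can be sketched in a few lines, treating the message X1 as a byte string and drawing the uniform pad X2 from Python's `secrets` module. The function names are hypothetical; this illustrates only the two-party XOR scheme, not the general k1-out-of-k2 construction.

```python
import secrets


def split_xor(message: bytes) -> tuple[bytes, bytes]:
    """Return (X1 xor X2, X2): the share for Bob1 and the share for Bob2."""
    x2 = secrets.token_bytes(len(message))  # independent uniform pad X2
    share1 = bytes(a ^ b for a, b in zip(message, x2))
    return share1, x2


def combine_xor(share1: bytes, share2: bytes) -> bytes:
    """Bob1 and Bob2 cooperate: (X1 xor X2) xor X2 = X1."""
    return bytes(a ^ b for a, b in zip(share1, share2))
```

Each share alone is uniformly distributed and independent of X1, so a single Bob learns nothing, while `combine_xor(*split_xor(m)) == m` for any message `m`.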

Secure network coding is a more difficult task. In secure network coding, Alice sends her information to the receiver through a network, in which the information is transmitted via intermediate links, each of which transfers a part of the information. Secure network coding is a method to guarantee security when some of the intermediate links are eavesdropped by Eve. Such a method can be realized by applying the wire-tap channel model to the case in which Eve obtains information from some of the intermediate links.81)–85),129) When each intermediate link carries the same amount of information, the required task can be regarded as a special case of secret sharing.

However, this method depends on the structure of the network, and it is quite difficult for Alice to know this structure. Hence, it is necessary to develop a coding method that does not depend on the network structure. Such a coding method is called universal secure network coding and has been developed by several researchers.86),131)–134) These studies assume only that the information processing at each node is linear and that the network structure does not change during transmission. In particular, the security can be evaluated even for finite-block-length codes.86),133),134) Since it is quite hard to tap all of the links, this kind of security is, from a cost-benefit viewpoint, sufficiently useful for ordinary people in practice. To see why this kind of assumption is natural, consider the everyday case in which an important message is sent in two e-mails: the first contains the message encrypted with a secret key, and the second contains the key. This practice assumes that at most one of the two links is eavesdropped.

B. Secure key distillation.

As another type of information theoretical security, Ahlswede and Csiszár80) and Maurer79) proposed secure key distillation. Assume that two authorized users, Alice and Bob, have random variables X and Y, respectively, and the eavesdropper, Eve, has another random variable Z. When the mutual information $I(X;Y)$ between Alice and Bob is larger than the mutual information $I(X;Z)$ between Alice and Eve or larger than the mutual information $I(Y;Z)$ between Bob and Eve, and when their information is given by the n-fold iid distribution of a given joint distribution $P_{XYZ}$, Alice and Bob can extract secure final keys.
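The role of the "or" in this condition can be seen in a hypothetical toy distribution: X is a uniform bit, Bob sees it through a binary symmetric channel with crossover 0.2, and Eve sees it through an independent one with crossover 0.1. Eve then knows more about X than Bob does, yet $I(X;Y) > I(Y;Z)$, so the condition still holds through the second branch, where Bob's variable serves as the reference for the key. The helper names below are our own.

```python
import math
from collections import defaultdict
from itertools import product


def marginal(pmf, idx):
    """Marginal pmf on the coordinates listed in idx."""
    out = defaultdict(float)
    for outcome, prob in pmf.items():
        out[tuple(outcome[i] for i in idx)] += prob
    return out


def mutual_information(pmf, a, b):
    """I(A;B) in bits, where a and b are coordinate indices of the joint pmf."""
    p_ab, p_a, p_b = marginal(pmf, (a, b)), marginal(pmf, (a,)), marginal(pmf, (b,))
    return sum(p * math.log2(p / (p_a[(u,)] * p_b[(v,)]))
               for (u, v), p in p_ab.items() if p > 0)


def bit_with_two_bsc_observers(p_bob: float, p_eve: float):
    """Joint pmf of (X, Y, Z) with X uniform, Y = X xor N1, Z = X xor N2."""
    pmf = {}
    for x, n1, n2 in product((0, 1), repeat=3):
        prob = 0.5 * (p_bob if n1 else 1 - p_bob) * (p_eve if n2 else 1 - p_eve)
        pmf[(x, x ^ n1, x ^ n2)] = prob
    return pmf


pmf = bit_with_two_bsc_observers(0.2, 0.1)
i_xy = mutual_information(pmf, 0, 1)
i_xz = mutual_information(pmf, 0, 2)
i_yz = mutual_information(pmf, 1, 2)
print(f"I(X;Y)={i_xy:.3f}  I(X;Z)={i_xz:.3f}  I(Y;Z)={i_yz:.3f}")
```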

Recently, secure key distillation has been developed in a more practical way by importing methods developed for, or motivated by, quantum cryptography.38),76),96)–100) In particular, its finite-block-length analysis has been well developed, including the Markovian case, when Alice's random variable agrees with Bob's random variable.76),96)–99) Such an analysis has been extended to the more general case in which Alice's random variable does not necessarily agree with Bob's.127) Although some random hash functions were originally constructed for quantum cryptography, they can also be used for privacy amplification in secure key distillation.56),64),65) Hence, under several natural assumptions for secure key distillation, it is possible to evaluate the security precisely based on finite-block-length analysis.

We assume that X is binary and that all information is given by the n-fold iid distribution of a given joint distribution $P_{XYZ}$. In this case, the protocol is essentially given by steps (5) and (6) of QKD, where the code C, its dimension k, and the sacrifice bit length $\bar{k}$ are determined a priori according to the joint distribution $P_{XYZ}$. We denote the information exchanged via the public channel by u and its distribution by $P_{\text{pub}}$. The security is evaluated by the criterion

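| \begin{align} \gamma (C,\{f_{r}\}) &:= \frac{1}{2}\sum_{u}\sum_{r}P_{R}(r)P_{\text{pub}}(u) \\ &\quad\cdot\sum_{x \in \mathbb{F}_{2}^{k - \bar{k}}}\sum_{z \in \mathcal{Z}^{n}}|P_{f_{r}(X^{n})Z^{n}|U}(x,z|u) \\ &\quad- P_{\mathbb{F}_{2}^{k - \bar{k}},\text{mix}}(x)P_{Z^{n}|U}(z|u)|, \end{align} | [35] |

where $f_{r}$ is the hash function specified by the random seed $r$, which is chosen according to $P_{R}$, and $P_{\mathbb{F}_{2}^{k - \bar{k}},\text{mix}}$ is the uniform distribution on $\mathbb{F}_{2}^{k - \bar{k}}$.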

To bound this criterion, we employ the conditional Rényi entropy of order $1 + s$:

| \begin{align} &{H_{1 + s}(X|Z|P_{XZ})} \\ &\quad {:= -\frac{1 + s}{s}\log\left(\sum_{z \in \mathcal{Z}}P_{Z}(z)\left(\sum_{x \in \mathcal{X}}P_{X|Z}(x|z)^{1 + s}\right)^{\frac{1}{1 + s}}\right).} \end{align} | [36] |

Then, the criterion [35] satisfies the following bound:

| \begin{align} &\gamma (C,\{f_{r}\}) \\ &\quad \leq \left(1 + \frac{\sqrt{\delta}}{2}\right)\min_{s\in [0,1]}e^{\frac{s}{1 + s}(n\log2 - \bar{k} - nH_{1 + s}(X|Z|P_{XZ}))}. \end{align} | [37] |
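The sketch below evaluates the conditional Rényi entropy [36] for a finite joint distribution $P_{XZ}$ (in nats) and the right-hand side of [37], approximating the minimum over $s$ by a grid on $(0, 1]$. The function names and the grid are hypothetical, and $\bar{k}$ and $\delta$ are treated simply as given numbers in the units in which they appear in [37]; $\delta$ is a parameter of the hash function family and is not derived here.

```python
import math
from typing import Sequence


def conditional_renyi_entropy(p_xz: Sequence[Sequence[float]], s: float) -> float:
    """H_{1+s}(X|Z|P_XZ) as in [36], in nats; p_xz[x][z] is the joint pmf, s > 0."""
    n_x, n_z = len(p_xz), len(p_xz[0])
    total = 0.0
    for z in range(n_z):
        p_z = sum(p_xz[x][z] for x in range(n_x))
        if p_z == 0:
            continue
        # (sum_x P_{X|Z}(x|z)^{1+s})^{1/(1+s)}, weighted by P_Z(z)
        inner = sum((p_xz[x][z] / p_z) ** (1 + s) for x in range(n_x))
        total += p_z * inner ** (1 / (1 + s))
    return -(1 + s) / s * math.log(total)


def leakage_bound(p_xz, n: int, kbar: float, delta: float, grid: int = 100) -> float:
    """Right-hand side of [37], with the minimum over s taken on a grid in (0, 1].

    The exponent tends to 0 as s -> 0, so 0 is the starting value; minimizing the
    exponent before exponentiating avoids overflow when the bound is trivial.
    """
    best_exponent = 0.0
    for i in range(1, grid + 1):
        s = i / grid
        h = conditional_renyi_entropy(p_xz, s)
        exponent = s / (1 + s) * (n * math.log(2) - kbar - n * h)
        best_exponent = min(best_exponent, exponent)
    return (1 + math.sqrt(delta) / 2) * math.exp(best_exponent)
```

As a sanity check, when X is a uniform bit independent of Z, $H_{1+s}(X|Z|P_{XZ}) = \log 2$ for every $s$, so the bound reduces to $(1 + \sqrt{\delta}/2)e^{-\bar{k}/2}$, attained at $s = 1$; as $s \to 0$, $H_{1+s}$ approaches the conditional Shannon entropy $H(X|Z)$.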